Preparing for a Data Modelling interview is crucial for aspiring data professionals, as this role uniquely combines analytical skills with creative problem-solving. Data Modellers are responsible for designing and maintaining data structures that support business intelligence and analytics, making their work foundational to informed decision-making. Proper interview preparation not only boosts confidence but also equips candidates with the knowledge to articulate their expertise effectively. This comprehensive guide will cover essential concepts, common interview questions, practical exercises, and best practices to help you stand out in your Data Modelling interview and demonstrate your ability to transform complex data into actionable insights.

What to Expect in a Data Modelling Interview

In a Data Modelling interview, candidates can expect a blend of technical and behavioral questions focused on their understanding of data structures, normalization, and database design principles. Interviews may be conducted by data architects, data analysts, or hiring managers. The process typically includes an initial phone screening followed by one or two rounds of technical interviews, which may involve case studies, practical exercises, or whiteboard sessions to assess problem-solving skills. Additionally, candidates may encounter discussions about past projects to evaluate their hands-on experience and approach to data modeling challenges.

Data Modelling Interview Questions For Freshers

Data Modelling interview questions for freshers focus on essential concepts that lay the foundation for effective database design. Candidates should master basic principles such as entity-relationship modeling, normalization, and understanding various database types to excel in their interviews.

1. What is data modeling?

Data modeling is the process of creating a conceptual representation of data structures, relationships, and constraints within a database. It helps in organizing data to meet business requirements and provides a blueprint for how data will be stored, accessed, and managed. Data models can be categorized into conceptual, logical, and physical models.

2. What are entities and attributes in data modeling?

- Entity: An entity is a real-world object or concept that can be distinctly identified, such as a customer or product.

- Attribute: An attribute is a property or characteristic of an entity, such as a customer’s name, age, or address.

Understanding entities and attributes is essential for building effective data models, as they form the basic building blocks of any database structure.

3. Explain the concept of normalization.

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves decomposing tables into smaller, related tables and defining relationships between them. The main goals of normalization are to eliminate duplicate data, ensure data dependencies are logical, and simplify data management.

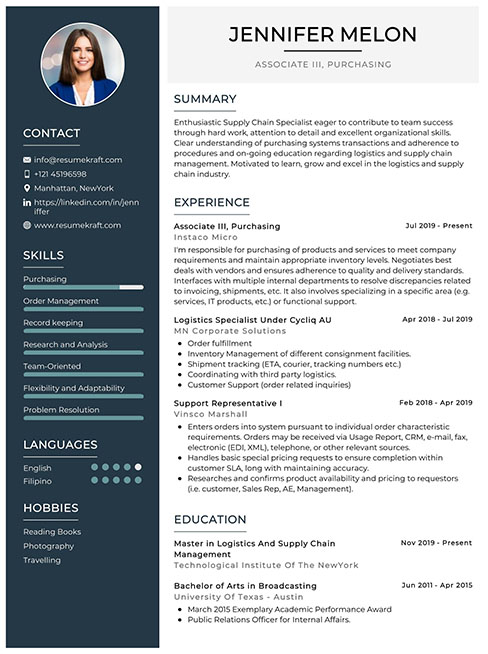

Build your resume in just 5 minutes with AI.

4. What are the different types of relationships in data modeling?

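- One-to-One: Each instance of one entity is associated with at most one instance of the other (for example, an employee and their assigned company laptop).

- One-to-Many: One instance of an entity can be associated with many instances of another (for example, one customer places many orders).

- Many-to-Many: Instances on both sides can be associated with many instances on the other side (for example, students and courses).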

Understanding these relationships is crucial for creating accurate data models that reflect real-world scenarios.

5. What is an Entity-Relationship Diagram (ERD)?

An Entity-Relationship Diagram (ERD) is a visual representation of the data model that illustrates the entities, their attributes, and the relationships between them. ERDs help in understanding the data structure and can be used as a tool for designing and communicating the database schema effectively.

6. What is a primary key?

A primary key is a unique identifier for a record in a database table. It ensures that each entry can be uniquely distinguished from others and cannot contain null values. Primary keys are essential for maintaining data integrity and establishing relationships between tables.

7. What is a foreign key?

A foreign key is an attribute (or set of attributes) in one table that refers to the primary key, or another unique key, of another table, establishing a relationship between the two tables. Foreign keys are crucial for maintaining referential integrity, ensuring that every reference points to a row that actually exists.

8. Explain the concept of data integrity.

- Entity Integrity: Ensures that each entity has a unique identifier and that no attribute of the primary key can be null.

- Referential Integrity: Ensures that foreign keys correctly reference valid primary keys.

- Domain Integrity: Ensures that all values in a column fall within a specific set of valid values.

Data integrity is vital for maintaining accurate and reliable data in a database.

9. What are the different normal forms in normalization?

- First Normal Form (1NF): Ensures that all columns contain atomic values and that each entry is unique.

- Second Normal Form (2NF): Builds on 1NF by ensuring all non-key attributes are fully functionally dependent on the primary key.

- Third Normal Form (3NF): Further refines 2NF by ensuring that every non-key attribute depends only on the primary key and not on other non-key attributes (no transitive dependencies).

Understanding these normal forms helps in structuring data efficiently and minimizing redundancy.

10. What is denormalization?

Denormalization is the process of intentionally introducing redundancy into a database by merging tables or adding redundant data. This technique is used to improve read performance and simplify complex queries at the cost of increased storage and potential data inconsistency. It is often used in data warehousing and reporting systems.

11. What is a data warehouse?

A data warehouse is a centralized repository designed for reporting and data analysis. It stores large amounts of historical data from various sources and is optimized for query performance rather than transaction processing. Data warehouses are typically used to support business intelligence activities, enabling organizations to make data-driven decisions.

12. What is the difference between OLTP and OLAP?

- OLTP (Online Transaction Processing): Focuses on managing transactional data and supports day-to-day operations with a high transaction volume.

- OLAP (Online Analytical Processing): Designed for data analysis and complex queries, OLAP systems aggregate and summarize large volumes of data for reporting purposes.

Understanding these differences is crucial for choosing the right database design for specific use cases.

13. What is a surrogate key?

A surrogate key is an artificial primary key that is used as a unique identifier for a record. Unlike natural keys that have business meaning, surrogate keys are typically integers or GUIDs generated by the system. They provide a simple, non-meaningful way to identify records and are often used to maintain data integrity when natural keys are not suitable.

14. Explain what a composite key is.

A composite key is a primary key that consists of two or more attributes that together uniquely identify a record in a table. Composite keys are used when a single attribute is not sufficient to ensure uniqueness. For example, in an order-line table, the combination of order ID and product ID may serve as a composite key: the same order contains several products, and the same product appears in many orders.

15. What is a star schema?

A star schema is a type of database schema that is used in data warehousing. It consists of a central fact table surrounded by dimension tables. The fact table contains quantitative data for analysis, while the dimension tables hold descriptive attributes related to the facts. Star schemas are designed for easy querying and improved performance in analytical applications.

Here are eight interview questions designed for freshers in Data Modelling. These questions cover fundamental concepts, basic syntax, and core features relevant to the field.

16. What is data modeling?

Data modeling is the process of creating a visual representation of a complex data system. It involves defining how data is connected, stored, and processed within a database. Data models serve as a blueprint for designing databases and facilitate communication among stakeholders regarding data structures and requirements. Proper data modeling helps in optimizing database performance and ensuring data integrity.

17. What are the different types of data models?

- Conceptual Data Model: This high-level model outlines what the system contains and how the data is structured without going into details about how it will be implemented.

- Logical Data Model: This model describes the data in a more detailed manner, including entities, attributes, and relationships, but still remains independent of the physical implementation.

- Physical Data Model: This model details how data is physically stored in the database, including data types, indexes, and the actual database schema.

Understanding these models is crucial for effective database design and implementation.

18. What is an entity-relationship diagram (ERD)?

An entity-relationship diagram (ERD) is a visual representation of the entities in a database and their relationships. In the classic Chen notation, it uses rectangles for entities, diamonds for relationships, and ovals for attributes. Crow's foot notation, another common style, lists attributes inside entity boxes and marks cardinality at the ends of relationship lines. ERDs help in understanding the data requirements and structure of a system, serving as a fundamental tool during the data modeling process.

19. What are primary keys and foreign keys?

- Primary Key: A primary key is a unique identifier for a record in a table. It ensures that each entry is distinct and can be used to retrieve data efficiently.

- Foreign Key: A foreign key is a field (or set of fields) in one table that refers to the primary key of another table. It establishes a relationship between the two tables and helps maintain referential integrity.

Understanding these keys is essential for designing relational databases and ensuring data consistency.

20. What is normalization and why is it important?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing large tables into smaller, related tables and defining relationships between them. The main goals are to eliminate duplicate data, ensure logical data dependencies, and simplify data management. Normalization is crucial for efficient database design and helps maintain data accuracy.

21. Can you explain the different normal forms?

- First Normal Form (1NF): A table is in 1NF if it contains only atomic (indivisible) values and each entry is unique.

- Second Normal Form (2NF): A table is in 2NF if it is in 1NF and all non-key attributes are fully functionally dependent on the primary key.

- Third Normal Form (3NF): A table is in 3NF if it is in 2NF and every non-key attribute depends only on the primary key, eliminating transitive dependencies.

These normal forms help in structuring the database correctly to minimize redundancy and improve data integrity.

22. What is a data warehouse?

A data warehouse is a centralized repository that stores large volumes of historical data from multiple sources. It is designed for query and analysis rather than transaction processing. Data warehouses enable organizations to consolidate data, perform complex queries, and generate reports for business intelligence. They typically use a star or snowflake schema for organizing data and optimizing performance.

23. What is the difference between OLTP and OLAP?

- OLTP (Online Transaction Processing): This system is designed for managing transaction-oriented applications. It focuses on fast query processing and maintaining data integrity in multi-user environments.

- OLAP (Online Analytical Processing): This system is designed for complex queries and data analysis. It allows users to perform multidimensional analysis of business data, enabling better decision-making.

Understanding the difference between OLTP and OLAP is essential for designing systems that meet specific business needs.

Data Modelling Intermediate Interview Questions

Data Modelling interview questions for intermediate candidates focus on essential concepts such as normalization, denormalization, schema design, and the use of ER diagrams. Candidates should understand practical applications of these concepts, performance considerations, and best practices for designing effective data models that meet business needs.

24. What is normalization in database design and why is it important?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing large tables into smaller, related tables and defining relationships between them. Normalization is important for several reasons. It eliminates duplicate data, ensures that data dependencies are logical, and simplifies data maintenance. Because each fact is stored once, inserts, updates, and deletes touch fewer rows and cannot leave conflicting copies behind. The trade-off is that some read queries need more joins.

25. Can you explain the different normal forms?

- First Normal Form (1NF): Ensures that each column contains atomic values and each record is unique.

- Second Normal Form (2NF): Achieved when a table is in 1NF and all non-key attributes are fully functionally dependent on the primary key.

- Third Normal Form (3NF): A table is in 2NF and has no transitive dependencies, meaning non-key attributes are not dependent on other non-key attributes.

- Boyce-Codd Normal Form (BCNF): A stricter version of 3NF where every determinant is a candidate key.

Understanding these normal forms helps in designing databases that minimize redundancy and enhance data integrity.
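To make "transitive dependency" concrete, here is a small sketch in plain Python. The table, its columns, and the helpers `fd_holds` and `project` are hypothetical and exist only for illustration. The sketch tests whether a functional dependency holds in sample rows and then performs a 3NF decomposition. Keep in mind that sample data can only refute a dependency, never prove it. Real dependencies come from business rules.

```python
from typing import Dict, Iterable, List, Mapping, Sequence, Tuple

Row = Mapping[str, object]


def fd_holds(rows: Iterable[Row], lhs: Sequence[str], rhs: Sequence[str]) -> bool:
    """Return False if the sample rows violate the functional dependency lhs -> rhs."""
    seen: Dict[Tuple, Tuple] = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        value = tuple(row[c] for c in rhs)
        # Violation: the same determinant values map to two different dependent values.
        if seen.setdefault(key, value) != value:
            return False
    return True


def project(rows: Iterable[Row], cols: Sequence[str]) -> List[Dict[str, object]]:
    """Keep only `cols` and drop duplicate rows, like SELECT DISTINCT."""
    seen, out = set(), []
    for row in rows:
        values = tuple(row[c] for c in cols)
        if values not in seen:
            seen.add(values)
            out.append(dict(zip(cols, values)))
    return out


# Hypothetical sample: emp_id is the key, but dept_name depends on dept_id,
# a non-key attribute (emp_id -> dept_id -> dept_name is a transitive dependency).
employees = [
    {"emp_id": 1, "name": "Ana", "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 2, "name": "Raj", "dept_id": 10, "dept_name": "Sales"},
    {"emp_id": 3, "name": "Li", "dept_id": 20, "dept_name": "Finance"},
]

print(fd_holds(employees, ["dept_id"], ["dept_name"]))  # True: dept_id -> dept_name
print(fd_holds(employees, ["dept_id"], ["name"]))       # False: dept_id does not determine name

# 3NF decomposition: move the transitively dependent attribute into its own table.
employee_3nf = project(employees, ["emp_id", "name", "dept_id"])
department_3nf = project(employees, ["dept_id", "dept_name"])
print(len(employee_3nf), len(department_3nf))  # 3 2
```

After the split, each department name is stored once. Renaming a department therefore becomes a single-row update instead of an update to every employee in that department.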

26. What is denormalization and when would you use it?

Denormalization is the process of intentionally introducing redundancy into a database by merging tables or adding redundant data to improve read performance. It is often used in scenarios where speed is critical, such as in data warehousing or when dealing with large-scale reporting systems. While it can enhance query performance, it may lead to increased complexity in data management and the risk of data anomalies.
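Here is a minimal sketch of the trade-off. It assumes SQLite through Python's built-in `sqlite3` module, and the table and trigger names are hypothetical. The order total is stored redundantly so that reads can skip the aggregate. The triggers carry the extra write-time cost of keeping that copy consistent.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    -- Denormalized copy of SUM(order_line.amount): reads skip the join and aggregate.
    order_total REAL NOT NULL DEFAULT 0
);
CREATE TABLE order_line (
    order_id INTEGER NOT NULL REFERENCES orders(order_id),
    line_no  INTEGER NOT NULL,
    amount   REAL NOT NULL,
    PRIMARY KEY (order_id, line_no)
);
-- The cost of redundancy: every write path must keep the copy in sync.
-- (An AFTER UPDATE trigger would also be needed, omitted here for brevity.)
CREATE TRIGGER order_line_ai AFTER INSERT ON order_line BEGIN
    UPDATE orders SET order_total = order_total + NEW.amount
    WHERE order_id = NEW.order_id;
END;
CREATE TRIGGER order_line_ad AFTER DELETE ON order_line BEGIN
    UPDATE orders SET order_total = order_total - OLD.amount
    WHERE order_id = OLD.order_id;
END;
""")

conn.execute("INSERT INTO orders (order_id) VALUES (1)")
conn.executemany("INSERT INTO order_line VALUES (?, ?, ?)", [(1, 1, 20.0), (1, 2, 5.5)])
conn.execute("DELETE FROM order_line WHERE order_id = 1 AND line_no = 2")

# Read path: a single-row lookup, no GROUP BY over order lines.
total = conn.execute("SELECT order_total FROM orders WHERE order_id = 1").fetchone()[0]
print(total)  # 20.0
```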

27. How would you design a star schema for a data warehouse?

A star schema consists of a central fact table surrounded by dimension tables. To design one, you should:

- Identify the business process to analyze and create a fact table containing measurable metrics.

- Determine the dimensions that will provide context to the facts and create corresponding dimension tables.

- Ensure that each dimension table has a primary key that can be referenced in the fact table.

This design simplifies queries and improves performance by reducing the number of joins needed during data retrieval.
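The steps above might look like this in a small, hypothetical sales mart, sketched with Python's built-in `sqlite3` module. Names such as `fact_sales`, `dim_date`, and `dim_product` are illustrative assumptions, not a prescribed standard.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_date (
    date_key      INTEGER PRIMARY KEY,   -- e.g. 20240115
    calendar_date TEXT NOT NULL,
    month         INTEGER NOT NULL,
    year          INTEGER NOT NULL
);
CREATE TABLE dim_product (
    product_key  INTEGER PRIMARY KEY,
    product_name TEXT NOT NULL,
    category     TEXT NOT NULL
);
-- Fact table: one row per sale, holding measures plus foreign keys to the dimensions.
CREATE TABLE fact_sales (
    date_key    INTEGER NOT NULL REFERENCES dim_date(date_key),
    product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
    quantity    INTEGER NOT NULL,
    amount      REAL NOT NULL
);
""")

conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?, ?)", [
    (20240115, "2024-01-15", 1, 2024),
    (20240210, "2024-02-10", 2, 2024),
])
conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", [
    (1, "Laptop", "Electronics"),
    (2, "Desk", "Furniture"),
])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", [
    (20240115, 1, 2, 2000.0),
    (20240115, 2, 1, 300.0),
    (20240210, 1, 1, 1000.0),
])

# A typical star join: group by dimension attributes, aggregate fact measures.
rows = conn.execute("""
    SELECT d.month, p.category, SUM(f.amount) AS revenue
    FROM fact_sales AS f
    JOIN dim_date AS d ON d.date_key = f.date_key
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
""").fetchall()
print(rows)  # [(1, 'Electronics', 2000.0), (1, 'Furniture', 300.0), (2, 'Electronics', 1000.0)]
```

Every query joins the fact table directly to each dimension it needs, one join per dimension, which is what keeps star-schema queries simple.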

28. What are surrogate keys and why are they used?

A surrogate key is a unique identifier for an entity, typically an auto-incremented number, that has no business meaning. Surrogate keys are used because they provide a consistent way to identify records, avoid issues with changing natural keys, and improve performance in joins and indexing. They also simplify relationships between tables in a database schema.
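The following minimal sketch assumes SQLite via Python's `sqlite3` module and a hypothetical `customer` table. Here `customer_id` is a system-generated surrogate key. The natural key (`email`) is still protected by a UNIQUE constraint, and it can change without touching the surrogate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# customer_id: surrogate key, an INTEGER PRIMARY KEY that SQLite assigns automatically.
# email: natural (business) key, kept unique but free to change.
conn.execute("""
    CREATE TABLE customer (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL UNIQUE,
        full_name   TEXT NOT NULL
    )
""")
cur = conn.execute(
    "INSERT INTO customer (email, full_name) VALUES (?, ?)",
    ("ana@example.com", "Ana Silva"),
)
ana_id = cur.lastrowid  # the generated surrogate value

# The natural key changes, but the surrogate key (and anything referencing it) does not.
conn.execute(
    "UPDATE customer SET email = ? WHERE customer_id = ?",
    ("ana.silva@example.com", ana_id),
)
rows = conn.execute("SELECT customer_id, email FROM customer").fetchall()
print(rows)  # [(1, 'ana.silva@example.com')]
```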

29. Explain the concept of referential integrity.

Referential integrity is a database constraint that ensures relationships between tables remain consistent. It requires that foreign keys in one table match primary keys in another, preventing orphaned records and maintaining data accuracy. For example, if a record in a child table references a parent table’s primary key, that parent record must exist. This ensures the validity of links between tables.
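The example below sketches referential integrity with Python's built-in `sqlite3` module. The `department` and `employee` tables are hypothetical. SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON` has been issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled on the connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE department (
    dept_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);
CREATE TABLE employee (
    emp_id  INTEGER PRIMARY KEY,
    dept_id INTEGER NOT NULL REFERENCES department(dept_id)
);
INSERT INTO department VALUES (1, 'Sales');
INSERT INTO employee VALUES (100, 1);
""")


def try_exec(sql: str) -> str:
    """Run one statement and report whether the database accepted it."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


orphan_insert = try_exec("INSERT INTO employee VALUES (101, 99)")     # no department 99
parent_delete = try_exec("DELETE FROM department WHERE dept_id = 1")  # employee 100 still refers to it
print(orphan_insert)
print(parent_delete)
```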

30. What is the difference between a primary key and a unique key?

- A primary key uniquely identifies each record in a table and cannot contain NULL values.

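- A unique key also enforces uniqueness for a column or set of columns but can contain NULL values. How many NULLs are allowed varies by DBMS (SQL Server, for example, permits only one). A table can have several unique keys but only one primary key.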

Both keys are essential for maintaining data integrity, but the primary key is the main identifier for records in a table.
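Here is a small sketch of the difference, assuming SQLite via Python's `sqlite3` module and a hypothetical `account` table. NULL handling in UNIQUE columns is engine-specific, and as the example shows, SQLite accepts several NULLs. For historical reasons, SQLite also allows NULLs in a non-INTEGER primary key unless NOT NULL is declared, so the sketch declares it explicitly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE account (
        account_code TEXT NOT NULL PRIMARY KEY,  -- NOT NULL spelled out (SQLite quirk)
        tax_id       TEXT UNIQUE                 -- unique, but optional
    )
""")


def attempt(code, tax_id):
    """Try one insert and report whether the key constraints accepted it."""
    try:
        conn.execute("INSERT INTO account VALUES (?, ?)", (code, tax_id))
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


results = [
    attempt("A1", None),   # accepted: a UNIQUE column may hold NULL
    attempt("A2", None),   # accepted in SQLite: NULLs do not count as duplicates
    attempt("A3", "T-1"),  # accepted
    attempt("A4", "T-1"),  # rejected: duplicate tax_id
    attempt(None, "T-2"),  # rejected: the primary key cannot be NULL
    attempt("A1", "T-3"),  # rejected: duplicate primary key
]
for r in results:
    print(r)
```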

31. How do you handle many-to-many relationships in a relational database?

Many-to-many relationships are handled using a junction table (or associative entity) that contains foreign keys referencing the primary keys of the two related tables. For example, in a library database, a books table and authors table can have a junction table that links them, allowing for multiple authors per book and multiple books per author. This approach ensures proper normalization and data integrity.
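The library example can be sketched as follows, using Python's built-in `sqlite3` module. The `book`, `author`, and `book_author` names and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE book   (book_id   INTEGER PRIMARY KEY, title TEXT NOT NULL);
CREATE TABLE author (author_id INTEGER PRIMARY KEY, name  TEXT NOT NULL);
-- Junction table: one row per (book, author) pair; the composite key blocks duplicate links.
CREATE TABLE book_author (
    book_id   INTEGER NOT NULL REFERENCES book(book_id),
    author_id INTEGER NOT NULL REFERENCES author(author_id),
    PRIMARY KEY (book_id, author_id)
);
INSERT INTO book   VALUES (1, 'Database Design'), (2, 'SQL Patterns');
INSERT INTO author VALUES (1, 'Kim'), (2, 'Osei');
-- Book 1 has two authors, and author 1 wrote two books.
INSERT INTO book_author VALUES (1, 1), (1, 2), (2, 1);
""")

authors_per_book = conn.execute("""
    SELECT b.title, COUNT(*) AS n_authors
    FROM book AS b
    JOIN book_author AS ba ON ba.book_id = b.book_id
    GROUP BY b.book_id, b.title
    ORDER BY b.book_id
""").fetchall()
print(authors_per_book)  # [('Database Design', 2), ('SQL Patterns', 1)]
```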

32. What is a data model, and what types are commonly used?

A data model is a conceptual representation of data structures and relationships within a system. Common types of data models include:

- Conceptual Data Model: High-level overview of the data and its relationships, without details about the actual implementation.

- Logical Data Model: Details the structure of the data elements, their relationships, and constraints, independent of a specific database.

- Physical Data Model: Specifies how the data will be stored in the database, detailing file structures and indexing.

Each type serves different purposes in the database design process.

33. What is an ER diagram, and what is its purpose?

An Entity-Relationship (ER) diagram is a visual representation of entities and their relationships within a database system. It is used in the database design phase to illustrate how data is structured and how entities interact with one another. ER diagrams help stakeholders understand data requirements and guide developers in implementing the database schema accurately.

34. How do you ensure data quality in your data models?

- Validation Rules: Implement rules to check data accuracy and completeness during entry.

- Regular Audits: Perform periodic reviews of data to identify and correct inconsistencies.

- Data Profiling: Analyze data to understand its structure, relationships, and quality metrics.

- Use of Constraints: Leverage database constraints like unique keys and foreign keys to enforce data integrity.

By employing these strategies, you can maintain high data quality and reliability in your data models.
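Data profiling can start very simply. The sketch below is plain Python, and the `profile` helper and the sample rows are hypothetical. It reports per-column null counts, distinct counts, and the most common value. For this sample, that is enough to surface a duplicated key, inconsistent casing, and a missing value.

```python
from collections import Counter


def profile(rows, columns):
    """Per-column null count, distinct count, and most common value for a list of dicts."""
    report = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        non_null = [v for v in values if v is not None]
        report[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "top": Counter(non_null).most_common(1)[0][0] if non_null else None,
        }
    return report


customers = [
    {"id": 1, "country": "DE", "email": "a@example.com"},
    {"id": 2, "country": "de", "email": None},              # lowercase code, missing email
    {"id": 3, "country": "FR", "email": "c@example.com"},
    {"id": 3, "country": "DE", "email": "c@example.com"},   # duplicate id
]
report = profile(customers, ["id", "country", "email"])
for col, stats in report.items():
    print(col, stats)
```

Three distinct ids across four rows points to a key problem, and "DE" alongside "de" suggests that a CHECK constraint or a lookup table is missing.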

35. What are some performance considerations when designing data models?

- Indexing: Use appropriate indexes to speed up query performance but be cautious of over-indexing, which can slow down write operations.

- Normalization vs. Denormalization: Balance normalization for data integrity with denormalization for read performance based on usage patterns.

- Partitioning: Consider partitioning large tables to improve query performance and manageability.

- Choosing Data Types: Use the most efficient data types for your columns to save space and improve performance.

Addressing these considerations during the design phase can lead to more efficient and responsive databases.
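One way to check whether an index actually helps is to compare query plans before and after creating it. This sketch assumes SQLite via Python's `sqlite3` module and a hypothetical `orders` table. The exact wording of `EXPLAIN QUERY PLAN` output varies between SQLite versions, and other databases use their own `EXPLAIN` syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)


def plan(sql: str) -> str:
    """Join the human-readable detail column of each EXPLAIN QUERY PLAN row."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


query = "SELECT order_id, total FROM orders WHERE customer_id = 42"
before = plan(query)
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)
print(before)  # a full table SCAN
print(after)   # a SEARCH using idx_orders_customer
```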

36. How do you approach schema evolution in a database?

Schema evolution involves modifying a database schema to accommodate changing business requirements. To approach schema evolution effectively, consider the following:

- Version Control: Keep track of schema changes using version control systems.

- Backward Compatibility: Design changes to be backward compatible to avoid breaking existing functionality.

- Migration Scripts: Write migration scripts to implement changes without data loss.

- Testing: Thoroughly test the schema changes in a staging environment before deployment.

By following these best practices, you can minimize disruption while evolving your database schema.
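A minimal migration runner might look like the sketch below. It assumes SQLite via Python's `sqlite3` module and uses SQLite's `PRAGMA user_version` to record the schema version. The `MIGRATIONS` list and the `migrate` function are hypothetical. Each migration runs in its own transaction. The second migration is additive (a new column with a default), so existing INSERT statements keep working.

```python
import sqlite3

# Hypothetical ordered migrations: entry i moves the schema from version i to i + 1.
MIGRATIONS = [
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
    # Additive, backward-compatible change: the default lets old INSERTs keep working.
    "ALTER TABLE customer ADD COLUMN segment TEXT NOT NULL DEFAULT 'retail'",
]


def migrate(conn: sqlite3.Connection) -> int:
    """Apply pending migrations, recording progress in PRAGMA user_version."""
    conn.isolation_level = None  # autocommit mode: transactions are managed explicitly below
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version in range(current + 1, len(MIGRATIONS) + 1):
        conn.execute("BEGIN")
        try:
            conn.execute(MIGRATIONS[version - 1])
            # PRAGMA values cannot be bound as parameters; version is always an int here.
            conn.execute(f"PRAGMA user_version = {version}")
            conn.execute("COMMIT")
        except Exception:
            conn.execute("ROLLBACK")  # leave both schema and version unchanged
            raise
    return conn.execute("PRAGMA user_version").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(migrate(conn))  # 2
# An INSERT written before the segment column existed still succeeds.
conn.execute("INSERT INTO customer (name) VALUES ('Ana')")
print(conn.execute("SELECT name, segment FROM customer").fetchall())  # [('Ana', 'retail')]
print(migrate(conn))  # 2 (nothing left to apply)
```

Keeping the migration list in version control alongside application code gives the change history described above.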

Here are some intermediate-level interview questions focused on Data Modelling. These questions explore practical applications, best practices, and performance considerations relevant to data modeling.

39. What is normalization in database design, and why is it important?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. This involves dividing large tables into smaller, related tables and defining relationships between them. The primary goals of normalization are to eliminate duplicate data, ensure data dependencies make sense, and simplify data management. Normalizing a database makes updates more efficient and prevents insertion, update, and deletion anomalies, although read queries may require additional joins.

40. Can you explain the different normal forms in database normalization?

- First Normal Form (1NF): Ensures that each column contains atomic values, and each record is unique.

- Second Normal Form (2NF): Achieves 1NF and removes partial dependencies, ensuring that all non-key attributes are fully dependent on the primary key.

- Third Normal Form (3NF): Achieves 2NF and removes transitive dependencies, meaning non-key attributes do not depend on other non-key attributes.

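- Boyce-Codd Normal Form (BCNF): A stronger version of 3NF in which every determinant must be a candidate key. It removes anomalies that 3NF can still allow, typically in tables with overlapping composite candidate keys.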

Each normal form serves to progressively reduce redundancy and improve the structure of the database.

41. What is denormalization, and when might you use it?

Denormalization is the process of intentionally introducing redundancy into a database by combining tables or adding redundant data. This is often done to improve read performance by reducing the number of joins required during queries. Denormalization may be used in scenarios where read operations greatly outnumber write operations, such as in data warehousing or reporting applications. However, it can lead to increased complexity during updates and may require more careful management of data integrity.

42. How do you handle many-to-many relationships in data modeling?

Many-to-many relationships are handled by creating a junction table (also known as a bridge table or associative entity) that includes foreign keys referencing the primary keys of the two related tables. This junction table can also contain additional attributes related to the relationship. For example, if we have a `Students` table and a `Courses` table, the junction table `Enrollments` would link them using `student_id` and `course_id` as foreign keys.

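```sql
CREATE TABLE Enrollments (
    student_id INT,
    course_id  INT,
    -- Composite primary key: a student can enroll in a given course only once.
    PRIMARY KEY (student_id, course_id),
    -- Each half of the key must point at an existing student and course.
    FOREIGN KEY (student_id) REFERENCES Students(id),
    FOREIGN KEY (course_id) REFERENCES Courses(id)
);
```

This structure allows for efficient querying and management of many-to-many relationships.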

43. What are some best practices for designing a data model?

- Understand requirements: Gather detailed business requirements to ensure the model meets user needs.

- Use consistent naming conventions: Establish clear and uniform naming conventions for tables and columns to enhance readability.

- Plan for growth: Design with scalability in mind to accommodate future data volume increases.

- Document your model: Maintain clear documentation of the data model, including relationships and constraints, for future reference.

Following these best practices can lead to a more efficient, maintainable, and scalable data model.

44. How do you ensure data integrity in your data models?

- Use primary and foreign keys: Define primary keys to uniquely identify records and foreign keys to enforce relationships.

- Implement constraints: Use constraints such as NOT NULL, UNIQUE, and CHECK to enforce business rules at the database level.

- Regular audits: Conduct regular data audits to identify and rectify any inconsistencies or anomalies.

By implementing these strategies, data integrity can be effectively maintained across the database.
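These constraints can be sketched on a hypothetical `product` table, using SQLite through Python's `sqlite3` module. Each rejected insert below violates exactly one rule.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE product (
        product_id INTEGER PRIMARY KEY,
        sku        TEXT NOT NULL UNIQUE,              -- business identifier, no duplicates
        name       TEXT NOT NULL,                     -- mandatory attribute
        price      REAL NOT NULL CHECK (price >= 0),  -- rule: no negative prices
        status     TEXT NOT NULL DEFAULT 'active'
                   CHECK (status IN ('active', 'discontinued'))
    )
""")


def insert(sku, name, price, status="active"):
    """Try one insert and report whether the constraints accepted it."""
    try:
        conn.execute(
            "INSERT INTO product (sku, name, price, status) VALUES (?, ?, ?, ?)",
            (sku, name, price, status),
        )
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


results = [
    insert("SKU-1", "Chair", 49.0),
    insert("SKU-1", "Table", 120.0),            # UNIQUE: duplicate sku
    insert("SKU-2", None, 10.0),                # NOT NULL: missing name
    insert("SKU-3", "Lamp", -5.0),              # CHECK: negative price
    insert("SKU-4", "Desk", 200.0, "on sale"),  # CHECK: status outside the allowed set
]
for r in results:
    print(r)
```

Rules enforced this way hold for every application that writes to the table, not only for the one that implemented the validation.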

45. What is a star schema, and how is it different from a snowflake schema?

A star schema is a dimensional modeling pattern used in data warehousing that consists of a central fact table connected to multiple dimension tables. This structure allows for efficient querying and is optimized for read access. In contrast, a snowflake schema normalizes dimension tables into additional tables, creating a more complex structure with multiple levels of hierarchy. While snowflake schemas can reduce data redundancy, star schemas typically offer better performance for read-heavy operations due to their simpler design.

Data Modelling Interview Questions for Experienced

Data Modelling interview questions for experienced professionals delve into advanced topics such as database architecture, optimization techniques, scalability challenges, design patterns, and practical leadership skills. Candidates are expected to demonstrate a deep understanding of data relationships and the ability to mentor others in best practices.

47. What is normalization in database design, and why is it important?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing large tables into smaller, related tables and defining relationships among them. The importance of normalization lies in:

- Eliminating duplicate data to save storage space.

- Enhancing data integrity by ensuring that data dependencies are properly enforced.

- Simplifying maintenance: because each fact is stored in one place, the database is easier to update consistently (although queries may need more joins).

Ultimately, normalization helps create a more robust and efficient database structure.

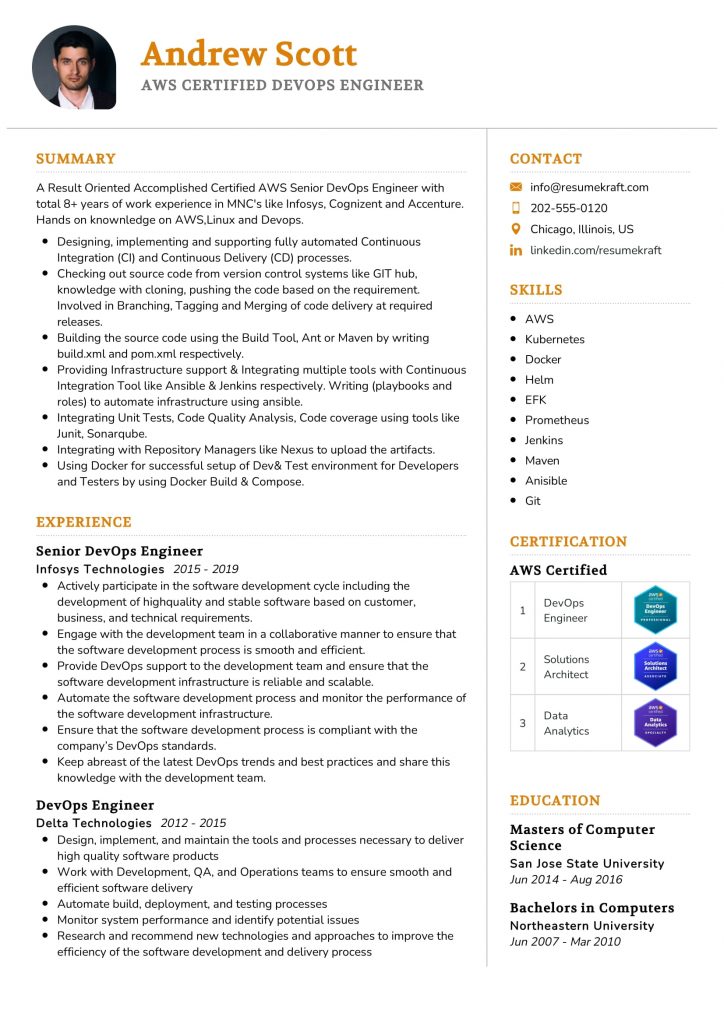

Build your resume in 5 minutes

Our resume builder is easy to use and will help you create a resume that is ATS-friendly and will stand out from the crowd.

48. Can you explain the different normal forms in database normalization?

There are several normal forms, each with specific criteria:

- First Normal Form (1NF): Ensures all columns contain atomic values and each value in a column is of the same data type.

- Second Normal Form (2NF): Achieved when a table is in 1NF and all non-key attributes are fully functionally dependent on the primary key (no attribute depends on only part of a composite key).

- Third Normal Form (3NF): A table is in 3NF if it is in 2NF and no non-key attribute depends on another non-key attribute, that is, there are no transitive dependencies on the primary key.

Each subsequent normal form builds on the previous one, leading to a more refined and efficient database structure.

49. What are some common data modeling patterns, and when would you use them?

Common data modeling patterns include:

- Entity-Relationship (ER) Model: Used for representing data entities and their relationships. Ideal for conceptual design.

- Star Schema: Common in data warehousing, it organizes data into fact and dimension tables for efficient querying.

- Snowflake Schema: A more normalized version of the star schema, which reduces redundancy but may complicate queries.

Choosing the right pattern depends on the specific requirements of the application, such as the need for performance, scalability, and ease of maintenance.

50. How do you approach optimizing a database for performance?

Optimizing a database for performance involves several strategies:

- Indexing: Creating indexes on frequently queried columns to speed up data retrieval.

- Query Optimization: Analyzing and rewriting queries for efficiency, such as avoiding SELECT * and using proper JOINs.

- Partitioning: Dividing large tables into smaller, more manageable pieces to improve performance and maintenance.

Regular monitoring and performance tuning can help ensure that the database remains efficient as data volume grows.

51. What strategies would you recommend for ensuring data integrity in a large-scale application?

Ensuring data integrity in a large-scale application requires a multi-faceted approach:

- Use of Transactions: Implementing ACID (Atomicity, Consistency, Isolation, Durability) properties to ensure reliable transactions.

- Data Validation: Enforcing constraints at the application and database levels to ensure only valid data is entered.

- Regular Backups: Performing regular data backups and having a disaster recovery plan in place.

These strategies help maintain the accuracy and consistency of data over time, especially in dynamic environments.
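Atomicity is easiest to see with a failed transfer. This sketch uses SQLite via Python's `sqlite3` module, and the `account` table and `transfer` function are hypothetical. The credit is applied first. The debit then violates a CHECK constraint, and the rollback undoes the credit as well.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE account (
        account_id INTEGER PRIMARY KEY,
        balance    REAL NOT NULL CHECK (balance >= 0)
    )
""")
conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100.0), (2, 50.0)])
conn.commit()


def transfer(src: int, dst: int, amount: float) -> bool:
    """Credit dst and debit src as one unit of work: all or nothing."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            # Credit first, so a failing debit shows that the rollback undoes it too.
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE account_id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE account_id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


ok = transfer(1, 2, 30.0)       # True
failed = transfer(1, 2, 500.0)  # False: the debit breaks the CHECK constraint
balances = conn.execute("SELECT account_id, balance FROM account ORDER BY account_id").fetchall()
print(ok, failed, balances)  # True False [(1, 70.0), (2, 80.0)]
```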

52. Explain the concept of denormalization and when it should be applied.

Denormalization is the process of intentionally introducing redundancy into a database by merging tables to improve read performance. It is often applied in scenarios where:

- Read Performance is Critical: In data warehousing or reporting systems, where query performance is prioritized.

- Complex Joins are Frequent: Reducing the number of joins can simplify queries and enhance performance.

However, denormalization can lead to data anomalies, so it should be used judiciously and balanced with the need for data integrity.

53. How would you design a scalable data model for a microservices architecture?

In a microservices architecture, each service typically manages its own database. A scalable data model can be designed by:

- Service Decomposition: Breaking down services into smaller, independently deployable components that manage specific data domains.

- API-First Approach: Using APIs to enable communication between services, allowing them to work with their respective databases without tight coupling.

- Event Sourcing: Implementing event sourcing to capture changes as a sequence of events, which can help with data consistency and recovery.

This design promotes scalability and flexibility, essential for handling varying loads in a microservices environment.
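Event sourcing stores changes rather than current state and derives state by replaying those changes. The plain-Python sketch below uses hypothetical event types (`opened`, `deposited`, `withdrawn`). A production system would also persist the log durably and usually add snapshots, both of which are omitted here.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Event:
    account_id: str
    kind: str            # hypothetical types: "opened", "deposited", "withdrawn"
    amount: float = 0.0


def replay(events: Iterable[Event]) -> Dict[str, float]:
    """Rebuild current balances by folding over the append-only event log."""
    balances: Dict[str, float] = {}
    for e in events:
        if e.kind == "opened":
            balances[e.account_id] = 0.0
        elif e.kind == "deposited":
            balances[e.account_id] += e.amount
        elif e.kind == "withdrawn":
            balances[e.account_id] -= e.amount
        else:
            raise ValueError(f"unknown event kind: {e.kind}")
    return balances


log: List[Event] = [
    Event("A-1", "opened"),
    Event("A-1", "deposited", 100.0),
    Event("A-1", "withdrawn", 40.0),
]
print(replay(log))      # {'A-1': 60.0}
print(replay(log[:2]))  # {'A-1': 100.0}: state as of an earlier point in the log
```

Replaying a prefix of the log reconstructs past states, which is where the recovery benefit mentioned above comes from.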

54. What is the role of a data steward in data governance?

A data steward plays a critical role in data governance by overseeing data management practices. Their responsibilities typically include:

- Data Quality Assurance: Monitoring data quality and implementing standards for data entry and maintenance.

- Policy Enforcement: Ensuring compliance with data governance policies and legal regulations concerning data use.

- Collaboration: Working with data users and IT to promote best practices in data management.

By serving as a bridge between technical and business teams, data stewards help maintain the integrity and usability of data across the organization.

55. Describe how you would mentor a junior data modeler.

Mentoring a junior data modeler involves several key steps:

- Knowledge Sharing: Providing insights into best practices, design patterns, and common pitfalls in data modeling.

- Hands-On Guidance: Engaging in pair modeling sessions to collaboratively design data models and review their work.

- Encouragement and Feedback: Offering constructive feedback and encouraging them to ask questions and explore new concepts.

This mentoring approach fosters growth, enhances their skills, and equips them for future challenges in data modeling.

56. How do you handle schema changes in a production environment?

Handling schema changes in a production environment requires careful planning and execution:

- Backward Compatibility: Ensuring that changes do not break existing functionality by maintaining backward compatibility.

- Versioning: Implementing version control for the database schema to track changes and roll back if necessary.

- Staged Deployment: Using a phased approach to deploy changes, such as rolling out to a small subset of users before full deployment.

These practices help minimize disruption and maintain system stability during schema updates.

57. What advanced indexing techniques do you recommend for high-volume databases?

For high-volume databases, advanced indexing techniques include:

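- Bitmap Indexes: Useful for low-cardinality columns in read-mostly systems such as data warehouses, where they can significantly speed up queries that combine several such filters. They are costly to maintain under frequent concurrent updates.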

- Partial Indexes: Indexing only a subset of data based on specific criteria, which can reduce index size and improve performance.

- Covering Indexes: Creating indexes that include all columns a query needs, so the database can answer the query from the index alone without reading the base table.

These techniques can greatly enhance query performance, especially in large datasets where efficiency is crucial.
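Partial and covering indexes can be tried out in SQLite, as sketched here through Python's `sqlite3` module with a hypothetical `orders` table. Bitmap indexes are engine-specific (Oracle provides `CREATE BITMAP INDEX`, for example). SQLite has none, so they are not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        region      TEXT NOT NULL,
        status      TEXT NOT NULL,
        total       REAL NOT NULL
    )
""")
# Partial index: only 'open' orders are indexed, so it stays small when most orders are closed.
conn.execute("CREATE INDEX idx_open_orders ON orders (customer_id) WHERE status = 'open'")
# Covering index: contains every column the regional revenue query reads.
conn.execute("CREATE INDEX idx_region_total ON orders (region, total)")


def plan(sql: str) -> str:
    """Return SQLite's query-plan detail text for a statement."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


open_plan = plan("SELECT order_id FROM orders WHERE customer_id = 7 AND status = 'open'")
any_plan = plan("SELECT order_id FROM orders WHERE customer_id = 7")  # partial index not usable
revenue_plan = plan("SELECT SUM(total) FROM orders WHERE region = 'EU'")
print(open_plan)     # uses idx_open_orders
print(any_plan)      # full SCAN: the query does not guarantee status = 'open'
print(revenue_plan)  # uses COVERING INDEX idx_region_total
```

A partial index is usable only when the query's WHERE clause implies the index's condition, which is why the second query falls back to a scan.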

58. What are some common challenges you face in data modeling for cloud-based databases?

Common challenges in data modeling for cloud-based databases include:

- Data Migration: Moving existing data to the cloud can be complex, requiring careful planning and execution to avoid downtime.

- Performance Variability: Cloud environments may exhibit variable performance due to shared resources, affecting data access speeds.

- Security and Compliance: Ensuring data security and compliance with regulations can be more challenging in a cloud environment.

Addressing these challenges requires a proactive approach to design and implementation, ensuring optimal performance and compliance.

59. How do you ensure that your data models can evolve over time?

To ensure that data models can evolve over time, consider the following strategies:

- Modular Design: Building data models in a modular fashion makes it easier to update or replace components without affecting the entire structure.

- Documentation: Keeping comprehensive documentation of the data model, including relationships and constraints, facilitates easier modifications.

- Regular Reviews: Conducting periodic reviews of the data model to identify areas that require updates or optimizations.

These practices promote flexibility and adaptability, allowing the data model to meet changing business requirements.

The following questions are aimed at experienced Data Modelling professionals and focus on architecture, optimization, scalability, design patterns, and leadership.

62. What are some best practices for designing a scalable data model?

To design a scalable data model, consider the following best practices:

- Normalization vs. Denormalization: Normalize to reduce data redundancy but denormalize for read-heavy applications to improve performance.

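- Data Partitioning: Use horizontal partitioning (splitting rows, for example by date range or by a hash of a key) or vertical partitioning (splitting columns into separate tables) to keep individual tables and indexes manageable. When horizontal partitions are placed on different nodes, which is usually called sharding, the load is also spread across machines.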

- Indexing Strategies: Implement appropriate indexing strategies to accelerate query performance while being cautious of index maintenance overhead.

- Use of Data Warehousing: Leverage data warehouses for analytics to separate transactional workloads from analytical processing.

These practices help ensure that the data model can grow and adapt to increased load while maintaining performance and consistency.
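Horizontal partitioning by hash can be sketched in a few lines of Python. This is an illustrative assumption rather than how any particular database routes rows: `shard_for` and `partition_rows` are hypothetical helpers, and the shard count and sample `orders` rows are made up. A stable hash (SHA-256 here) is used so that the same key always lands on the same shard.

```python
import hashlib
from collections import defaultdict


def shard_for(key: str, num_shards: int) -> int:
    """Map a partition key to a shard number in [0, num_shards)."""
    # Use a stable hash: Python's built-in hash() is randomized per process for
    # str, so it would route the same key differently after a restart.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition_rows(rows: list[dict], key_column: str, num_shards: int) -> dict[int, list[dict]]:
    """Horizontally partition rows: each whole row goes to exactly one shard."""
    shards: dict[int, list[dict]] = defaultdict(list)
    for row in rows:
        shards[shard_for(str(row[key_column]), num_shards)].append(row)
    return dict(shards)


# Hypothetical sample data: 20 orders spread over 7 customers.
orders = [{"order_id": i, "customer_id": f"C{i % 7}"} for i in range(20)]
shards = partition_rows(orders, "customer_id", num_shards=4)
for shard_id in sorted(shards):
    print(shard_id, [row["order_id"] for row in shards[shard_id]])
```

Because the shard is computed as `hash % num_shards`, changing the number of shards remaps most keys; systems that reshard often use consistent hashing or a lookup directory instead. A partition key with many distinct, evenly used values also matters, since a skewed key concentrates load on one shard.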

63. How do you handle schema evolution in a production environment?

Handling schema evolution in production requires careful planning and execution:

- Versioning: Maintain version control for your database schema to track changes over time.

- Backward Compatibility: Ensure new schema changes are backward compatible to avoid breaking existing functionality.

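- Feature Toggles: Use feature toggles in the application so that code depending on a new schema element can be switched on gradually, allowing testing in production with minimal risk and a quick fallback to the old code path.

- Automated Migrations: Implement automated, version-ordered migration scripts so that every environment applies the same schema changes in the same order during deployment.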

By following these strategies, you can effectively manage schema changes without disrupting service or data integrity.
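The versioning and automated-migration points can be combined in a minimal migration runner, sketched here with Python's `sqlite3` module. The `MIGRATIONS` list and the `products` table are hypothetical, and storing the schema version in SQLite's `PRAGMA user_version` is an SQLite-specific shortcut; dedicated tools such as Flyway, Liquibase, or Alembic keep a migration history table instead. Each step runs in its own transaction, so a failed statement leaves the schema at the last good version.

```python
import sqlite3

# Hypothetical ordered migrations: entry i moves the schema from version i to i + 1.
MIGRATIONS = [
    "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
    "ALTER TABLE products ADD COLUMN price_cents INTEGER",
    "CREATE INDEX idx_products_name ON products(name)",
]


def schema_version(conn: sqlite3.Connection) -> int:
    # SQLite stores an application-defined integer in the database header.
    return conn.execute("PRAGMA user_version").fetchone()[0]


def migrate(conn: sqlite3.Connection) -> int:
    """Apply every pending migration in order; safe to run repeatedly."""
    start = schema_version(conn)
    for version, statement in enumerate(MIGRATIONS[start:], start=start + 1):
        conn.execute("BEGIN")
        try:
            conn.execute(statement)
            # PRAGMA values cannot be bound as parameters; version is an int we control.
            conn.execute(f"PRAGMA user_version = {version}")
            conn.execute("COMMIT")
        except sqlite3.Error:
            conn.execute("ROLLBACK")
            raise
    return schema_version(conn)


# isolation_level=None puts the connection in autocommit mode, so the
# BEGIN/COMMIT statements above control the transactions explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
print(migrate(conn))
print(migrate(conn))  # second run finds nothing pending
```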

64. What design patterns are commonly used in data modeling, and why?

Common design patterns in data modeling include:

- Entity-Relationship Model: A foundational pattern that helps visualize the data entities and their relationships, making it easier to design databases.

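- Star Schema: Often used in data warehousing, it places a central fact table of measurements (such as sales amounts) among denormalized dimension tables (such as date, product, and customer). The small number of joins keeps analytical queries simple and fast.

- Snowflake Schema: An extension of the star schema that normalizes dimension tables into sub-dimensions, reducing data redundancy at the cost of additional joins.

- Data Vault: A flexible and scalable approach that models business keys as hubs, relationships as links, and descriptive attributes as satellites with load timestamps. It keeps raw, auditable data separate from business rules applied downstream, and because new sources are added as new hubs, links, and satellites rather than by restructuring existing tables, it eases integration and preserves full history.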

These patterns help in creating efficient, maintainable, and scalable data models tailored to specific business needs.
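A star schema in miniature, using an in-memory SQLite database from Python: one fact table (`fact_sales`) keyed to two dimension tables (`dim_date`, `dim_product`). All table names, columns, and rows are hypothetical sample data. The aggregate query shows the typical star-schema shape, in which each dimension is a single join away from the facts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
-- Fact table: one row per sale, foreign keys to each dimension plus numeric measures.
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity INTEGER,
    revenue REAL
);
""")
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                 [(20240115, 2024, 1), (20240210, 2024, 2)])
conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)",
                 [(1, "Laptop", "Electronics"), (2, "Desk", "Furniture"),
                  (3, "Monitor", "Electronics")])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", [
    (20240115, 1, 2, 2000.0),
    (20240115, 2, 1, 300.0),
    (20240210, 3, 3, 600.0),
    (20240210, 1, 1, 1000.0),
])

# Typical analytical query: join facts to dimensions, then group by dimension attributes.
query = """
SELECT d.month, p.category, SUM(f.revenue) AS revenue
FROM fact_sales AS f
JOIN dim_date AS d ON f.date_key = d.date_key
JOIN dim_product AS p ON f.product_key = p.product_key
GROUP BY d.month, p.category
ORDER BY d.month, p.category
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

In a snowflake variant, `category` would move to its own table referenced from `dim_product`, adding one more join to the same query.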

65. Can you explain the CAP theorem and its implications for distributed data models?

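The CAP theorem states that a distributed data store cannot simultaneously guarantee all three of the following properties:

- Consistency: Every read receives the most recent write or an error.

- Availability: Every request received by a non-failing node receives a (non-error) response, though not necessarily one containing the most recent write.

- Partition Tolerance: The system continues to operate even when the network drops or delays messages between nodes.

Because network partitions cannot be ruled out in practice, the real choice arises during a partition: the system must either reject some requests to stay consistent or keep answering and risk returning stale data. Distributed data models therefore have to make this trade-off between consistency and availability explicitly. For example, Cassandra is usually described as favoring availability and partition tolerance over strict consistency, although its consistency level is tunable per request (for example, QUORUM reads and writes); eventual consistency is acceptable in many such applications.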
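Cassandra's tunable consistency relies on quorum overlap: with N replicas, a write acknowledged by W of them and a read that consults R of them are guaranteed to share at least one replica whenever R + W > N. The sketch below checks that rule exhaustively for small N. It is a hypothetical illustration of the set arithmetic only; it ignores node failures, timestamps, read repair, and everything else a real database does.

```python
from itertools import combinations


def every_read_sees_latest_write(n: int, w: int, r: int) -> bool:
    """Exhaustively check whether every w-replica write set overlaps every
    r-replica read set among n replicas, i.e. a read always reaches at least
    one replica that holds the latest acknowledged write."""
    replicas = range(n)
    return all(
        set(write_set) & set(read_set)  # a non-empty intersection is truthy
        for write_set in combinations(replicas, w)
        for read_set in combinations(replicas, r)
    )


# Replication factor 3: compare the exhaustive check with the rule R + W > N.
for w, r in [(1, 1), (2, 1), (2, 2), (3, 1)]:
    print(f"N=3 W={w} R={r}:", every_read_sees_latest_write(3, w, r), r + w > 3)
```

With a replication factor of 3, QUORUM reads and writes (2 + 2 > 3) overlap, while ONE/ONE (1 + 1) does not; that is the consistency-versus-availability trade-off the theorem describes, chosen per request.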

How to Prepare for Your Data Modelling Interview

Preparing for a Data Modelling interview requires a strategic approach to understand both theoretical concepts and practical applications. Candidates should focus on mastering key principles, tools, and methodologies relevant to data modeling to showcase their skills effectively during the interview.

- Familiarize Yourself with Data Modeling Concepts: Review fundamental concepts such as entity-relationship diagrams, normalization, and data definitions. Understanding these principles will help you articulate your thought process and demonstrate your knowledge during technical discussions.

- Practice Using Data Modeling Tools: Gain hands-on experience with popular data modeling tools like ERwin, Lucidchart, or Microsoft Visio. Familiarity with these tools can help you represent data structures visually and will be useful when discussing your previous projects.

- Study Real-World Use Cases: Analyze case studies or examples of data modeling in different industries. Understanding how data modeling is applied in various contexts can provide insights into best practices and help you answer scenario-based questions effectively.

- Brush Up on SQL Skills: Data modelers use SQL both to define schemas (DDL statements such as CREATE TABLE) and to query and manipulate data. Practice writing complex queries against relational databases, as this knowledge is crucial during technical assessments.

- Review Data Warehousing Principles: Understand data warehousing concepts and architectures, including star and snowflake schemas. Being able to discuss how data modeling fits into a broader data architecture will demonstrate your comprehensive understanding of data management.

- Prepare for Behavioral Questions: Expect questions about past experiences and challenges faced in data modeling projects. Use the STAR method (Situation, Task, Action, Result) to structure your answers and highlight your problem-solving abilities and teamwork skills.

- Mock Interviews and Peer Reviews: Engage in mock interviews with peers or mentors to practice articulating your thoughts clearly. Feedback can help you refine your responses, improve your confidence, and prepare you for the actual interview environment.

Common Data Modelling Interview Mistakes to Avoid

When interviewing for a Data Modelling position, avoiding common mistakes can significantly enhance your chances of success. Understanding these pitfalls will help you present your skills effectively and demonstrate your expertise in data architecture and design.

- Neglecting Domain Knowledge: Failing to understand the specific industry or domain can hinder your ability to create effective models. Research the business context, data requirements, and key metrics relevant to the role to demonstrate your understanding.

- Inadequate Preparation: Not preparing adequately for the interview can lead to poor performance. Familiarize yourself with common data modelling tools, methodologies, and case studies relevant to the position to showcase your readiness.

- Overlooking Normalization Principles: Ignoring normalization can result in inefficient data structures. Be prepared to discuss normalization forms and trade-offs, and how they impact data integrity and retrieval performance.

- Failing to Communicate Clearly: Poor communication can lead to misunderstandings. Practice articulating your thought process clearly, especially when discussing complex data models or technical concepts during the interview.

- Not Asking Questions: Avoiding questions can make you appear uninterested. Prepare thoughtful questions about the team, data challenges, or company goals to demonstrate your engagement and critical thinking.

- Ignoring Data Quality Issues: Dismissing the importance of data quality can undermine your effectiveness as a data modeller. Be ready to discuss strategies for ensuring data accuracy, consistency, and relevance in your models.

- Underestimating Data Governance: Overlooking data governance principles can lead to compliance issues. Familiarize yourself with data policies, privacy regulations, and best practices to demonstrate your commitment to responsible data management.

- Not Showcasing Real-World Examples: Failing to provide concrete examples of past data modelling projects can weaken your candidacy. Prepare specific instances where you implemented successful data models, highlighting challenges and results.

Key Takeaways for Data Modelling Interview Success

- Prepare a targeted resume using AI resume builder tools to highlight relevant skills and experience in data modeling, ensuring it aligns with the job description.

- Utilize resume templates to create a clean, professional format that enhances readability, making it easier for interviewers to assess your qualifications at a glance.

- Showcase your experience effectively by referencing resume examples that demonstrate your proficiency in data modeling concepts and projects, which can set you apart from other candidates.

- Craft tailored cover letters that clearly articulate your interest in the position and how your skills in data modeling can benefit the organization, enhancing your application materials.

- Engage in mock interview practice to become comfortable discussing your data modeling expertise and to refine your responses to common interview questions, boosting your confidence.

Frequently Asked Questions

1. How long does a typical Data Modelling interview last?

A typical Data Modelling interview lasts between 30 minutes and 1 hour. The duration can vary depending on the company and the complexity of the role. Generally, expect a combination of technical questions, problem-solving tasks, and discussions about your previous experience. Some interviews may include a case study or practical exercise, which can extend the duration. It’s important to be prepared for a range of questions and to showcase your knowledge effectively within the allotted time.

2. What should I wear to a Data Modelling interview?

For a Data Modelling interview, you should aim for business casual attire. This typically means wearing dress pants and a collared shirt or blouse, possibly pairing it with a blazer. The goal is to appear professional yet comfortable. If the company culture is known to be more formal or creative, you might adjust your outfit accordingly. Always ensure your clothes are neat and tidy, as first impressions can significantly impact your interview experience.

3. How many rounds of interviews are typical for a Data Modelling position?

Typically, a Data Modelling position may involve two to four rounds of interviews. The first round is often a phone screening to assess your qualifications and fit for the role. Subsequent rounds may include technical interviews focused on your data modeling skills, problem-solving abilities, and cultural fit. Some companies may also include a final round with senior management or team members to gauge collaboration potential. It’s essential to prepare for each round thoroughly.

4. Should I send a thank-you note after my Data Modelling interview?

Yes, sending a thank-you note after your Data Modelling interview is highly recommended. It shows your appreciation for the opportunity and reinforces your interest in the position. A brief, polite email thanking the interviewers and mentioning something specific from the interview can help you stand out. This gesture demonstrates professionalism and can leave a positive impression, aiding in the decision-making process for your potential employer.