Preparing for an Ab Initio interview can be a unique and rewarding experience, as this role focuses on data processing and integration using a powerful ETL tool. With the increasing demand for data-driven decision-making in organizations, proficiency in Ab Initio is a valuable asset. Proper interview preparation is crucial to demonstrate your technical skills and understanding of data workflows. This comprehensive guide will cover essential topics such as core concepts of Ab Initio, practical applications, common interview questions, and strategies to effectively showcase your expertise. By familiarizing yourself with these areas, you will enhance your chances of success and stand out as a desirable candidate in the competitive job market.

What to Expect in an Ab Initio Interview

In an Ab Initio interview, candidates can expect a mix of technical and behavioral questions, focusing on data integration and ETL processes. Interviews may be conducted by a panel consisting of technical leads, data architects, and HR representatives. The structure often begins with an introduction, followed by technical assessments that may include practical exercises or case studies. Behavioral questions will assess problem-solving abilities and teamwork skills. Candidates should be prepared to discuss their past projects and demonstrate their understanding of Ab Initio tools and methodologies.

Abinitio Interview Questions For Freshers

Abinitio interview questions for freshers focus on fundamental concepts and basic syntax that candidates should master to excel in their roles. Understanding Abinitio’s architecture, core components, and data processing techniques will greatly enhance a candidate’s ability to succeed in an interview.

1. What is Abinitio?

Abinitio is a data processing platform used for extracting, transforming, and loading (ETL) data. It provides a graphical user interface for creating data flows and a range of components for data manipulation and integration. Abinitio is popular in industries that require high-performance data processing and has capabilities that support parallel execution of data tasks.

2. What are the key components of Abinitio?

- Graphical Development Environment (GDE): A tool for designing data processes visually.

- Component Library: A collection of pre-built components for various data processing tasks.

- Co>Operating System: The execution environment where data processing jobs run.

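- Metadata: Information about data structure and processing rules, such as record formats and transform functions written in Ab Initio's Data Manipulation Language (DML), often managed in the Enterprise Meta>Environment (EME) repository.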

These components work together to facilitate efficient data processing and integration.

3. What is a graph in Abinitio?

A graph in Abinitio is a visual representation of a data flow process. It consists of connected components that define how data is extracted, transformed, and loaded. Each component performs a specific function, and the connections between them dictate the flow of data. Graphs can be executed to process data according to the defined logic.
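To make the idea concrete, here is a minimal sketch in plain Python (not Ab Initio DML or the GDE) that models a graph as components connected by flows. The names `input_file`, `filter_positive`, `sort_by_amount`, and `run_graph` are hypothetical stand-ins for an Input File, a filter, a Sort, and the graph itself. Each component is a generator, so records stream from one component to the next, loosely like a pipelined graph.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Component = Callable[[Iterable[Record]], Iterator[Record]]

def input_file(lines: list[str]) -> Iterator[Record]:
    """Source component: parse 'id,amount' lines into records."""
    for line in lines:
        rec_id, amount = line.split(",")
        yield {"id": int(rec_id), "amount": float(amount)}

def filter_positive(records: Iterable[Record]) -> Iterator[Record]:
    """Transform component: keep only records with a positive amount."""
    for rec in records:
        if rec["amount"] > 0:
            yield rec

def sort_by_amount(records: Iterable[Record]) -> Iterator[Record]:
    """Sort component: must see every record before it can emit any."""
    yield from sorted(records, key=lambda r: r["amount"], reverse=True)

def run_graph(source: Iterable[Record], *components: Component) -> list[Record]:
    """Connect components in order; each 'flow' is the previous component's output."""
    flow: Iterable[Record] = source
    for component in components:
        flow = component(flow)
    return list(flow)  # sink: materialize the final flow

result = run_graph(input_file(["1,10.0", "2,-5.0", "3,42.5"]), filter_positive, sort_by_amount)
print(result)  # [{'id': 3, 'amount': 42.5}, {'id': 1, 'amount': 10.0}]
```

Note how the sort is the only step that has to buffer its whole input; the other components pass records along one at a time.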

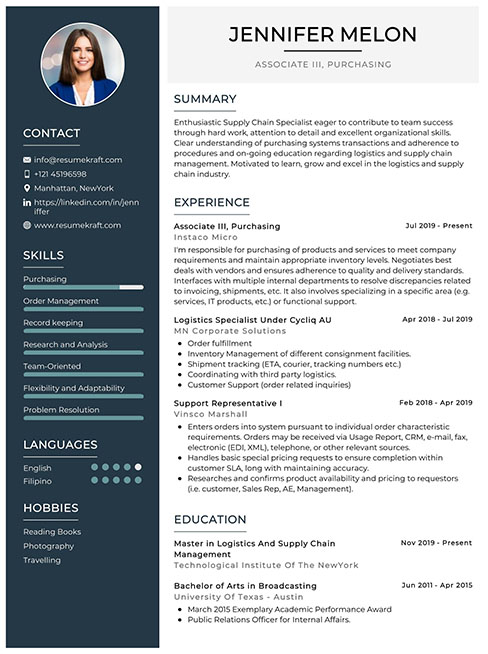

Build your resume in just 5 minutes with AI.

4. Explain the concept of a component in Abinitio.

Components in Abinitio are the building blocks of a graph. Each component performs a specific operation, such as reading data, transforming it, or writing it to a target destination. Examples of components include Input File, Output File, Join, and Sort components. They can be configured with parameters to customize their behavior.

5. What is an ETL process?

ETL stands for Extract, Transform, Load. It is a process used to gather data from multiple sources (Extract), modify and clean the data as needed (Transform), and finally load the processed data into a target system (Load). Abinitio is commonly used for performing ETL tasks because of its efficiency and scalability in handling large datasets.
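The three ETL stages can be sketched in a few lines of plain Python. This is an illustration of the concept only, not Ab Initio code: the in-memory CSV string stands in for a source file, the list `warehouse` stands in for a target table, and the field names and cleaning rules are hypothetical.

```python
import csv
import io

def extract(csv_text: str) -> list[dict]:
    """Extract: read raw rows from a CSV source (an in-memory string here)."""
    return list(csv.DictReader(io.StringIO(csv_text)))

def transform(rows: list[dict]) -> list[dict]:
    """Transform: clean names, convert types, and drop rows with no amount."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # simple data-quality rule: skip incomplete rows
        cleaned.append({
            "customer": row["customer"].strip().title(),
            "amount": round(float(row["amount"]), 2),
        })
    return cleaned

def load(rows: list[dict], target: list[dict]) -> int:
    """Load: append to the target and report how many rows were written."""
    target.extend(rows)
    return len(rows)

source = "customer,amount\n alice ,10.5\nbob,\ncarol,7\n"
warehouse: list[dict] = []
loaded = load(transform(extract(source)), warehouse)
print(loaded, warehouse)  # 2 [{'customer': 'Alice', 'amount': 10.5}, {'customer': 'Carol', 'amount': 7.0}]
```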

6. How do you handle errors in Abinitio?

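- Reject and Error Ports: Many components provide reject and error ports that capture records which fail processing, together with a description of the failure. A reject threshold setting controls whether the job aborts on the first reject, never aborts, or aborts once a limit is reached.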

- Data Quality Checks: Implement checks to validate data before processing.

- Logging: Maintain logs of errors to analyze issues after execution.

Effective error handling is crucial for maintaining data integrity and ensuring smooth data processing operations.
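The reject-port idea can be illustrated with a small plain-Python sketch. It is loosely modeled on the out/reject/error ports and reject threshold described above, but it is not Ab Initio's implementation: `PortOutputs`, `validate`, `reject_limit`, and the validation rules are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class PortOutputs:
    out: list = field(default_factory=list)     # records that passed validation
    reject: list = field(default_factory=list)  # records that failed, kept unchanged
    error: list = field(default_factory=list)   # one message per rejected record

def validate(records, reject_limit: Optional[int] = None) -> PortOutputs:
    """Route bad records aside instead of failing the whole run.

    reject_limit=None never aborts; reject_limit=0 aborts on the first reject;
    any other value aborts once the number of rejects exceeds it.
    """
    ports = PortOutputs()
    for rec in records:
        try:
            amount = float(rec["amount"])  # KeyError if missing, ValueError if not numeric
            if amount < 0:
                raise ValueError("negative amount")
            ports.out.append({**rec, "amount": amount})
        except (KeyError, ValueError) as exc:
            ports.reject.append(rec)
            ports.error.append(f"{rec!r}: {exc!r}")
            if reject_limit is not None and len(ports.reject) > reject_limit:
                raise RuntimeError(f"more than {reject_limit} rejected records") from exc
    return ports

ports = validate([{"amount": "10"}, {"amount": "abc"}, {"amount": "-3"}, {}])
print(len(ports.out), len(ports.reject))  # 1 3
```

Keeping the rejected record and its error message side by side makes later analysis much easier than a single pass/fail status.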

7. What is a sandbox in Abinitio?

A sandbox in Abinitio is a developer's private working area, a directory structure with its own project parameters, where developers can create, test, and debug graphs without affecting the production environment. Graphs are typically checked out of the Enterprise Meta>Environment (EME) into a sandbox and checked back in when changes are ready, which keeps experimentation separate from the main processing environment.

8. How do you optimize performance in Abinitio?

- Parallel Processing: Utilize Abinitio’s parallel processing capabilities to speed up data processing.

- Efficient Component Usage: Choose the right components and avoid unnecessary data transformations.

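- Memory Management: Monitor and adjust memory settings, such as the max-core parameter of memory-intensive components like Sort, Join, and Rollup, to balance memory use against spilling to disk.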

Optimizing performance is essential for handling large volumes of data efficiently.

9. What is a partition in Abinitio?

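A partition in Abinitio refers to dividing data into smaller subsets that can be processed in parallel. This allows for faster processing and efficient use of resources. Each partition can be processed independently, which is a key feature of Abinitio’s architecture that supports high-performance data processing. Partitioning components such as Partition by Key and Partition by Round-robin split a flow into partitions, and departitioning components such as Gather and Merge combine them again.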

10. Explain the difference between a batch and a real-time process.

- Batch Process: Processes data in large groups at scheduled intervals. It is suitable for tasks that do not require immediate results.

- Real-Time Process: Processes data immediately as it arrives, providing instant results. It is used in applications where timely data processing is critical.

Understanding the differences between these processes helps in choosing the right approach for specific data processing needs.

11. What is the purpose of the Input File component?

The Input File component in Abinitio is used to read data from external files. It allows users to specify the file path, a record format (written in DML) that describes the file's layout, and any other parameters needed for data extraction. This component is essential for initiating data flows in a graph and can handle various file types, including delimited text such as CSV, fixed-width, and binary files.

12. How does Abinitio handle data lineage?

Abinitio provides data lineage capabilities, chiefly through its Enterprise Meta>Environment (EME) repository, that allow users to trace the flow of data from its source to its destination. This includes tracking transformations and operations performed on the data throughout the processing pipeline. Data lineage is crucial for ensuring data quality, compliance, and understanding the impact of changes within data processes.

13. What is a graph parameter in Abinitio?

A graph parameter in Abinitio is a variable that can be defined and used within a graph to customize its behavior. Parameters allow developers to change values dynamically at runtime, making graphs more flexible and reusable. They can be used for file paths, conditional processing, and other configurable settings.
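The idea of a parameter with a design-time default that can be overridden at run time can be sketched with Python's `string.Template`, whose `$NAME` / `${NAME}` placeholders resemble the shell-style references commonly used for Ab Initio parameters. This is only an analogy, not how the Co>Operating System evaluates parameters; `DEFAULTS`, `resolve`, and the paths are hypothetical.

```python
from string import Template
from typing import Optional

# Hypothetical defaults declared when the graph is designed.
DEFAULTS = {"DATA_DIR": "/data/in", "RUN_DATE": "2024-01-01"}

def resolve(value: str, overrides: Optional[dict] = None) -> str:
    """Expand $NAME / ${NAME} references; runtime overrides win over defaults."""
    params = {**DEFAULTS, **(overrides or {})}
    return Template(value).substitute(params)  # raises KeyError for an undefined name

INPUT_PATH = "${DATA_DIR}/sales_${RUN_DATE}.dat"
print(resolve(INPUT_PATH))                              # /data/in/sales_2024-01-01.dat
print(resolve(INPUT_PATH, {"RUN_DATE": "2024-06-30"}))  # /data/in/sales_2024-06-30.dat
```

Failing loudly on an undefined name (rather than leaving the placeholder in place) avoids a job silently reading from a wrong path.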

14. Describe the role of the Output File component.

The Output File component is used to write processed data to external files. It enables users to define the file format, location, and other writing parameters. This component plays a crucial role in data integration workflows by ensuring that the final output is stored correctly for further analysis or reporting.

15. What is a join in Abinitio?

A join in Abinitio is used to combine data from two or more sources based on a common key. This operation can be performed using different join types, such as inner, outer, and left joins. Joins are essential for integrating datasets and performing comprehensive data analysis.
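The join types mentioned above can be illustrated with a small in-memory hash join in plain Python. This is not how Ab Initio's Join component is configured (it has its own parameters); the function `join`, its `how` argument, and the sample fields are hypothetical.

```python
from collections import defaultdict

def join(left, right, key, how="inner"):
    """Hash join two lists of dicts on `key`.

    how="inner": only keys found on both sides
    how="left":  every left record, matched where possible
    how="full":  every record from both sides
    """
    if how not in ("inner", "left", "full"):
        raise ValueError(f"unsupported join type: {how}")
    right_by_key = defaultdict(list)
    for rrec in right:
        right_by_key[rrec[key]].append(rrec)

    joined, matched = [], set()
    for lrec in left:
        matches = right_by_key.get(lrec[key], [])
        if matches:
            matched.add(lrec[key])
            joined.extend({**lrec, **rrec} for rrec in matches)
        elif how in ("left", "full"):
            joined.append(dict(lrec))  # unmatched left record kept as-is
    if how == "full":
        for rkey, rrecs in right_by_key.items():
            if rkey not in matched:
                joined.extend(dict(rrec) for rrec in rrecs)  # unmatched right records
    return joined

customers = [{"cust_id": 1, "name": "Ann"}, {"cust_id": 2, "name": "Bo"}]
orders = [{"cust_id": 1, "total": 30}, {"cust_id": 3, "total": 5}]
print(join(customers, orders, "cust_id"))  # [{'cust_id': 1, 'name': 'Ann', 'total': 30}]
```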

Here are four interview questions tailored for freshers in Ab Initio, focusing on fundamental concepts and basic syntax.

16. What is Ab Initio and what are its primary components?

Ab Initio is a powerful data integration tool used for extracting, transforming, and loading (ETL) data. It is known for its high performance and parallel processing capabilities. The primary components of Ab Initio include:

- Graphical Development Environment (GDE): Used to design data flows and transformations visually.

- Co>Operating System: The runtime environment that executes the graphs designed in the GUI.

- Components: Pre-built functions and components such as input/output, transforms, and joins that facilitate data processing.

- Metadata: Information about data sources, structures, and transformations which helps in managing data lineage.

These components work together to enable robust data processing solutions in various industries.

17. How do you create a simple graph in Ab Initio?

To create a simple graph in Ab Initio, you can follow these steps:

- Open the Graphical Development Environment (GDE).

- Create a new graph by selecting ‘New Graph’ from the File menu.

- Drag and drop components from the component palette onto the graph canvas.

- Connect component ports with flows to define how data moves between components.

- Configure each component’s properties as needed.

- Save and run the graph to execute the data processing.

This process allows users to visually map out data processes without needing extensive coding skills.

18. What is a Transform component in Ab Initio?

In Ab Initio, the term usually refers to the family of transform components, such as Reformat, Filter by Expression, Rollup, and Join, that apply transformation logic written in DML to records as they flow through a graph. Key functions of these components include:

- Data Filtering: Removing unwanted records based on specific criteria.

- Data Enrichment: Adding additional information to existing records.

- Data Aggregation: Summarizing data from multiple records into a single output.

- Data Conversion: Changing data types or formats to meet target requirements.

These components are essential for shaping data as it moves from source to target systems, ensuring that it meets business requirements.

19. Explain the concept of parallel processing in Ab Initio.

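Parallel processing in Ab Initio refers to the ability to execute multiple operations simultaneously, enhancing performance and efficiency. It is usually described in three forms: component parallelism (independent branches of a graph run at the same time), pipeline parallelism (a downstream component processes records while upstream components are still producing them), and data parallelism (the same component runs on several partitions of the data at once). Key points include: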

- Data Partitioning: Ab Initio divides large datasets into smaller partitions for processing across multiple nodes.

- Load Balancing: Distributing workloads evenly among available resources to optimize performance.

- Scalability: The architecture allows for scaling up the system by adding more resources as data volume grows.

- Improved Performance: By executing tasks in parallel, overall processing time is significantly reduced.

This capability makes Ab Initio particularly suited for handling large volumes of data in enterprise environments.
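Data parallelism (partition, process each partition independently, then gather) can be sketched in plain Python. The sketch uses a thread pool only to show the structure; CPython threads do not speed up CPU-bound work, and a real engine would typically run each partition in its own process, possibly on another host. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def partition_round_robin(records, n):
    """Deal records into n partitions in turn, giving evenly sized partitions."""
    parts = [[] for _ in range(n)]
    for i, rec in enumerate(records):
        parts[i % n].append(rec)
    return parts

def process_partition(part):
    """Independent per-partition work (here: square each value)."""
    return [x * x for x in part]

def run_data_parallel(records, n=4):
    """Partition, run the same work on every partition concurrently, then gather."""
    parts = partition_round_robin(records, n)
    with ThreadPoolExecutor(max_workers=n) as pool:
        results = list(pool.map(process_partition, parts))
    # Gather: concatenate partition outputs; record order differs from the input.
    return [x for part in results for x in part]

print(run_data_parallel(list(range(8))))  # [0, 16, 1, 25, 4, 36, 9, 49]
```

The output order shows why a gather does not preserve the original record order; if order matters, the data must be sorted or merged on a key.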

Abinitio Intermediate Interview Questions

Abinitio interview questions for intermediate candidates focus on essential concepts and practical applications of the tool. Candidates should understand data processing, performance optimization, components, and best practices in Abinitio to demonstrate their capability in real-world scenarios.

20. What is Abinitio and what are its main components?

Abinitio is a data processing platform that provides data integration, transformation, and analytics solutions. Its main components include:

- Graphical Development Environment (GDE): A visual interface for designing data processing graphs.

- Co>Operating System: The execution environment for Abinitio graphs, managing job execution and resource allocation.

- Data Profiler: A tool for analyzing data quality and structure before processing.

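- Express>It: A tool for building and maintaining business rules and template-based applications, so that rule logic can be configured without editing the underlying graphs.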

Understanding these components is vital for leveraging Abinitio’s capabilities effectively.

21. How do you optimize performance in Abinitio graphs?

Performance optimization in Abinitio graphs can be achieved through various techniques:

- Parallelism: Utilize parallel processing by designing graphs that can run multiple instances simultaneously.

- Partitioning: Divide large data sets into smaller, manageable partitions to increase throughput.

- Memory Management: Optimize memory usage by adjusting buffer sizes and configuring components to minimize data movement.

- Component Configuration: Fine-tune component settings to enhance execution efficiency, such as using the right types of joins or filters.

Effective performance optimization leads to faster processing and reduced resource consumption.

22. Explain the difference between a ‘reformat’ and a ‘transform’ in Abinitio.

In Abinitio, the two terms operate at different levels:

- Reformat: A specific component that applies a transform function to each record. Besides rearranging fields and changing data types, it can compute new fields, drop fields, and apply business logic, so it changes content as well as structure.

- Transform: The general term for the transform functions, written in DML, that define record-level logic, and for the family of transform components that execute them, including Reformat, Join, Rollup, and Scan.

In other words, Reformat is one kind of transform component, and the practical choice is which transform component fits the task, for example Reformat for record-by-record changes and Rollup for aggregation.
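A record-level transform of the kind a Reformat applies can be sketched as a plain Python function: one record in, one record out, with fields renamed, converted, derived, and dropped. In Ab Initio this logic would be a DML transform function; the Python function `reformat_order` and all field names here are hypothetical.

```python
from datetime import date

def reformat_order(rec: dict) -> dict:
    """One record in, one record out: rename, convert, derive, and drop fields."""
    return {
        "customer_id": int(rec["cust"]),                                  # rename + str -> int
        "order_date": date.fromisoformat(rec["dt"]),                      # str -> date
        "net_amount": round(float(rec["gross"]) - float(rec["tax"]), 2),  # derived field
        # "internal_note" is not copied, so it is dropped from the output
    }

raw = {"cust": "42", "dt": "2024-03-15", "gross": "120.00", "tax": "20.50", "internal_note": "x"}
print(reformat_order(raw))
```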

23. What is the role of ‘lookup’ in Abinitio?

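A lookup file in Abinitio is a dataset, usually small enough to hold in memory, that transform functions query by key (for example, with the lookup() DML function) to retrieve additional information from a secondary data source. It allows you to perform the following: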

- Data Enrichment: Add relevant attributes from external datasets to the primary dataset.

- Validation: Check data integrity and ensure values exist in the reference dataset.

- Conditional Logic: Apply conditional transformations based on the lookup results.

Using lookups effectively can significantly enhance the quality and context of the processed data.
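Enrichment and validation against reference data can be sketched in plain Python with a dictionary playing the role of an in-memory lookup. This is an analogy, not Ab Initio's lookup API; `COUNTRY_LOOKUP`, `enrich`, and the field names are hypothetical.

```python
# Hypothetical reference data, loaded once and kept in memory like a lookup file.
COUNTRY_LOOKUP = {"US": "United States", "DE": "Germany", "IN": "India"}

def enrich(records):
    """Enrich each record from the lookup and flag codes missing from the reference data."""
    out = []
    for rec in records:
        name = COUNTRY_LOOKUP.get(rec["country"])  # keyed, constant-time lookup
        out.append({
            **rec,
            "country_name": name if name is not None else "UNKNOWN",  # enrichment
            "valid_country": name is not None,                        # validation
        })
    return out

print(enrich([{"id": 1, "country": "DE"}, {"id": 2, "country": "XX"}]))
```

Downstream logic can then branch on `valid_country`, which is the conditional-logic use mentioned above.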

24. How do you handle errors in Abinitio?

Error handling in Abinitio can be managed through a combination of techniques:

- Error Ports: Use error ports on components to capture and redirect records that fail processing.

- Logging: Implement comprehensive logging to track errors and understand failure points.

- Checkpoints: Use checkpoints to save the state of the process, allowing for recovery in case of failures.

- Debugging Tools: Utilize Abinitio’s debugging features to analyze graphs and identify issues before execution.

Effective error management ensures data integrity and minimizes downtime during processing.

25. Can you explain the concept of ‘partitioning’ in Abinitio?

Partitioning in Abinitio refers to the process of dividing a dataset into smaller, manageable subsets to improve performance and scalability. Key points include:

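- Types of Partitioning: Common methods include partitioning by key (a hash of the key fields), round-robin, range, and expression; a broadcast sends a full copy of the data to every partition.

- Performance Benefits: Partitioning allows parallel processing, which reduces elapsed time for large datasets.

- Key Co-location: Partitioning by key guarantees that all records with the same key value land in the same partition, which keyed operations such as Join and Rollup need in order to produce correct results in parallel.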

Properly implemented partitioning strategies can lead to significant improvements in data processing workflows.
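Two of these methods can be sketched in plain Python: key (hash) partitioning, which keeps equal keys together, and range partitioning, which routes records by value boundaries. Round-robin, by contrast, simply deals records out in turn. The function names and boundaries are hypothetical; `zlib.crc32` is used because Python's built-in string hash changes between runs.

```python
import zlib
from bisect import bisect_right

def partition_by_key(records, key, n):
    """Hash the key so all records with the same key value share a partition."""
    parts = [[] for _ in range(n)]
    for rec in records:
        # crc32 gives a hash that is stable across runs (Python's str hash is salted).
        parts[zlib.crc32(str(rec[key]).encode()) % n].append(rec)
    return parts

def partition_by_range(records, key, boundaries):
    """Route each record to the partition whose key range contains it.

    With boundaries [100, 1000]: p0 holds < 100, p1 holds 100..999, p2 holds >= 1000.
    """
    parts = [[] for _ in range(len(boundaries) + 1)]
    for rec in records:
        parts[bisect_right(boundaries, rec[key])].append(rec)
    return parts

orders = [{"cust": "A", "amt": 50}, {"cust": "B", "amt": 150},
          {"cust": "A", "amt": 5000}, {"cust": "C", "amt": 999}]
print([[r["amt"] for r in p] for p in partition_by_range(orders, "amt", [100, 1000])])  # [[50], [150, 999], [5000]]
```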

26. What is the purpose of the ‘join’ component in Abinitio?

The ‘join’ component in Abinitio is used to combine records from two or more datasets based on a common key. Its purposes include:

- Data Integration: Merge data from different sources to create a unified view.

- Data Enrichment: Augment datasets with additional attributes from other data sources.

- Join Types: Perform inner, outer, and other types of joins according to the business logic.

Using the join component effectively can enhance the richness of the data being processed.

27. Describe how to use the ‘aggregate’ component in Abinitio.

The ‘aggregate’ component in Abinitio is used to perform summarization operations on data; the more flexible Rollup component is commonly used for the same purpose. Key aspects include:

- Group By: Define grouping fields to aggregate data, such as calculating totals or averages.

- Aggregation Functions: Utilize built-in functions like SUM, COUNT, AVG, etc., to derive summary statistics.

- Output Structure: Specify the output structure to reflect the aggregated results accurately.

Proper use of the aggregate component allows for insightful data analysis and reporting.
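The group-by-and-summarize idea can be sketched in plain Python. This is not Aggregate or Rollup syntax; `rollup` and the sample fields are hypothetical, and the sketch holds each group's values in memory for simplicity rather than keeping running totals.

```python
from collections import defaultdict

def rollup(records, key, value):
    """Group records by `key` and emit count, sum, avg, min, and max of `value`."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec[value])
    return [
        {key: k, "count": len(v), "sum": sum(v), "avg": sum(v) / len(v),
         "min": min(v), "max": max(v)}
        for k, v in sorted(groups.items())  # sorted by key for deterministic output
    ]

sales = [{"region": "east", "amt": 10}, {"region": "west", "amt": 4}, {"region": "east", "amt": 20}]
print(rollup(sales, "region", "amt"))
```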

28. What is the difference between ‘static’ and ‘dynamic’ parameters in Abinitio?

In Abinitio, parameters can be classified as static or dynamic based on their assignment:

- Static Parameters: These are defined at design time and do not change during execution. They are typically hardcoded values.

- Dynamic Parameters: These are assigned at runtime and can vary based on external inputs or configurations, allowing for more flexible graph execution.

Understanding the difference helps in designing adaptable and robust Abinitio graphs.

29. How do you implement source control in Abinitio?

Implementing source control in Abinitio is critical for managing changes and collaboration. Key strategies include:

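- Version Control Systems: Use the Enterprise Meta>Environment (EME), Ab Initio's repository, to check graphs in and out and track their versions, alongside systems like Git or SVN where a team uses them for related files and configurations.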

- Change Management: Maintain a record of changes made to graphs, including comments and reasons for modifications.

- Graph Naming Conventions: Establish clear naming conventions for graphs to reflect their purpose and version.

Effective source control practices help in maintaining the integrity and traceability of data processing workflows.

30. What is metadata, and why is it important in Abinitio?

Metadata in Abinitio refers to data that describes other data, providing context and meaning. Its importance includes:

- Data Governance: Enhances data quality and compliance by maintaining standards and definitions.

- Improved Data Management: Facilitates efficient data integration and processing by providing insights into data lineage and structure.

- Collaboration: Enables better communication among teams by providing a shared understanding of data assets.

Effective management of metadata is crucial for successful data projects and analytics initiatives.

These intermediate questions are designed for candidates with some experience in Ab Initio, focusing on practical applications and considerations in real-world scenarios.

35. What is the purpose of the Ab Initio graph, and how is it structured?

An Ab Initio graph is a visual representation of a data flow process. It is structured using various components such as input and output files, transformations, and data processing functions. The main elements include:

- Components: These are the building blocks such as read, write, and transform operations.

- Flows: Connections between component ports that define how data moves between components.

- Parameters: Variables that can be set to control the behavior of components.

Graphs enable users to design complex data processes visually, making it easier to manage and understand data transformations.

36. How do you optimize a graph in Ab Initio for performance?

Optimizing a graph in Ab Initio can significantly enhance performance. Here are some best practices:

- Use parallelism: Leverage Ab Initio’s parallel processing capabilities to run multiple processes simultaneously.

- Optimize data partitioning: Ensure that data is evenly distributed across available resources to prevent bottlenecks.

- Minimize data movement: Reduce the number of times data is read and written to improve efficiency.

- Efficient component choice: Select the appropriate components for the task to minimize unnecessary processing overhead.

Implementing these strategies can lead to significant reductions in processing time and resource utilization.

37. Can you explain the concept of ‘reusable components’ in Ab Initio?

Reusable components in Ab Initio are pre-defined graph sections or functions, such as subgraphs and shared DML files containing record formats or transform functions, that can be utilized across multiple graphs. This practice enhances maintainability and reduces redundancy. Key benefits include:

- Consistency: Ensures that the same logic is applied uniformly across different graphs.

- Efficiency: Reduces development time as components do not need to be recreated.

- Ease of maintenance: When graphs reference a shared subgraph or DML file rather than a copy of it, a change to the shared item propagates to every graph that uses it.

Creating and using reusable components is a best practice that streamlines the development process and improves overall code quality.

38. What are the different types of data files supported by Ab Initio?

Ab Initio supports a variety of data file types, allowing flexibility in data processing. The main types include:

- Flat files: Simple text files, often used for structured data.

- Delimited files: Files where data fields are separated by specific characters (like commas or tabs).

- Binary files: Non-text files used for efficient storage and retrieval of complex data.

- Database tables: Data read from and written to relational databases through database components, which can run SQL queries.

Understanding the types of data files that Ab Initio can process helps in designing effective data workflows and ensuring compatibility with various data sources.

Abinitio Interview Questions for Experienced

This set of Ab Initio interview questions is tailored for experienced professionals, focusing on advanced topics such as architecture, optimization techniques, scalability challenges, design patterns, and leadership in data integration projects.

39. What are the key architectural components of Ab Initio?

Ab Initio’s key architectural components include the following:

- Graphical Development Environment (GDE): This is the interface where developers design ETL processes using a drag-and-drop approach.

- Co>Operating System: This is the runtime environment that executes the graphs created in GDE, managing resources and execution flow.

- Component Library: The prebuilt components that perform data processing and transformation, which the Co>Operating System runs in parallel for performance and scalability.

- Metadata Repository: The Enterprise Meta>Environment (EME) stores metadata about graphs and datasets, provides version control, and aids in job tracking and data lineage.

40. How can you optimize performance in Ab Initio?

Performance optimization in Ab Initio can be achieved through several strategies:

- Parallelism: Utilize parallel processing by designing graphs that allow for concurrent execution of components.

- Memory Management: Fine-tune memory settings in the Co>Operating System to ensure optimal use of available resources.

- Partitioning: Use data partitioning techniques to distribute workloads across multiple nodes, enhancing processing speed.

- Caching: Implement caching for frequently accessed data to reduce the need for repeated data retrieval.

Combining these strategies can significantly enhance the performance of data processing jobs.

41. What design patterns are commonly used in Ab Initio?

Common design patterns in Ab Initio include:

- Data Flow Pattern: This pattern focuses on the flow of data through various transformations and is fundamental in ETL design.

- Modular Design: Components are designed to be reusable, allowing for easier maintenance and updates.

- Pipeline Pattern: Downstream components begin processing records as soon as upstream components emit them, instead of waiting for the whole dataset, so several stages work at the same time.

- Batch Processing Pattern: Jobs are scheduled and executed in batches, optimizing resource utilization during off-peak hours.

These patterns help in building scalable and maintainable data integration solutions.

42. Explain the concept of data lineage in Ab Initio.

Data lineage in Ab Initio refers to the process of tracking the flow of data from its origin through the various transformations it undergoes until it reaches the final output. It involves:

- Source Tracking: Identifying the original data sources.

- Transformation Tracking: Documenting how data is transformed at each stage of the processing pipeline.

- Impact Analysis: Understanding how changes in data sources or transformations affect downstream processes.

Data lineage is essential for auditing, compliance, and troubleshooting data quality issues.
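Source tracking and impact analysis are both graph traversals over "dataset feeds dataset" edges. In Ab Initio this information is typically gathered and browsed in the EME; the plain-Python sketch below only illustrates the traversal, and the `EDGES` map and dataset names are hypothetical.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built from it.
EDGES = {
    "raw_orders.dat": ["clean_orders.dat"],
    "customers.dat": ["orders_enriched.dat"],
    "clean_orders.dat": ["orders_enriched.dat"],
    "orders_enriched.dat": ["daily_sales_report"],
}

def downstream(dataset, edges=EDGES):
    """Impact analysis: everything that could change if `dataset` changes (BFS)."""
    seen, queue = set(), deque([dataset])
    while queue:
        for nxt in edges.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def upstream(dataset, edges=EDGES):
    """Source tracking: reverse every edge, then walk the reversed graph."""
    reverse = {}
    for src, targets in edges.items():
        for t in targets:
            reverse.setdefault(t, []).append(src)
    return downstream(dataset, reverse)

print(sorted(upstream("daily_sales_report")))
```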

43. How do you implement error handling in Ab Initio graphs?

Error handling in Ab Initio graphs can be implemented through the following methods:

- Reject Files: Configure components to write rejected records to reject files for further analysis.

- Error Handling Components: Connect components' reject and error ports to downstream components that record, repair, or report the failed records and implement recovery logic.

- Notifications: Set up notifications to alert developers or operators about errors during job execution.

Effective error handling mechanisms ensure data integrity and facilitate prompt issue resolution.

44. Discuss the importance of metadata in Ab Initio.

Metadata plays a crucial role in Ab Initio by providing context and meaning to the data being processed. Its importance includes:

- Data Management: Helps in understanding data structures, relationships, and definitions, aiding in effective management.

- Quality Assurance: Enables tracking of data quality metrics and lineage, ensuring accuracy and reliability.

- Documentation: Acts as a reference for developers and analysts, facilitating easier onboarding and knowledge transfer.

Effective metadata management enhances the overall efficiency and effectiveness of data integration processes.

45. What are some best practices for developing Ab Initio graphs?

Best practices for developing Ab Initio graphs include:

- Modular Design: Create reusable components to promote code efficiency and maintainability.

- Performance Testing: Regularly test graphs under different loads to identify performance bottlenecks.

- Version Control: Implement a version control system to manage changes and maintain a history of modifications.

- Documentation: Maintain clear documentation for each component and graph to facilitate future maintenance.

Following these best practices can lead to more robust and scalable data integration solutions.

46. How do you handle large volumes of data in Ab Initio?

Handling large volumes of data in Ab Initio involves several strategies:

- Partitioning: Divide large datasets into smaller partitions to enable parallel processing and improve performance.

- Incremental Load: Use incremental loading techniques to process only new or changed data, reducing the volume of data handled at once.

- Resource Allocation: Optimize resource allocation by configuring the Co>Operating System to utilize available hardware effectively.

- Data Compression: Apply data compression methods to minimize storage requirements and improve transmission times.

These strategies enhance performance and make processing large datasets more manageable.
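Incremental loading is often implemented with a watermark: remember the latest change timestamp processed so far and extract only rows changed after it. The plain-Python sketch below assumes the source has an `updated_at` column; that column, the function name, and the sample rows are hypothetical, and this simple approach does not detect deleted rows.

```python
from datetime import datetime

def incremental_extract(rows, last_watermark):
    """Return rows changed since the previous run and the watermark to store for the next run."""
    changed = [r for r in rows if r["updated_at"] > last_watermark]
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark

rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 3)},
    {"id": 3, "updated_at": datetime(2024, 1, 5)},
]
changed, watermark = incremental_extract(rows, datetime(2024, 1, 2))
print([r["id"] for r in changed], watermark)  # [2, 3] 2024-01-05 00:00:00
```

Running it again with the stored watermark returns no rows, which is the point: unchanged data is not reprocessed.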

47. Explain the role of the Ab Initio Co>Operating System.

The Ab Initio Co>Operating System is the execution engine responsible for running Ab Initio graphs. Its key roles include:

- Resource Management: Manages CPU, memory, and I/O resources to optimize job execution and performance.

- Execution Control: Coordinates the execution of components and manages data flow between them.

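- Phases and Checkpoints: Runs graphs in phases and can take checkpoints at phase boundaries, so that a failed job can be restarted from the last completed checkpoint rather than from the beginning. Scheduling itself is usually handled by Conduct>It or an external scheduler.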

The Co>Operating System is essential for ensuring that data integration tasks are executed efficiently and effectively.

48. What is the significance of the Ab Initio graph?

An Ab Initio graph is a visual representation of a data integration process, illustrating the flow of data through various components. Its significance lies in:

- Ease of Design: The graphical interface simplifies the design process, allowing developers to easily visualize and understand data flows.

- Debugging: Graphs enable easier debugging as developers can track data movement and identify bottlenecks visually.

- Collaboration: Facilitates collaboration among team members by providing a clear framework for discussing data integration processes.

Overall, graphs enhance productivity and communication in data integration projects.

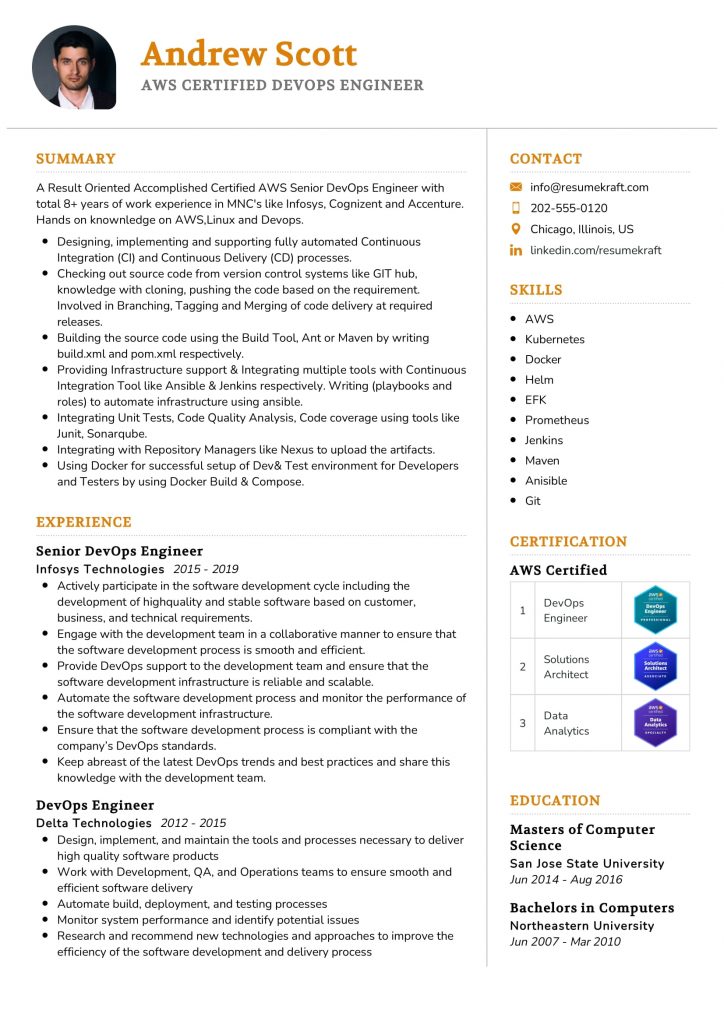

Build your resume in 5 minutes

Our resume builder is easy to use and will help you create a resume that is ATS-friendly and will stand out from the crowd.

49. How do you mentor junior team members in Ab Initio?

Mentoring junior team members in Ab Initio can be approached through the following methods:

- Knowledge Sharing: Conduct regular knowledge-sharing sessions to discuss best practices, design patterns, and troubleshooting techniques.

- Hands-on Training: Provide hands-on training by guiding them through real projects and encouraging them to take ownership of tasks.

- Code Reviews: Conduct code reviews to provide constructive feedback and highlight areas for improvement.

- Encouraging Questions: Foster an environment where juniors feel comfortable asking questions and seeking guidance.

Effective mentorship can significantly enhance the skills and confidence of junior team members.

50. What are the challenges of scaling Ab Initio solutions?

Scaling Ab Initio solutions presents several challenges, including:

- Resource Constraints: Limited hardware resources can impact the ability to scale processing capabilities effectively.

- Data Volume Growth: As data volumes increase, ensuring that the architecture can handle the load without performance degradation is crucial.

- Complexity Management: As solutions grow, managing the complexity of graphs and ensuring maintainability becomes challenging.

- Integration with Other Systems: Ensuring seamless integration with other data sources or systems can complicate scaling efforts.

Addressing these challenges requires careful planning and a strategic approach to architecture and resource management.

Below is a question designed for experienced candidates in Ab Initio, focusing on architecture and optimization.

54. How do you optimize performance in Ab Initio graphs?

Performance optimization in Ab Initio graphs can be achieved through several strategies:

- Parallelism: Utilize parallel processing capabilities by configuring the graphs to run multiple instances simultaneously, effectively utilizing available resources.

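- Component Selection: Choose the most efficient components for the task. For example, use the Join component rather than Merge when records must be combined by matching key fields; Merge only combines inputs that are already sorted into a single sorted flow and does not match records.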

- Data Partitioning: Implement data partitioning to distribute the workload. This allows multiple processes to handle smaller chunks of data, reducing processing time.

- Memory Management: Monitor and adjust memory settings for components to avoid excessive swapping and enhance performance.

- Resource Management: Schedule jobs during off-peak hours to maximize resource availability and reduce contention.

By applying these strategies, you can significantly improve the performance and scalability of your Ab Initio graphs, leading to more efficient data processing workflows.
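The difference between merging and joining can be shown in plain Python. Here `heapq.merge` plays the role of a merge-style departition (combining inputs already sorted on a key into one sorted flow), while a keyed match plays the role of a join. This is an analogy rather than Ab Initio code, and the data is hypothetical.

```python
import heapq

# Two partitions, each already sorted by customer id (the first tuple element).
part_0 = [(1, "order-a"), (4, "order-d")]
part_1 = [(2, "order-b"), (3, "order-c")]

# Merge-style: interleave already-sorted inputs into one sorted flow; nothing is matched.
merged = list(heapq.merge(part_0, part_1, key=lambda rec: rec[0]))

# Join-style: match each order to reference data on the shared key.
names = {1: "Ann", 3: "Cy"}
joined = [(cid, order, names[cid]) for cid, order in merged if cid in names]

print(merged)  # [(1, 'order-a'), (2, 'order-b'), (3, 'order-c'), (4, 'order-d')]
print(joined)  # [(1, 'order-a', 'Ann'), (3, 'order-c', 'Cy')]
```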

How to Prepare for Your Abinitio Interview

Preparing for an Abinitio interview requires a focused approach on technical skills, problem-solving abilities, and understanding of data processing concepts. Familiarity with the Abinitio toolset, along with practical experience, is essential for success in this specialized field.

- Understand Abinitio Architecture: Familiarize yourself with the architecture and components of Abinitio, including graphs, components, and the metadata layer. Knowing how these elements interact will help you answer technical questions and demonstrate your understanding of the tool’s ecosystem.

- Practice Graph Development: Build sample graphs using Abinitio to solidify your skills. Focus on creating complex data transformations and error handling techniques. Hands-on experience will prepare you for practical scenarios during the interview.

- Review ETL Concepts: Ensure you have a strong grasp of Extract, Transform, Load (ETL) processes. Be prepared to discuss data integration strategies, data quality, and data warehousing concepts, as these are critical in Abinitio environments.

- Study Performance Tuning: Learn about performance tuning techniques in Abinitio, such as optimizing graphs, parallel processing, and resource management. Interviewers often look for candidates who can enhance system efficiency and reduce processing time.

- Familiarize with Metadata Management: Understand how Abinitio handles metadata and its significance in data governance. Be ready to discuss how you’ve used metadata to improve data lineage and compliance in your previous projects.

- Prepare for Scenario-Based Questions: Anticipate questions that test your problem-solving skills, and practice explaining your thought process clearly, particularly for situations involving data discrepancies or complex transformations.

- Engage with Community Resources: Join online forums, webinars, and Ab Initio user groups to connect with professionals in the field. The community can offer insights, tips, and networking opportunities that strengthen your preparation.

Common Ab Initio Interview Mistakes to Avoid

Preparing for an Ab Initio interview requires careful attention to detail, and candidates often overlook aspects that can hurt their performance. Avoid the following common mistakes to improve your chances of securing the position.

- Lack of Understanding of Ab Initio Components: Failing to grasp core building blocks such as graphs, components, DML record formats, and metadata can hinder your ability to explain how you would implement a solution.

- Neglecting Performance Optimization: Not being prepared to discuss performance tuning techniques and best practices can be a red flag, as efficiency is crucial in data processing environments.

- Ignoring the Importance of ETL Processes: Ab Initio is primarily used as an ETL tool, so failing to articulate your understanding of ETL concepts can make you appear unqualified for the role.

- Underestimating the Role of Data Quality: Failing to emphasize the significance of data quality and validation within your projects can indicate a lack of attention to detail.

- Inadequate Knowledge of Parallel Processing: Ab Initio’s strength lies in parallel processing (component, pipeline, and data parallelism). Without a working understanding of how it operates, you will struggle to explain how to use the tool effectively.

- Not Practicing Common SQL Queries: SQL proficiency is often assessed in Ab Initio interviews, and a lack of practice shows quickly when you are asked to write or optimize queries.

- Failure to Provide Real-World Examples: Candidates often miss the chance to showcase their practical Ab Initio experience through specific examples, which are the clearest evidence of problem-solving skill.

- Being Unprepared for Scenario-Based Questions: Many interviews include scenario-based questions. Not preparing for these can leave you unable to demonstrate your analytical thinking and approach to real-world challenges.

Key Takeaways for Ab Initio Interview Success

- Prepare an impactful resume using an AI resume builder to highlight your Ab Initio skills and relevant experience, ensuring it aligns with industry standards.

- Utilize resume templates for a clean and professional format, making it easier for hiring managers to read and understand your qualifications.

- Showcase your experience with effective resume examples that demonstrate your proficiency in Ab Initio, emphasizing your contributions to previous projects.

- Craft personalized cover letters that convey your enthusiasm for the role and the specific skills you bring to the table, making your application stand out.

- Engage in mock interview practice to refine your responses and boost your confidence, focusing on technical questions related to Ab Initio and its applications.

Frequently Asked Questions

1. How long does a typical Ab Initio interview last?

A typical Ab Initio interview lasts between 30 minutes and an hour. That time usually covers technical questions on Ab Initio’s features and data processing concepts, plus behavioral questions that assess cultural fit. Be prepared for a focused discussion of your Ab Initio experience and possible problem-solving scenarios, and manage your time so that all the necessary topics are covered.

2. What should I wear to an Ab Initio interview?

For an Ab Initio interview, business casual attire is usually appropriate: slacks or a skirt, a collared shirt or blouse, and closed-toe shoes. Look professional but stay comfortable, since that will help you feel more confident during the interview. Research the company culture beforehand; if the environment is more formal, consider wearing a suit to make a positive impression.

3. How many rounds of interviews are typical for an Ab Initio position?

For an Ab Initio position, candidates can typically expect two to three rounds of interviews. The first round is often a screening interview focused on your resume and basic technical skills. Later rounds usually go deeper into technical knowledge and problem-solving ability and may include a practical assessment. Each round may involve different interviewers, including HR representatives and technical team members, so that both soft and hard skills are evaluated.

4. Should I send a thank-you note after my Ab Initio interview?

Yes, sending a thank-you note after your Ab Initio interview is highly recommended. It demonstrates professionalism and gratitude for the opportunity. In your note, briefly thank the interviewer for their time, reiterate your interest in the position, and mention a key point from the discussion that resonated with you. This can reinforce your candidacy and keep you top of mind as the team makes its hiring decision.