Top 10 Highest Paying Government Jobs in India 2026

Landing a government job in India is a dream come true for many people. The attractive salaries, influential positions, and generous benefits that come with these roles make them highly sought after. Whether it’s in the All India Services, Defence, or the PSU sectors, these jobs not only offer financial stability but also a sense of social prestige.

- 1. Indian Administrative Service (IAS)

- 2. Indian Foreign Service (IFS)

- 3. Indian Police Service (IPS)

- 4. Indian Revenue Service (IRS)

- 5. Defence Services (Army, Navy, Air Force)

- 6. RBI Grade B Officer

- 7. PSU Executive Positions

- 8. Professors in Government Universities

- 9. Railway Officers

- 10. SSC CGL Posts (Income Tax Inspector, Examiner, ASO)

- Bonus Mention: High Court and Supreme Court Judges

- Comprehensive Comparison Table: Top Government Jobs and Salaries

- Career Growth and Benefits

- Conclusion

1. Indian Administrative Service (IAS)

The IAS is considered one of the most prestigious careers in Indian public service. Officers play a crucial role in managing essential administrative tasks across the country, which include everything from policymaking to district governance and developmental initiatives.

- Starting salary: ₹1,40,000/month (basic pay) along with various allowances (DA, HRA, transport, medical).

- Eligibility: A graduation degree is required, and candidates must pass the UPSC Civil Services Exam.

- Perks: Enjoy benefits like housing, a vehicle, travel allowances, a pension after retirement, and the assurance of lifelong job security.

2. Indian Foreign Service (IFS)

The Indian Foreign Service (IFS) is India’s face on the global stage. IFS officers handle international relations, represent the country at embassies and consulates, and safeguard India’s interests overseas. Their careers take them around the world and deep into global diplomacy and negotiation.

- Starting salary: ₹1,40,000/month with sizable allowances – plus perks for international postings.

- Eligibility: Graduation; selection through UPSC Civil Services Exam.

- Perks: Foreign travel, housing abroad, special incentives.

3. Indian Police Service (IPS)

Officers in the Indian Police Service (IPS) are at the helm of law enforcement across the nation. Their primary focus is on maintaining public order, preventing crime, and ensuring national security. During emergencies, IPS officers step up to manage disaster responses and uphold the law, playing a vital role in keeping society safe and stable.

- Starting salary: ₹1,40,000/month with DA, HRA, travel allowances.

- Eligibility: Graduation; chosen via UPSC CSE.

- Perks: Government residence, security, vehicle, and retirement pension.

4. Indian Revenue Service (IRS)

The Indian Revenue Service (IRS) is key to managing India’s tax system, overseeing both direct (Income Tax) and indirect (Customs & GST) revenue collection. IRS officers investigate financial crimes, enforce tax regulations, and are crucial to the country’s economic structure. Their work has a direct impact on government funding and fiscal policies.

- Starting salary: ₹1,40,000/month including DA and HRA.

- Eligibility: Graduation; via UPSC CSE.

- Perks: Housing, medical insurance, transport, study leave.

5. Defence Services (Army, Navy, Air Force)

The Defence Services, which include the Indian Army, Navy, and Air Force, are tasked with protecting the nation’s security and strategic interests. A career in defence is characterized by discipline, leadership, and bravery, and offers opportunities for personal development and national service. Officers undergo extensive training, face unique challenges, and earn significant respect in society.

- Salary range: ₹60,000 – ₹2,50,000/month (with risk pay, allowances for high-altitude postings, pension).

- Eligibility: 12th or graduation; entry via NDA, CDS, AFCAT, UES.

- Perks: Free healthcare, canteen facilities, retirement pension, housing.


6. RBI Grade B Officer

If you’re eyeing a position as a Grade B Officer with the Reserve Bank of India (RBI), you’re stepping into a pivotal role in shaping the country’s financial and monetary policies. RBI officers are tasked with regulating banking institutions, maintaining currency stability, and driving national economic growth. It’s a role that’s not only intellectually stimulating but also comes with great perks and opportunities for career progression.

- Starting salary: ₹74,000-₹1,25,000/month including allowances, special benefits for officers.

- Eligibility: Graduation; Recruitment by RBI Grade B exam.

- Perks: Accommodation, educational reimbursement, retirement benefits.

7. PSU Executive Positions

Public Sector Undertakings (PSUs) such as ONGC, BHEL, and NTPC recruit executives to steer state-run enterprises and manage industrial operations. PSU executives lead large teams, oversee projects, and ensure that government businesses run profitably and efficiently. These positions offer stability, attractive salaries, and a chance to gain diverse experience across major industries.

- Starting salary: ₹85,000-₹1,50,000/month plus bonuses, performance pay, HRA.

- Eligibility: Graduation, especially engineering; GATE or company-specific exams.

- Perks: Subsidized housing, medical, pension, travel concessions.

8. Professors in Government Universities

Professors at government universities, both central and state, play a crucial role in molding India’s educational framework. They’re involved in teaching, conducting research, developing curricula, and mentoring the next generation of leaders. Government professors enjoy competitive pay, academic freedom, and ample support for their scholarly endeavors.

- Salary range: ₹70,000-₹1,50,000/month (following UGC pay scales).

- Eligibility: NET/JRF or PhD depending on the level.

- Perks: Research grants, housing, sabbaticals, pension.

9. Railway Officers

Railway Officers are essential to the smooth operation of the extensive Indian Railways network, which ranks as the fourth largest in the world. They oversee key departments like operations, engineering, accounts, and traffic management. Their leadership is vital for ensuring safe, efficient, and seamless train services that connect the entire nation.

- Starting salary: ₹69,000/month plus allowances, free tickets/housing.

- Eligibility: Graduation; selection via RRB exams or UPSC Engineering Services.

- Perks: Travel concessions, government housing, medical benefits.

10. SSC CGL Posts (Income Tax Inspector, Examiner, ASO)

Lastly, positions like Income Tax Inspector, Examiner, and Assistant Section Officer (ASO) filled through the Staff Selection Commission Combined Graduate Level (SSC CGL) exam are some of the most sought-after government jobs for graduates. These roles involve important administrative, revenue, and policy-related responsibilities, offering solid pay, rapid promotions, and job security.

- Basic pay range: ₹44,900 – ₹1,42,400/month (Pay Level 7), plus allowances.

- Eligibility: Any graduate; SSC CGL exam.

- Perks: Regular increments, job stability, retirement pension.

Bonus Mention: High Court and Supreme Court Judges

In India, judges of the High Courts and the Supreme Court hold the top positions within the judicial system, playing a crucial role in upholding justice and the Constitution. The High Court is the highest judicial authority at the state level, and its judges hear appeals from lower courts, interpret constitutional matters, and decide significant civil and criminal cases.

On the other hand, Supreme Court judges serve as the final appellate authority in the country, addressing disputes between states and the central government, reviewing laws for their constitutionality, and protecting the fundamental rights of citizens. These roles require exceptional legal knowledge and impartiality, and they are among the most esteemed and well-compensated positions in Indian public service.

- Salary range: ₹2,50,000 – ₹2,80,000/month, not including allowances.

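- Eligibility: Law degree plus long experience as an advocate or judicial officer (for example, 10 years as an advocate or in judicial office for a High Court judgeship); appointments are made through the collegium system rather than a competitive exam.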

Comprehensive Comparison Table: Top Government Jobs and Salaries

| Job Title | Starting Salary (₹/month) | Key Allowances & Perks | Eligibility/Exam |
|---|---|---|---|
| IAS, IPS, IFS, IRS | 1,40,000+ | DA, HRA, transport, residence | UPSC CSE |
| Defence Services | 60,000 – 2,50,000 | Pension, risk pay, housing | NDA/CDS/AFCAT |
| RBI Grade B Officer | 74,000 – 1,25,000 | HRA, education, housing | RBI Grade B Exam |
| PSU Executive | 85,000 – 1,50,000 | Performance bonus, housing | GATE/Company Exam |
| Professor (Govt Univ.) | 70,000 – 1,50,000 | Research, housing, retirement | NET/JRF/PhD |
| Railway Officer | 69,000+ | Free tickets, housing, HRA | Engineering/UPSC |
| SSC CGL Posts | 44,900 – 1,42,400 | DA, HRA, retirement pension | SSC CGL |
| Supreme/High Court Judge | 2,50,000 – 2,80,000 | Official residence, security | Law Graduate |


Career Growth and Benefits

Government jobs offer more than attractive salaries: they also provide lifelong job security, strong retirement benefits, affordable housing, health care, and a respected position in society. As employees climb the ranks, their pay can rise significantly through promotions, additional allowances, and regular pay revisions.

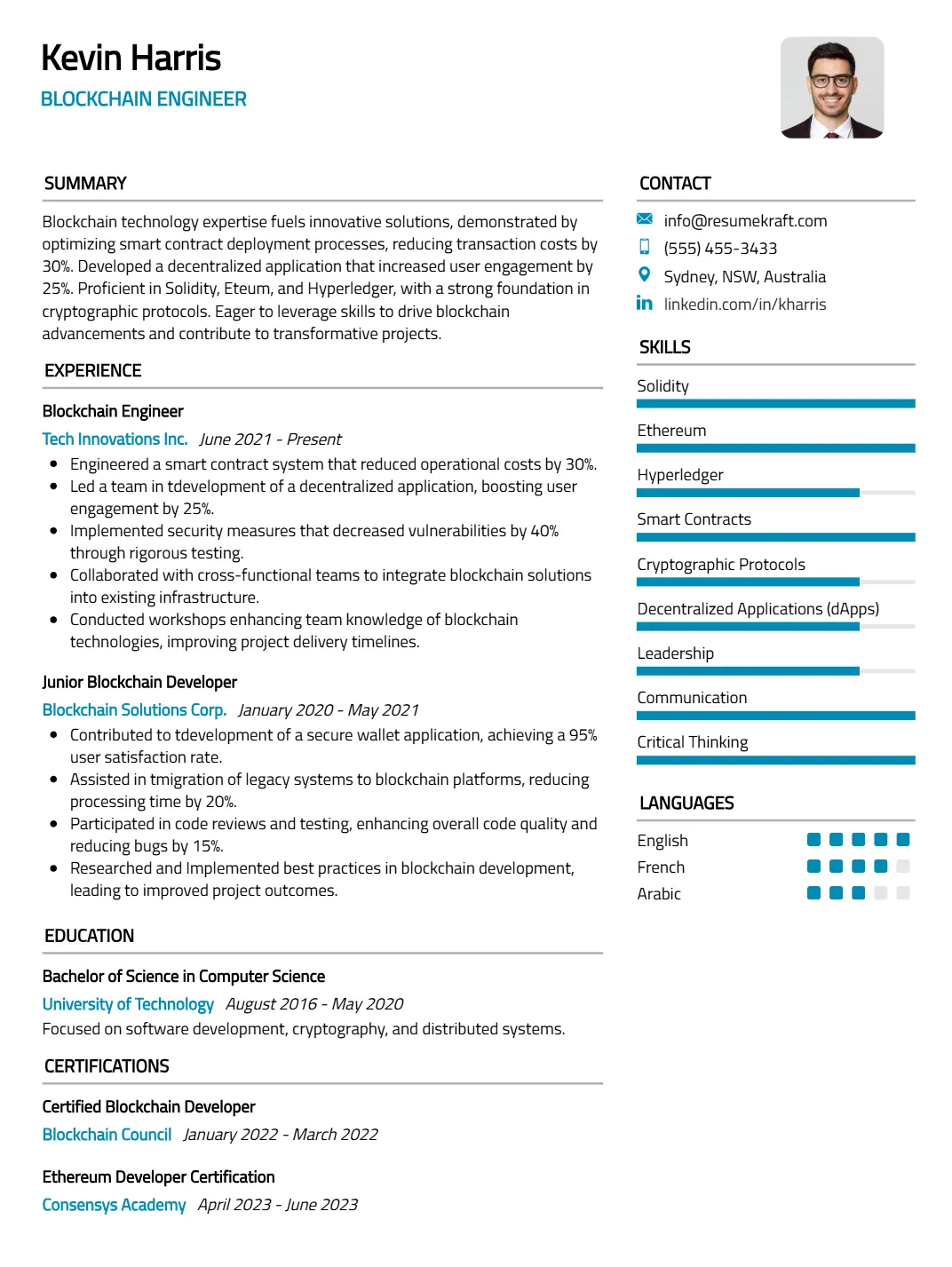

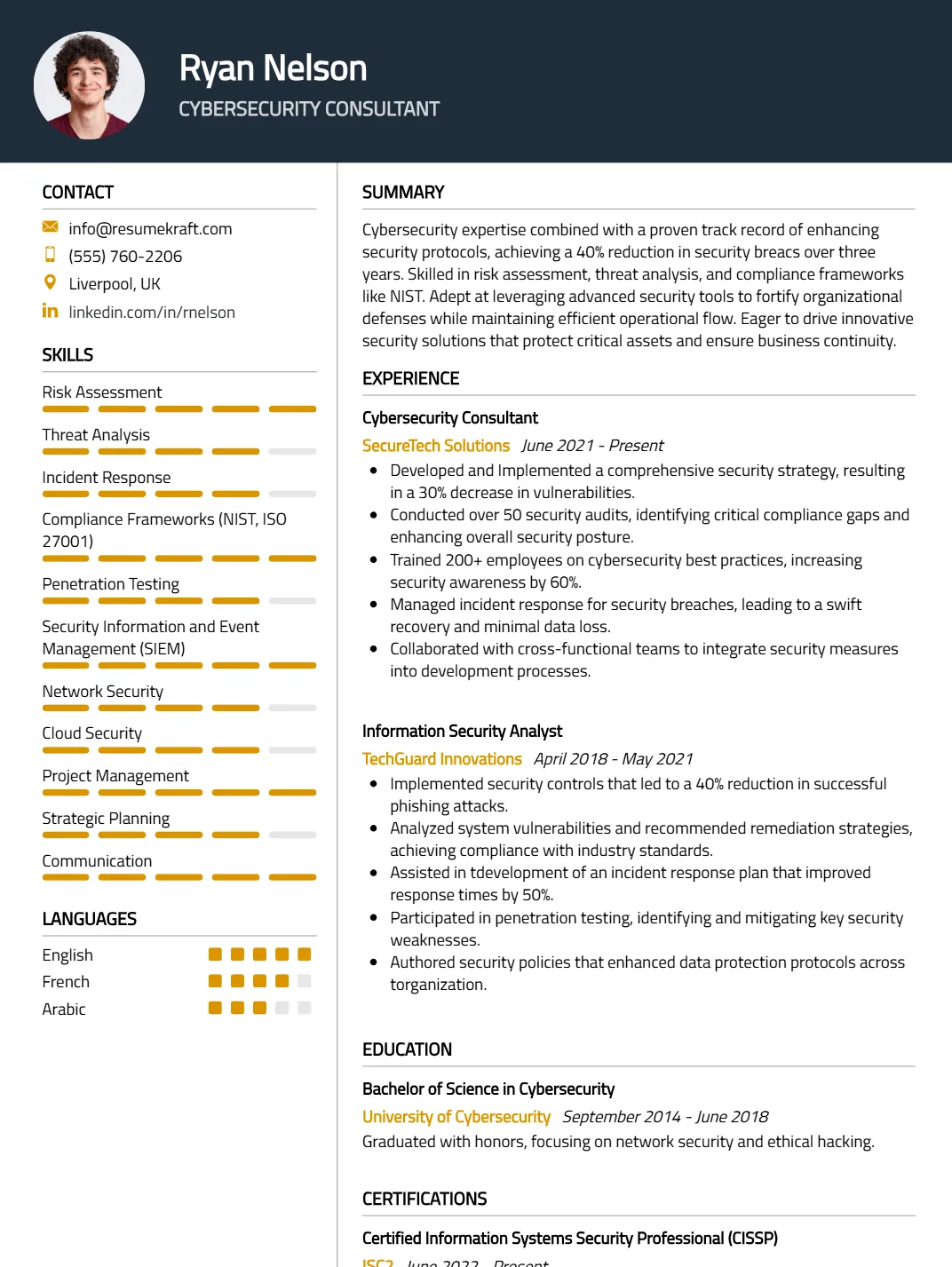

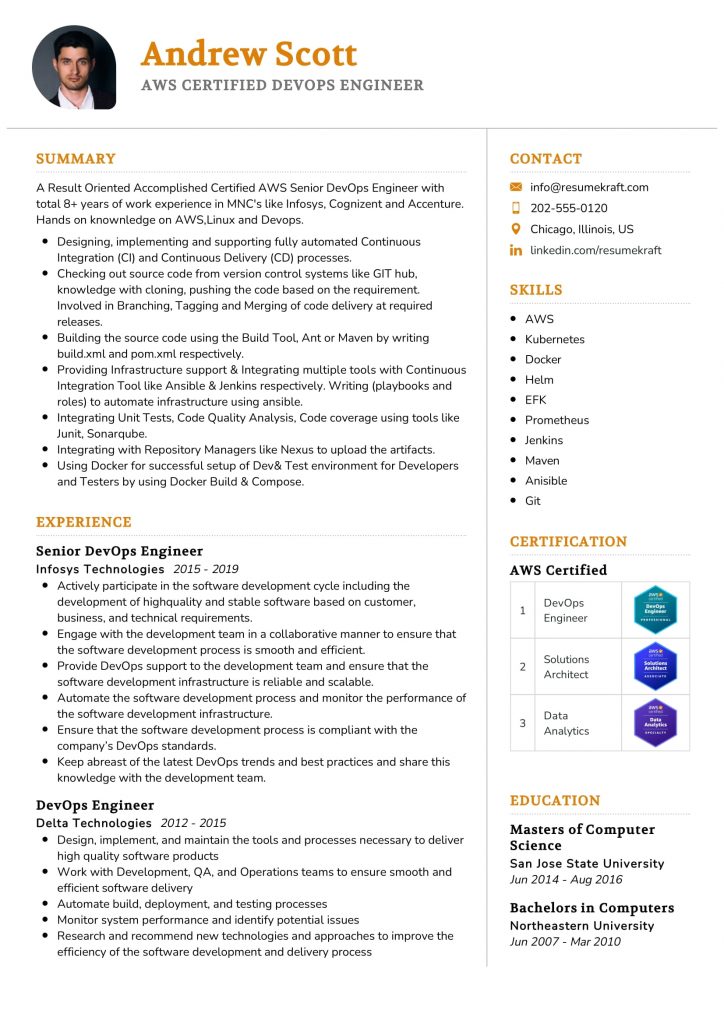

Guide to Prepare Resume for Government Jobs in India

A well-crafted resume can truly set you apart when you’re applying for government jobs in India. Every recruiting body is on the lookout for formats that demonstrate clarity, compliance, and alignment with government standards. Here’s a step-by-step guide to help your application shine.

- Use the Right Resume Format

Government recruitment panels typically favor reverse-chronological resumes that provide complete details without any unexplained gaps. Opt for an ATS-friendly template that emphasizes clarity and compliance. Check out some proven formats here: Resume formats.

- Add a Clear Header and Objective

Kick things off with a header that includes your name, contact information, and a concise career objective tailored to the specific role you’re aiming for. A well-crafted summary can really grab the attention of recruiters.

- Detailed Work History

List your job experiences in reverse chronological order. For each position, include your job title, employer, location, and the duration of your employment. Use bullet points to highlight your projects and achievements, and quantify your impact whenever you can (for example, “Managed a team of 15; improved project delivery by 20%”). For more inspiration, check out: Resume examples.

- Highlight Skills Relevant to the Public Sector

Emphasize skills that are crucial for government work, such as compliance, teamwork, project management, leadership, and technical expertise. Incorporate keywords from the job description to enhance your resume’s visibility with ATS. Some examples include:

– Regulatory compliance

– Data analysis

– Public speaking

– Disaster response (for IAS, IPS)

– Budget management

- Showcase Educational Qualifications

Start with your highest degree, including the institution and year of completion. Don’t forget to mention any awards or scholarships you’ve received.

- Certifications and Training

List any certifications that are directly relevant to the job you’re pursuing, such as computer skills (CCC, MS Office), technical licenses, or sector-specific courses.

- Achievements and Results

Highlight measurable outcomes (like “Reduced backlog by 30%” or “Managed a ₹5Cr budget”). This showcases your leadership and the impact you’ve made.

- Cover Letters for Government Positions

When writing your cover letter, make sure it complements your resume and highlights your suitability for the role. For samples, see: Cover letters.

- Use an AI Resume Builder

AI-powered tools can help polish formatting and wording and improve keyword matching for ATS screening. Try automated suggestions here: AI resume builder

Conclusion

Government jobs in India aren’t just about the appealing salaries; they come with amazing benefits and a chance to make a real impact. To snag one of these esteemed positions, you’ll need to dedicate yourself to exam prep and put together a well-crafted resume that highlights your skills and accomplishments. With the right approach and the resources mentioned earlier, your dream of a fulfilling career in public service is totally achievable!
