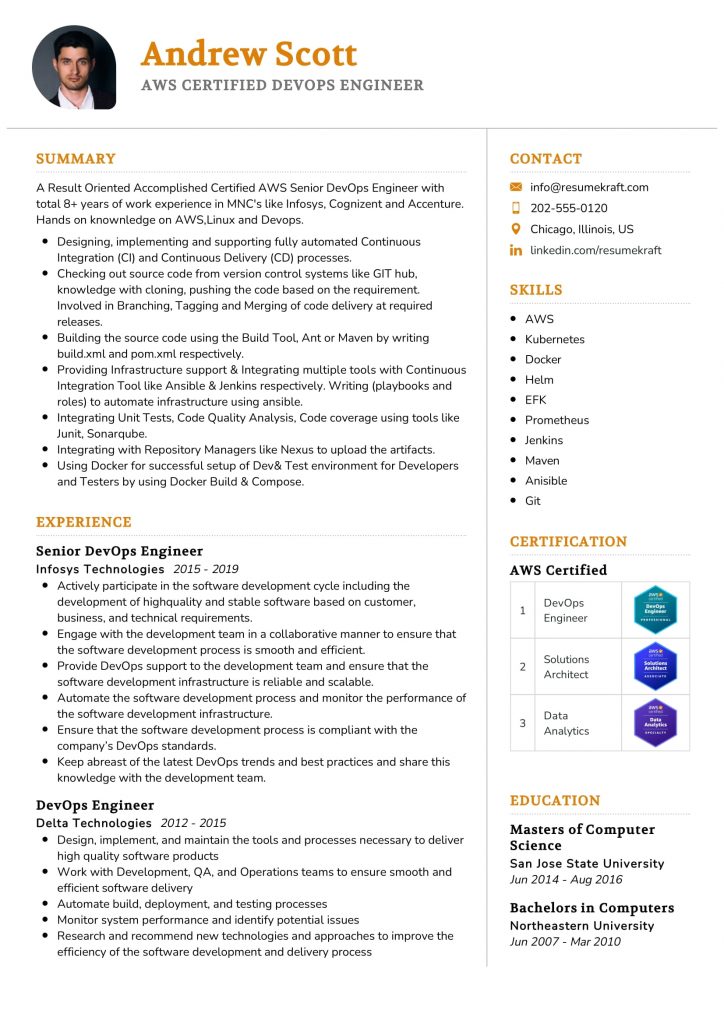

53 Resume Synonyms for Reviewed To Use On Your Resume

Using the word “Reviewed” on resumes has become a common practice, yet its overuse can dilute the impact of your accomplishments. While this term may accurately describe your role in assessing documents or processes, relying on it too heavily suggests a lack of creativity and fails to highlight the nuanced skills you possess. Hiring managers are inundated with resumes, and repetitive language can lead them to overlook your unique qualifications. A varied vocabulary not only keeps your resume engaging but also allows you to showcase your expertise more dynamically. It conveys your ability to communicate effectively and adapt to different contexts, both of which are valuable in any professional setting. This comprehensive guide will delve into the significance of using synonyms effectively, offering alternatives to “Reviewed” that better capture the essence of your contributions while maintaining clarity and impact. By diversifying your language, you can illustrate a broader skill set and ultimately make a more compelling case for your candidacy.

- Why Synonyms for “Reviewed” Matter on Your Resume

- The Complete List: 53 Resume Synonyms for Reviewed

- Strategic Synonym Selection by Industry

- Power Combinations: Advanced Synonym Usage

- Common Mistakes to Avoid

- Quantification Strategies for Maximum Impact

- Industry-Specific Example Sentences

- Advanced ATS Optimization Techniques

- Tailoring Synonyms to Career Level

- The Psychology of Leadership Language

- Final Best Practices

- Key Takeaways for Strategic Synonym Usage

- Frequently Asked Questions

  - How many different synonyms should I use in one resume?

  - Can I use the same synonym multiple times if it fits different contexts?

  - Should I always replace ‘Reviewed’ with a synonym?

  - How do I know which synonym is most appropriate for my industry?

  - Do synonyms really make a difference in getting interviews?

- Related Resume Synonym Guides

Why Synonyms for “Reviewed” Matter on Your Resume

Using synonyms for “Reviewed” on your resume matters for several reasons. First, the word is heavily used on professional resumes, and repeating it makes your bullets read as monotonous and predictable. Second, a single descriptor loses specificity: “evaluated,” “analyzed,” and “assessed” each convey a different kind of review, so choosing the right one sharpens the accomplishment you describe. Third, varied but relevant terminology can help your resume match the keywords an Applicant Tracking System (ATS) looks for, since a job posting may use a term other than “reviewed.” Finally, varied verbs help you build a more dynamic narrative around your contributions, and they demonstrate attention to detail and communication skill.

The Complete List: 53 Resume Synonyms for Reviewed

Here’s our comprehensive collection of “Reviewed” alternatives, organized for easy reference:

| Synonym | Best Context | Professional Level |
|---|---|---|
| Evaluated | Quality assurance | Mid-level |
| Assessed | Performance analysis | Mid-level |
| Analyzed | Data interpretation | Mid-level |
| Examined | Audit processes | Senior |
| Scrutinized | Compliance checks | Senior |
| Audited | Financial assessments | Senior |
| Inspected | Product quality | Mid-level |
| Reviewed | Document verification | Entry-level |
| Surveyed | Market research | Mid-level |
| Critiqued | Creative projects | Mid-level |
| Validated | Process improvement | Senior |
| Appraised | Investment opportunities | Senior |
| Verified | Data accuracy | Entry-level |
| Investigated | Incident analysis | Senior |
| Compared | Benchmarking | Mid-level |
| Monitored | Project tracking | Mid-level |
| Cataloged | Inventory management | Entry-level |
| Observed | Field studies | Entry-level |
| Checked | Process compliance | Entry-level |
| Cross-checked | Data verification | Mid-level |
| Summarized | Reporting | Entry-level |
| Concluded | Research findings | Senior |
| Reflected | Strategic planning | Senior |
| Tested | Software development | Mid-level |
| Gathered insights | Market analysis | Mid-level |
| Highlighted | Key findings | Mid-level |
| Outlined | Project scopes | Mid-level |
| Clarified | Technical documentation | Mid-level |
| Identified | Risk assessment | Mid-level |
| Dissected | Complex issues | Senior |
| Presented findings | Board meetings | Executive |
| Facilitated | Team discussions | Mid-level |
| Clarified | Process workflows | Mid-level |
| Synthesized | Research data | Senior |
| Forecasted | Future trends | Senior |
| Articulated | Strategic objectives | Executive |
| Highlighted | Key performance indicators | Mid-level |
| Correlated | Data relationships | Senior |
| Specified | Requirements gathering | Mid-level |
| Elucidated | Complex concepts | Senior |
| Clarified | Stakeholder expectations | Mid-level |
| Disclosed | Findings in reports | Senior |
| Corroborated | Information accuracy | Senior |
| Reviewed | Compliance documents | Entry-level |
| Explored | Potential improvements | Mid-level |
| Highlighted | Critical issues | Senior |
| Presented | Data summaries | Mid-level |
| Documented | Process evaluations | Entry-level |
| Annotated | Research papers | Mid-level |
| Compiled | Information resources | Entry-level |
| Summarized | Annual reviews | Senior |
| Cataloged | Research findings | Entry-level |
| Clarified | Project objectives | Mid-level |
| Evaluated | Program effectiveness | Senior |
| Reviewed | Performance metrics | Entry-level |

Strategic Synonym Selection by Industry

Strategic word choice helps you tailor your resume to a specific industry, because different sectors prioritize distinct qualities and vocabulary. Here are five industry categories and the terms each tends to favor alongside your review verbs:

- Technology: Terms such as “innovation,” “agility,” and “systems thinking” resonate well. Technology companies value innovation and systematic thinking to drive advancements and maintain a competitive edge.

- Healthcare: Terms like “precision,” “collaborative care,” and “patient-centered” are essential. Healthcare emphasizes precision in treatment and collaboration among professionals to ensure the best patient outcomes.

- Finance: Preferred terms include “analytical,” “risk management,” and “strategic forecasting.” The finance industry values analytical skills and strategic planning to navigate market complexity and minimize risk.

- Consulting: Terms like “problem-solving,” “stakeholder engagement,” and “change management” are key. Consulting firms prioritize problem-solving and change management to help clients adapt and thrive in evolving environments.

- Manufacturing: Terms such as “process optimization,” “lean management,” and “quality assurance” are vital. The manufacturing sector focuses on process optimization and quality assurance to improve efficiency and product reliability.

Power Combinations: Advanced Synonym Usage

Advanced synonym usage can significantly enhance your resume by demonstrating your professional growth and adaptability.

To illustrate career advancement, use increasingly sophisticated synonyms. For example, instead of saying “managed a team,” you could state “led a cross-functional team,” then elevate it to “orchestrated a high-performing team.” This progression showcases not just your experience but your evolving leadership style.


When changing industries, it’s crucial to use synonyms that translate your experience. For instance, if you were a “sales representative” in retail, transitioning to tech could be framed as “client relationship manager,” emphasizing transferable skills like customer engagement and solutions delivery.

Choosing synonyms that fit the situation can make your achievements resonate more. Instead of “improved efficiency,” use “streamlined operations” in a manufacturing context but say “enhanced user experience” for a tech role. This tailored approach demonstrates your understanding of industry language.

Common Mistakes to Avoid

Each pair below shows a weak resume bullet followed by a stronger rewrite.

- Instead of: “Oversaw the entire project review process, ensuring every detail was perfect.” Try: “Assisted in reviewing project submissions to ensure compliance with standards.”

- Instead of: “Evaluated team performance during the project review meetings.” Try: “Critically assessed team progress in weekly project update sessions.”

- Instead of: “Reviewed reports, reviewed budgets, and reviewed timelines.” Try: “Reviewed reports, analyzed budgets, and assessed timelines for accuracy.”
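Repetition like the kind shown above can also be spotted mechanically before a human reader notices it. The following is a minimal sketch, not a standard tool: the function name `repeated_words`, the four-letter minimum, and the sample draft are all assumptions chosen for illustration.

```python
import re
from collections import Counter

def repeated_words(bullets, min_count=2):
    """Return words longer than 3 letters that occur at least min_count times."""
    words = []
    for bullet in bullets:
        # Lowercase the bullet and keep alphabetic words only, skipping short ones
        words.extend(w for w in re.findall(r"[a-z]+", bullet.lower()) if len(w) > 3)
    counts = Counter(words)
    return {word: count for word, count in counts.items() if count >= min_count}

# Hypothetical draft bullet taken from the repetition example above
draft = ["Reviewed reports, reviewed budgets, and reviewed timelines."]
print(repeated_words(draft))  # {'reviewed': 3}
```

Any word the check flags is a candidate for one of the alternatives in the list, provided the replacement still describes what you actually did.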

Quantification Strategies for Maximum Impact

Quantification shows the impact of your work. Pair each synonym with a measurable result so the reader can see what your contribution achieved. Here are three categories to consider:

- Team-Focused Synonyms: Highlight your role in managing teams, specifying the number of people involved and the outcomes achieved. For example, instead of saying “led a team,” say “led a team of 15 over 12 months, resulting in a 25% increase in productivity.”

- Project-Focused Synonyms: When discussing projects, include project value, timeline, and success metrics. For instance, rather than stating “managed a project,” use “managed a $500K project over six months, delivering on time and achieving a 30% cost reduction.”

- Strategic-Focused Synonyms: Emphasize strategic initiatives by showcasing before and after metrics. Instead of “developed a strategic plan,” say “developed a strategic plan that increased market share from 10% to 15% within one year, impacting revenue growth by $2 million.”

Industry-Specific Example Sentences

- Technology: Conducted a comprehensive analysis of system performance, uncovering a 15% inefficiency and implementing fixes that increased speed by 30%.

- Technology: Evaluated security protocols across multiple platforms, leading to a 40% reduction in vulnerabilities and enhanced user trust.

- Technology: Assessed software functionality through user feedback, resulting in a 25% improvement in user satisfaction scores post-implementation.

- Technology: Audited code quality and compliance, decreasing bug reports by 50% within three months through targeted training sessions.

- Healthcare: Analyzed patient care protocols, which improved treatment adherence rates by 20%, resulting in better health outcomes for chronic disease patients.

- Healthcare: Inspected medical records management processes, leading to a 30% decrease in retrieval times and enhancing overall operational efficiency.

- Healthcare: Critiqued the implementation of new health technologies, facilitating training that improved staff proficiency by 35% within the first quarter.

- Healthcare: Reviewed clinical trial data integrity, ensuring compliance with regulations, and contributed to a successful study publication.

- Business/Finance: Appraised financial reports for accuracy, identifying discrepancies that improved budget forecasting by 15% for the upcoming fiscal year.

- Business/Finance: Scrutinized investment proposals, leading to the selection of projects that yielded a 25% increase in ROI over two years.

- Business/Finance: Evaluated market trends and competitor performance, providing insights that shaped a new strategy resulting in a 10% growth in market share.

- Business/Finance: Examined audit findings and implemented corrective actions that reduced compliance risks by 40% within six months.

- Education: Analyzed curriculum effectiveness, leading to a 20% increase in student engagement and improved test scores across multiple subjects.

- Education: Assessed teaching methodologies through classroom observations, resulting in a 30% improvement in student retention rates.

- Education: Reviewed academic performance data, identifying key areas for intervention that boosted graduation rates by 15% over three years.

- Education: Evaluated the impact of extracurricular programs, leading to enhanced student participation and a 25% increase in overall program satisfaction.

Advanced ATS Optimization Techniques

To effectively optimize your resume for Applicant Tracking Systems (ATS) using synonyms, employ the following techniques:

- Keyword Density Strategy: Aim for 2-3 different synonyms per job role to maintain keyword density without overstuffing. For example, if the job requires “project management,” also include terms like “project coordination” and “program oversight.” This variety enhances your chances of matching against ATS algorithms.

- Semantic Clustering: Group related synonyms to create a comprehensive keyword family. For instance, if you’re in sales, cluster terms like “sales,” “business development,” and “client relations.” This approach not only improves readability but also increases keyword relevance across different ATS.

- Job Description Matching: Analyze job postings and incorporate similar synonyms found in them. If a posting mentions “data analysis,” ensure your resume includes terms like “data interpretation” and “analytics.” This strategy aligns your qualifications with the employer’s terminology, enhancing ATS compatibility.
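The job description matching step above can be sketched in a few lines of Python. This is an illustrative sketch only: real ATS products do not publish their matching logic, and the keyword family, the function names `count_phrases` and `missing_keywords`, and the sample texts are all assumptions made for the example.

```python
import re

# Hypothetical keyword family; replace with terms from your own target posting
KEYWORD_FAMILY = ["data analysis", "data interpretation", "analytics"]

def count_phrases(text, phrases):
    """Count case-insensitive, whole-word occurrences of each phrase in text."""
    lowered = text.lower()
    counts = {}
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
        counts[phrase] = len(re.findall(pattern, lowered))
    return counts

def missing_keywords(job_posting, resume, phrases):
    """Return phrases that appear in the posting but never in the resume."""
    in_posting = count_phrases(job_posting, phrases)
    in_resume = count_phrases(resume, phrases)
    return [p for p in phrases if in_posting[p] > 0 and in_resume[p] == 0]

# Made-up sample texts for illustration
posting = "We need strong data analysis skills and experience with analytics tools."
resume = "Performed data interpretation and data analysis for quarterly reports."
print(missing_keywords(posting, resume, KEYWORD_FAMILY))  # ['analytics']
```

A simple exact-phrase count like this only approximates what a real system might do, but it is enough to reveal a term the posting uses that your resume never mentions.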

Tailoring Synonyms to Career Level

When tailoring synonyms to career levels, it’s essential to align language with the expectations and responsibilities of each position.

- Entry-Level Professionals: Focus on collaborative and learning-oriented synonyms like “assisted,” “contributed,” “collaborated,” and “supported.” This language conveys eagerness to learn and work with others, appealing to employers seeking fresh talent willing to grow.

- Mid-Level Managers: Emphasize direct management and project leadership with terms such as “led,” “coordinated,” “oversaw,” and “managed.” These words highlight responsibility and the ability to drive results, appealing to organizations looking for candidates who can bridge strategy and execution.

- Senior Executives: Use strategic and transformational language like “transformed,” “spearheaded,” “envisioned,” and “orchestrated.” This choice reflects a focus on high-level decision-making and long-term impact, resonating with stakeholders who value leadership that drives organizational change.

By tailoring language to the appropriate career level, candidates can effectively communicate their relevance and potential value to prospective employers.

The Psychology of Leadership Language

The psychology of leadership language significantly affects how hiring managers perceive candidates. By selecting the right synonyms, candidates can evoke specific psychological responses that align with the traits they want to project.

- Action-Oriented Words: Terms like “achieve,” “drive,” and “execute” convey focus on results and decisiveness, appealing to managers seeking goal-oriented leaders.

- Collaborative Words: Words such as “partner,” “collaborate,” and “engage” indicate strong team-building skills, resonating with organizations that prioritize collective success.

- Innovation Words: Synonyms like “innovate,” “strategize,” and “envision” reflect strategic thinking, attracting companies that value forward-thinking leaders.

- Nurturing Words: Phrases such as “mentor,” “support,” and “develop” showcase a commitment to people development, aligning with cultures that emphasize employee growth.

By understanding the psychological impact of these word choices, candidates can tailor their language to resonate with the company culture, enhancing their appeal to hiring managers.

Final Best Practices

To effectively use synonyms in your resume, follow these best practices:

- The 60-Second Rule: Your resume should tell a compelling story that can be conveyed in 60 seconds. Choose synonyms that maintain clarity and impact. For instance, instead of “managed,” consider “led” or “oversaw” to convey leadership without losing specificity.

- The Mirror Test: Ensure the language you use resonates with your natural speaking style. If “collaborated” doesn’t feel authentic, opt for “worked together with” to maintain your voice while ensuring professionalism.

- The Peer Review: Have colleagues review your synonym choices. They can provide valuable feedback on whether your language feels genuine and effective, helping you refine your word selection.

- Measuring Success: Track your application response rates to gauge the effectiveness of your synonym usage. If certain terms lead to more interviews, consider incorporating them more broadly while balancing authenticity.
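Tracking response rates, as the last practice above suggests, comes down to simple arithmetic: interviews divided by applications sent. The sketch below assumes hypothetical tallies for two resume versions; the version names and numbers are invented for illustration.

```python
def response_rate(applications, interviews):
    """Return the share of applications that led to an interview."""
    if applications == 0:
        return 0.0  # avoid dividing by zero before any applications are sent
    return interviews / applications

# Hypothetical tallies: (applications sent, interview invitations)
versions = {
    "reviewed-heavy": (40, 2),
    "varied-verbs": (40, 5),
}

for name, (sent, invited) in versions.items():
    print(f"{name}: {response_rate(sent, invited):.1%}")
# reviewed-heavy: 5.0%
# varied-verbs: 12.5%
```

With samples this small, a difference between versions is suggestive at best; the roles applied for, timing, and other resume changes all affect the outcome as well.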

Key Takeaways for Strategic Synonym Usage

- Utilize synonyms for ‘Reviewed’ to enhance clarity in your resume, ensuring your formatting aligns with professional standards found in various resume templates for a polished presentation.

- Incorporate synonyms that fit the context of your achievements, making your experiences stand out by referencing effective resume examples that demonstrate powerful language use.

- Leverage an AI resume builder to suggest alternative verbs for ‘Reviewed’, allowing you to fine-tune your language and tailor your application for specific roles.

- Choose action-oriented synonyms that resonate with the industry you’re targeting, using resume templates that highlight these dynamic verbs for maximum impact.

- Showcase your analytical skills by selecting synonyms that reflect critical thinking and thoroughness, enhancing your profile with strong resume examples that illustrate such competencies.

- Vary your language throughout your resume by incorporating synonymous terms, ensuring consistent formatting that aligns with the best practices suggested in professional resume templates.


Frequently Asked Questions

How many different synonyms should I use in one resume?

It’s advisable to use 2-3 different synonyms for ‘Reviewed’ throughout your resume. This variety enhances readability and prevents monotony, while also appealing to both Applicant Tracking Systems (ATS) and human readers. However, ensure that the synonyms accurately reflect your actions and responsibilities in each context. Overusing synonyms may lead to confusion, so it’s essential to maintain clarity and relevance in your descriptions. Balance is key—diversify your vocabulary without straying from the message you want to convey.

Can I use the same synonym multiple times if it fits different contexts?

Yes, you can use the same synonym more than once if it accurately fits different contexts. Consistency in terminology can reinforce your expertise in specific areas, while also maintaining clarity. However, be mindful of the overall flow of your resume; repetition in close proximity might reduce impact. Instead, consider using variations that align with the specific tasks or responsibilities you’re describing. This approach not only showcases your versatility but also keeps your resume engaging and tailored to the various roles you are targeting.

Should I always replace ‘Reviewed’ with a synonym?

No, it’s not necessary to replace ‘Reviewed’ in every instance. Sometimes, the word itself may be the most straightforward and effective choice to convey your actions. If the term succinctly describes what you did and fits the overall tone of your resume, then it’s perfectly acceptable to keep it. Focus on clarity and impact; if using a synonym adds value or enhances the description, then consider making the change. The key is to maintain a professional and coherent narrative throughout your resume.

How do I know which synonym is most appropriate for my industry?

To determine the most appropriate synonym for your industry, research industry-specific terminology and language. Analyze job postings, professional profiles, and industry publications to identify commonly used terms that convey similar meanings to ‘Reviewed’. Engage with professionals in your field or seek feedback from mentors who can provide insights into best practices. Tailoring your vocabulary to align with industry norms not only enhances your credibility but also demonstrates your understanding of the sector, making your resume more appealing to potential employers.

Do synonyms really make a difference in getting interviews?

Yes, thoughtful synonym use can improve your chances of getting interviews. A well-crafted resume with varied language engages the reader and showcases your communication skills and attention to detail. Synonyms can also highlight your accomplishments and responsibilities more precisely, making your qualifications stand out. In addition, including relevant keywords improves your resume's chances of passing ATS filters. Overall, careful word choice enhances both the clarity and the appeal of your resume, which can lead to more interview opportunities.

Related Resume Synonym Guides

Exploring synonyms for commonly overused resume words is essential for crafting a compelling professional narrative. By strategically choosing varied language throughout your resume, you can effectively highlight your unique skills and experiences, making a stronger impact on potential employers and standing out in a competitive job market.