Preparing for a Data Modeling interview is an exciting journey, as this role sits at the intersection of data analysis and database design. Data modelers play a crucial role in defining how data is structured, stored, and accessed, which directly impacts an organization’s ability to derive insights and make informed decisions. Thorough preparation is vital, because the interview tests not only your technical skills but also your understanding of business needs. This comprehensive guide covers essential concepts such as normalization, entity-relationship diagrams, and data warehousing, along with practical interview questions to help you stand out and demonstrate your expertise in data modeling.

What to Expect in a Data Modeling Interview

In a Data Modeling interview, candidates can expect a mix of technical questions and case studies. Interviews may be conducted by data architects, data engineers, or hiring managers who specialize in data analytics. The structure typically includes an initial screening, followed by in-depth technical assessments that evaluate the candidate’s understanding of data modeling concepts, normalization, and denormalization techniques. Candidates may also face practical exercises where they design a data model based on specific business requirements. Behavioral questions assessing teamwork and problem-solving skills are also common.

Data Modeling Interview Questions For Freshers

Data modeling interview questions for freshers focus on essential concepts and practices that help in structuring data effectively. Candidates should master fundamental topics such as entity-relationship modeling, normalization, data types, and the basics of SQL to excel in these interviews.

1. What is data modeling?

Data modeling is the process of creating a visual representation of a complex data system. It helps in defining the structure, relationships, and constraints of the data within a system. This representation can assist in understanding how data is stored, accessed, and manipulated, making it easier to design databases and applications.

2. What are the different types of data models?

- Conceptual Data Model: This is a high-level representation that outlines the structure of the data without considering how it will be implemented.

- Logical Data Model: This model provides a more detailed view of the data structure, including entities, attributes, and relationships, but is still independent of physical considerations.

- Physical Data Model: This model describes how the data will be physically stored in the database, including tables, indexes, and data types.

3. What is normalization in data modeling?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing a database into smaller tables and defining relationships between them. The main goals of normalization are to eliminate duplicate data, ensure data dependencies make sense, and facilitate data integrity.

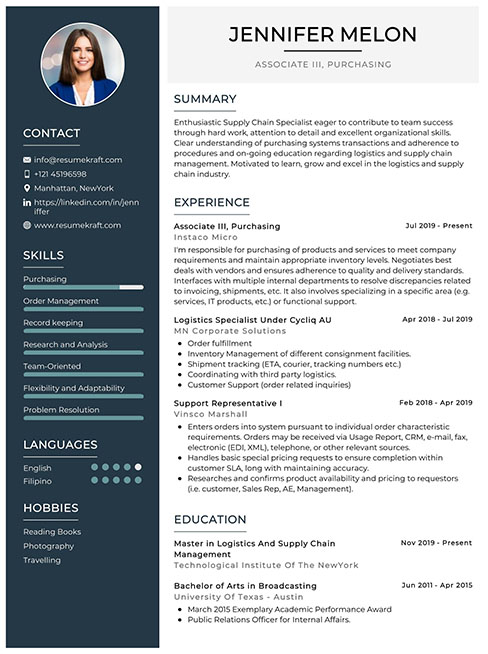

Build your resume in just 5 minutes with AI.

4. Can you explain the different normal forms?

- First Normal Form (1NF): Ensures that each column contains atomic (indivisible) values, that there are no repeating groups, and that each row can be uniquely identified.

- Second Normal Form (2NF): Builds on 1NF by ensuring that every non-key attribute is fully functionally dependent on the entire primary key, with no partial dependency on just part of a composite key.

- Third Normal Form (3NF): Builds on 2NF by removing transitive dependencies, so that non-key attributes depend only on the primary key and not on other non-key attributes.
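To make the normal forms concrete, here is a minimal sketch using Python's built-in `sqlite3` module (an illustrative choice; any relational database works the same way). The flat order rows and the `customers`/`orders` tables are hypothetical. The flat table violates 3NF because customer details depend on `customer_id` rather than on the order key; splitting them out and joining back shows that the decomposition loses no information.

```python
import sqlite3

# Hypothetical unnormalized rows: (order_id, customer_id, name, city, order_date, amount).
# name and city depend on customer_id, not on order_id: a transitive dependency.
flat_rows = [
    (1, 101, "Asha", "Pune", "2024-01-05", 250.0),
    (2, 101, "Asha", "Pune", "2024-02-11", 120.0),
    (3, 102, "Ravi", "Delhi", "2024-02-12", 75.5),
]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Customer attributes now live in exactly one place.
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        city        TEXT NOT NULL
    );
    -- Orders keep only attributes that depend on order_id, plus a foreign key.
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        order_date  TEXT NOT NULL,
        amount      REAL NOT NULL
    );
""")

# Decompose: the set removes repeated customer details before loading.
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    {(cid, name, city) for _, cid, name, city, _, _ in flat_rows},
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [(oid, cid, date, amt) for oid, cid, _, _, date, amt in flat_rows],
)

# A join reproduces the original rows, so the decomposition is lossless.
rebuilt = conn.execute("""
    SELECT o.order_id, c.customer_id, c.name, c.city, o.order_date, o.amount
    FROM orders o JOIN customers c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
print(rebuilt == flat_rows)  # True
```

Each customer's city is now stored once, so a change of address is a single-row update instead of one update per order.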

5. What is an entity-relationship diagram (ERD)?

An entity-relationship diagram (ERD) is a visual representation of the entities within a system and their relationships. In the classic Chen notation, entities are drawn as rectangles, attributes as ovals, and relationships as diamonds; other notations, such as crow's foot, mark cardinality on the connecting lines instead. ERDs are essential for designing databases because they provide a clear map of how data is structured.

6. What are primary keys and foreign keys?

- Primary Key: A column (or set of columns) that uniquely identifies each record in a database table. No two records can share the same primary key value, and the value cannot be NULL.

- Foreign Key: A field in one table that links to the primary key of another table, establishing a relationship between the two tables.

Primary and foreign keys are crucial for maintaining data integrity and relationships in relational databases.
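A quick way to see both keys at work is the following sketch with Python's `sqlite3` module; the `departments` and `employees` tables are hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON` is issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite does not enforce foreign keys unless this pragma is enabled per connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE departments (
        dept_id INTEGER PRIMARY KEY,   -- primary key: unique per row
        name    TEXT NOT NULL
    );
    CREATE TABLE employees (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        dept_id INTEGER REFERENCES departments(dept_id)  -- foreign key
    );
""")
conn.execute("INSERT INTO departments VALUES (1, 'Finance')")
conn.execute("INSERT INTO employees VALUES (10, 'Meera', 1)")  # valid reference

def try_insert(sql):
    """Return None on success, or the IntegrityError message on failure."""
    try:
        conn.execute(sql)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)

dup_pk = try_insert("INSERT INTO departments VALUES (1, 'Sales')")      # duplicate key
orphan = try_insert("INSERT INTO employees VALUES (11, 'Karan', 99)")  # no dept 99
print(dup_pk)  # reports a UNIQUE constraint violation
print(orphan)  # reports a FOREIGN KEY constraint violation
```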

7. What is a relationship in data modeling?

A relationship in data modeling defines how two entities are related to each other. There are several types of relationships: one-to-one, one-to-many, and many-to-many. Understanding these relationships is vital for designing a robust database structure that accurately reflects real-world interactions between data entities.

8. What is denormalization?

Denormalization is the process of intentionally introducing redundancy into a database design to improve read performance. By combining tables or adding redundant data, denormalization can reduce the number of joins needed during queries, thus speeding up data retrieval at the cost of potential data anomalies and increased storage requirements.
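The trade-off described above can be sketched with Python's `sqlite3` module. The tables and data are hypothetical: a reporting table copies the customer name onto every order so reads need no join, but the copy goes stale when the source changes unless something refreshes it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ravi');
    INSERT INTO orders VALUES (100, 1, 250.0), (101, 1, 120.0), (102, 2, 75.5);

    -- Denormalized reporting table: the customer name is copied onto every order.
    CREATE TABLE order_report AS
        SELECT o.order_id, o.customer_id, c.name AS customer_name, o.amount
        FROM orders o JOIN customers c ON c.customer_id = o.customer_id;
""")

# Reads are join-free.
report = conn.execute(
    "SELECT customer_name, SUM(amount) FROM order_report "
    "GROUP BY customer_name ORDER BY customer_name"
).fetchall()

# The cost: an update to the source is not propagated to the copy
# (an update anomaly) unless an ETL job or trigger refreshes it.
conn.execute("UPDATE customers SET name = 'Asha K' WHERE customer_id = 1")
stale = conn.execute(
    "SELECT DISTINCT customer_name FROM order_report WHERE customer_id = 1"
).fetchone()[0]
print(report)  # [('Asha', 370.0), ('Ravi', 75.5)]
print(stale)   # Asha
```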

9. What are data types, and why are they important in data modeling?

Data types define the kind of data that can be stored in a column of a database table, such as integers, strings, dates, and binary data. They are important because they determine how data can be used, ensure data integrity by preventing invalid data entries, and affect the performance of database operations.

10. How do you handle data integrity in a database?

- Use of Primary and Foreign Keys: Establish relationships and constraints to ensure valid data.

- Constraints: Implement rules such as NOT NULL, UNIQUE, and CHECK to enforce data integrity.

- Triggers: Use database triggers to enforce business rules and automate actions based on changes.

Maintaining data integrity is crucial for accurate and reliable data within a database.
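The constraint and trigger techniques above can be sketched with Python's `sqlite3` module. The `products` and `price_audit` tables and the audit rule are hypothetical examples of a business rule, not a prescribed design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (
        product_id INTEGER PRIMARY KEY,
        sku        TEXT NOT NULL UNIQUE,            -- no missing or duplicate SKUs
        price      REAL NOT NULL CHECK (price >= 0) -- no negative prices
    );
    CREATE TABLE price_audit (
        product_id INTEGER,
        old_price  REAL,
        new_price  REAL
    );
    -- Hypothetical business rule: record every actual price change.
    CREATE TRIGGER trg_price_audit
    AFTER UPDATE OF price ON products
    WHEN OLD.price <> NEW.price
    BEGIN
        INSERT INTO price_audit VALUES (OLD.product_id, OLD.price, NEW.price);
    END;
""")
conn.execute("INSERT INTO products VALUES (1, 'SKU-001', 9.99)")
conn.execute("UPDATE products SET price = 12.49 WHERE product_id = 1")  # fires trigger

try:
    conn.execute("INSERT INTO products VALUES (2, 'SKU-002', -5)")  # violates CHECK
    check_rejected = False
except sqlite3.IntegrityError:
    check_rejected = True

audit_rows = conn.execute("SELECT * FROM price_audit").fetchall()
print(check_rejected)  # True
print(audit_rows)      # [(1, 9.99, 12.49)]
```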

11. What is a schema in database design?

A schema is a blueprint or architecture of how a database is structured, including the tables, fields, relationships, and constraints. It provides a framework for how data is organized and accessed, serving as a guide for database administrators and developers when designing and managing a database system.

12. What is the difference between a fact table and a dimension table?

- Fact Table: Contains quantitative data for analysis. It typically includes measures (such as amounts or counts) and foreign keys to dimension tables, and it is usually the largest table in the warehouse.

- Dimension Table: Contains descriptive attributes related to the facts. It is usually denormalized and provides context to the data in the fact table.

Understanding the distinction is essential for designing a data warehouse and performing effective data analysis.

13. What is an index in a database?

An index is a database object that improves the speed of data retrieval operations on a table at the cost of additional storage space and slower write operations. Indexes are created on specific columns to enhance search operations, allowing the database engine to find rows faster without scanning the entire table.
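You can watch an index change how the engine finds rows by asking SQLite for its query plan, as in this sketch with Python's `sqlite3` module. The `customers` table and `idx_customers_email` index are hypothetical, and the exact wording of the plan text varies between SQLite versions (for example `SCAN customers` versus `SCAN TABLE customers`).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO customers (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)

query = "SELECT * FROM customers WHERE email = ?"

def plan(sql, params):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)

before = plan(query, ("user42@example.com",))
conn.execute("CREATE INDEX idx_customers_email ON customers(email)")
after = plan(query, ("user42@example.com",))

print(before)  # a full table scan, e.g. "SCAN customers"
print(after)   # an index lookup, e.g. "SEARCH customers USING INDEX idx_customers_email (email=?)"
```

The extra storage and slower writes mentioned above come from SQLite maintaining `idx_customers_email` on every insert or update of `email`.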

14. Can you explain the role of SQL in data modeling?

SQL (Structured Query Language) is used to communicate with relational databases. It allows users to create and manage database schemas, perform data manipulation (insert, update, delete), and query data. SQL’s capabilities are essential for implementing data models and interacting with the data effectively.

15. What is a data dictionary?

A data dictionary is a centralized repository that contains metadata about the data within a database. It provides information about data types, relationships, constraints, and usage. A data dictionary is essential for understanding the structure and semantics of the data, facilitating better database management and use.
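Much of a data dictionary can be generated from the database catalog itself. This sketch uses Python's `sqlite3` module and SQLite's `PRAGMA table_info`; the `customers` table is hypothetical, and business descriptions of each column would still need to be written by hand.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL,
        created_at  TEXT DEFAULT CURRENT_TIMESTAMP
    );
""")

def data_dictionary(conn):
    """Build a simple data dictionary from SQLite's own catalog metadata."""
    entries = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info returns (cid, name, type, notnull, dflt_value, pk).
        # The table name comes from the catalog, not from user input.
        for _cid, col, col_type, notnull, default, pk in conn.execute(
            f"PRAGMA table_info({table})"
        ):
            entries.append({
                "table": table,
                "column": col,
                "type": col_type,
                "nullable": not notnull,
                "default": default,
                "primary_key": bool(pk),
            })
    return entries

for entry in data_dictionary(conn):
    print(entry)
```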

Here are 8 interview questions designed for freshers in Data Modeling. These questions cover fundamental concepts and basic practices that every aspiring data modeler should be familiar with.

16. What is data modeling and why is it important?

Data modeling is the process of creating a visual representation of information and data structures within a system. It is important because it helps to clarify the data requirements, establish relationships between data entities, and ensure that data is organized in a way that supports business processes. A well-structured data model enhances data integrity, reduces redundancy, and improves communication among stakeholders.

17. What are the different types of data models?

- Conceptual Data Model: This model provides a high-level overview of the data and its relationships without going into technical details.

- Logical Data Model: This model defines the structure of the data elements and their relationships, focusing on the logical structure without considering how the data will be physically implemented.

- Physical Data Model: This model describes how the data will be stored in the database, including table structures, indexes, and constraints.

Each type of data model serves a unique purpose and helps in different stages of the database design process.

18. What is normalization in data modeling?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing large tables into smaller, related tables and defining relationships between them. The main goals of normalization are to eliminate duplicate data, ensure data dependencies are logical, and simplify data management. Various normal forms (1NF, 2NF, 3NF, etc.) provide guidelines for achieving normalization.

19. Can you explain the difference between a primary key and a foreign key?

- Primary Key: A primary key is a unique identifier for each record in a database table. It ensures that no two rows have the same key value and cannot be null.

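- Foreign Key: A foreign key is a field in one table that references the primary key (or another unique key) of a row in another table, establishing a relationship between the two tables. Unlike a primary key, it can contain duplicate values, and it can be NULL unless a NOT NULL constraint is added.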

Understanding the distinction between primary and foreign keys is critical for establishing relationships and maintaining data integrity in relational databases.

20. What is a star schema in data modeling?

A star schema is a type of data model used in data warehousing that organizes data into facts and dimensions. In a star schema, a central fact table is connected to multiple dimension tables, resembling a star shape. This structure allows for efficient querying and reporting, as it simplifies the relationships and optimizes performance for analytical queries. Star schemas are commonly used in business intelligence applications due to their straightforward design.

21. What are the benefits of using an Entity-Relationship (ER) diagram?

- Visual Representation: ER diagrams provide a clear visual representation of data entities and their relationships, making it easier to understand the data model.

- Improved Communication: These diagrams facilitate communication among stakeholders by providing a common language to discuss data requirements and design.

- Documentation: ER diagrams serve as effective documentation for database design, helping future developers and analysts understand the structure and purpose of the data.

Using ER diagrams can greatly enhance the clarity and efficiency of the data modeling process.

22. What is denormalization, and when is it used?

Denormalization is the process of intentionally introducing redundancy into a database by merging tables or adding redundant data. It is used primarily to improve query performance by reducing the number of joins needed to retrieve data. Denormalization is often applied in data warehousing and reporting scenarios where read performance is prioritized over write performance. However, it should be done carefully to maintain data integrity and avoid inconsistencies.

23. What is a dimension table in a data warehouse?

A dimension table is a structure that categorizes facts and measures in order to enable users to answer business questions. Dimension tables contain attributes related to the data, such as time, location, product details, etc. They provide context to the data in fact tables and are typically denormalized to optimize query performance. Dimension tables allow for slice-and-dice analysis, enabling users to view data from different perspectives.

Data Modeling Intermediate Interview Questions

Data modeling interview questions for intermediate candidates focus on essential concepts such as normalization, denormalization, data integrity, and schema design. Candidates should understand how to design efficient databases, optimize performance, and ensure data consistency through practical applications and best practices in real-world scenarios.

24. What is normalization and why is it important in data modeling?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing large tables into smaller, related tables and defining relationships between them. The importance of normalization includes:

- Eliminating data redundancy: Minimizes duplicate data, saving storage space.

- Improving data integrity: Ensures data is consistent and reliable across related tables.

- Facilitating easier maintenance: Changes to data structure can be made with minimal impact on the entire database.

Normalization is crucial for creating a well-structured database that is efficient and easy to manage.

25. What are the different normal forms in database normalization?

There are several normal forms, each addressing specific issues related to data redundancy and integrity:

- First Normal Form (1NF): Ensures that all columns contain atomic values, that there are no repeating groups, and that each row can be uniquely identified.

- Second Normal Form (2NF): Achieves 1NF and eliminates partial dependencies by ensuring all non-key attributes are fully functionally dependent on the entire primary key.

- Third Normal Form (3NF): Achieves 2NF and removes transitive dependencies, ensuring that non-key attributes depend only on the primary key.

- Boyce-Codd Normal Form (BCNF): A stricter version of 3NF that requires every determinant to be a candidate key, which removes certain anomalies that 3NF still permits.

Understanding these normal forms helps in designing databases that are efficient and eliminate redundancy.

26. What is denormalization, and when would you use it?

Denormalization is the process of intentionally introducing redundancy into a database by merging tables or adding redundant data to improve read performance. It is useful in scenarios where:

- Read performance is critical: Reducing the number of joins in queries can speed up read operations.

- Reporting needs require aggregating data: Denormalized tables can simplify complex reporting queries.

- Data is mostly read-heavy: In systems where data is rarely updated, denormalization can enhance performance without significant risks.

While denormalization can improve performance, it must be balanced with the potential risks of data anomalies.

27. How do you ensure data integrity in a relational database?

Data integrity can be ensured through several practices:

- Using primary keys: Establishes unique identifiers for records, preventing duplicates.

- Foreign keys: Enforces referential integrity by linking records across tables.

- Constraints: Implementing constraints such as NOT NULL, UNIQUE, and CHECK ensures data validity.

- Transactions: Using transactions helps maintain consistent data states by allowing rollback in case of errors.

Implementing these practices helps maintain the accuracy and reliability of the data in the database.
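The transaction point is easiest to see with a transfer between two accounts. In this sketch (Python's `sqlite3` module, hypothetical `accounts` table), the connection's context manager commits when the block succeeds and rolls back when an exception escapes, so a transfer that would overdraw an account leaves no partial update behind.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (
        account_id INTEGER PRIMARY KEY,
        balance    REAL NOT NULL CHECK (balance >= 0)
    );
    INSERT INTO accounts VALUES (1, 100.0), (2, 50.0);
""")

def transfer(conn, src, dst, amount):
    """Move money atomically: both updates commit together or neither does."""
    try:
        # The connection context manager commits on success and
        # rolls back if an exception escapes the block.
        with conn:
            # Credit first, so a failed debit must undo an already-applied change.
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE account_id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE account_id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False

ok = transfer(conn, 1, 2, 30.0)       # succeeds
failed = transfer(conn, 2, 1, 500.0)  # would overdraw account 2, so the CHECK fails
balances = conn.execute("SELECT balance FROM accounts ORDER BY account_id").fetchall()
print(ok, failed, balances)  # True False [(70.0,), (80.0,)]
```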

28. What is a star schema, and how is it used in data warehousing?

A star schema is a type of database schema that is commonly used in data warehousing. It consists of a central fact table connected to multiple dimension tables, resembling a star. The characteristics of a star schema include:

- Simplicity: Easy to understand and query, and the small number of joins helps query performance.

- Efficient for analytical queries: Optimized for read-heavy operations typical in reporting.

- Denormalized structure: Dimension tables may be denormalized, reducing the complexity of joins.

Star schemas are widely used in Business Intelligence (BI) environments for reporting and data analysis.

29. Explain the difference between a fact table and a dimension table.

In a data warehouse, fact tables and dimension tables serve different purposes:

- Fact Table: Contains quantitative data for analysis, such as sales amounts or transaction counts. It typically has foreign keys referencing dimension tables and is usually large in size.

- Dimension Table: Contains descriptive attributes related to the facts, such as product names, dates, or customer information. Dimension tables are usually smaller and provide context for the data in the fact table.

Understanding these differences is crucial for effectively designing a data warehouse schema.

30. What are surrogate keys, and when should you use them?

Surrogate keys are unique identifiers for records in a database that are not derived from application data. They are typically auto-incrementing integers or UUIDs. You should use surrogate keys when:

- The natural key is too complex: A single surrogate column is simpler to reference than a wide or multi-column natural key.

- Natural key values can change: Because the surrogate value never changes, relationships stay stable even when business identifiers (such as an email address) are updated.

- Performance matters: Compact integer surrogate keys keep indexes small and make joins cheaper than long text or composite keys.

Surrogate keys provide a flexible and efficient way to uniquely identify records in a database.
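The stability benefit can be sketched with Python's `sqlite3` module. The `customers` and `orders` tables are hypothetical: orders reference a database-generated `customer_sk`, while the natural key (`email`) stays unique but is free to change without touching any child rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE customers (
        customer_sk INTEGER PRIMARY KEY,  -- surrogate key generated by the database
        email       TEXT NOT NULL UNIQUE  -- natural key, still enforced as unique
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_sk INTEGER NOT NULL REFERENCES customers(customer_sk)
    );
""")

cur = conn.execute("INSERT INTO customers (email) VALUES ('asha@example.com')")
sk = cur.lastrowid  # the surrogate value SQLite assigned
conn.execute("INSERT INTO orders (customer_sk) VALUES (?)", (sk,))

# The natural key changes; orders reference the surrogate, so no child rows change.
conn.execute(
    "UPDATE customers SET email = 'asha.k@example.com' WHERE customer_sk = ?", (sk,)
)

row = conn.execute("""
    SELECT c.email FROM orders o JOIN customers c ON c.customer_sk = o.customer_sk
""").fetchone()
print(sk, row)  # 1 ('asha.k@example.com',)
```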

31. How do you handle slowly changing dimensions (SCD) in data warehousing?

Slowly Changing Dimensions (SCD) refer to dimensions that change over time. There are several strategies to handle SCD:

- SCD Type 1: Overwrites old data with new data, losing historical information.

- SCD Type 2: Creates a new record with a new version number or effective date, preserving historical data.

- SCD Type 3: Stores both old and new values in the same record, allowing for limited historical tracking.

The choice of SCD type depends on business requirements for historical data tracking and the complexity of dimension changes.
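SCD Type 2 is the variant interviewers most often ask you to implement. Here is a minimal sketch with Python's `sqlite3` module, assuming a hypothetical `dim_customer` table that tracks a customer's city with `effective_from`/`effective_to` dates and an `is_current` flag; real pipelines usually run the same logic as a set-based MERGE or batch job.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE dim_customer (
        customer_sk    INTEGER PRIMARY KEY,      -- surrogate key, one per version
        customer_id    INTEGER NOT NULL,         -- natural/business key
        city           TEXT NOT NULL,
        effective_from TEXT NOT NULL,
        effective_to   TEXT,                     -- NULL while the row is current
        is_current     INTEGER NOT NULL DEFAULT 1
    )
""")

def apply_scd2(conn, customer_id, city, as_of):
    """Type 2 change: expire the current version and insert a new one."""
    with conn:
        current = conn.execute(
            "SELECT customer_sk, city FROM dim_customer "
            "WHERE customer_id = ? AND is_current = 1",
            (customer_id,),
        ).fetchone()
        if current and current[1] == city:
            return  # attribute unchanged: keep the current version
        if current:
            conn.execute(
                "UPDATE dim_customer SET effective_to = ?, is_current = 0 "
                "WHERE customer_sk = ?",
                (as_of, current[0]),
            )
        conn.execute(
            "INSERT INTO dim_customer (customer_id, city, effective_from) "
            "VALUES (?, ?, ?)",
            (customer_id, city, as_of),
        )

apply_scd2(conn, 42, "Pune", "2023-01-01")
apply_scd2(conn, 42, "Mumbai", "2024-06-15")  # customer moves: new version
history = conn.execute(
    "SELECT customer_id, city, effective_from, effective_to, is_current "
    "FROM dim_customer ORDER BY customer_sk"
).fetchall()
for row in history:
    print(row)
```

Fact rows loaded before the move keep pointing at the Pune version's surrogate key, which is what preserves history for reporting.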

32. What is a snowflake schema, and how does it differ from a star schema?

A snowflake schema is a more complex version of a star schema where dimension tables are normalized into multiple related tables. The key differences are:

- Structure: Snowflake schemas have more tables and relationships due to normalization, while star schemas are simpler and more denormalized.

- Query performance: Star schemas generally provide faster query performance due to fewer joins, while snowflake schemas may require more complex queries.

- Storage requirements: Snowflake schemas can reduce data redundancy, potentially saving storage space.

The choice between star and snowflake schemas depends on specific use cases and performance needs.
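The extra join a snowflake introduces shows up directly in the SQL. In this sketch (Python's `sqlite3` module, hypothetical tables and data), category attributes are normalized out of the product dimension, so sales by category needs two joins; in a star schema the category name would sit on `dim_product` and one join would suffice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Snowflake: category attributes are split out of the product dimension.
    CREATE TABLE dim_category (category_key INTEGER PRIMARY KEY, category_name TEXT);
    CREATE TABLE dim_product (
        product_key  INTEGER PRIMARY KEY,
        product_name TEXT,
        category_key INTEGER REFERENCES dim_category(category_key)
    );
    CREATE TABLE fact_sales (product_key INTEGER, amount REAL);

    INSERT INTO dim_category VALUES (1, 'Electronics'), (2, 'Books');
    INSERT INTO dim_product VALUES (10, 'Headphones', 1), (11, 'Laptop', 1), (12, 'Novel', 2);
    INSERT INTO fact_sales VALUES (10, 80.0), (11, 900.0), (12, 15.0), (12, 20.0);
""")

# fact -> product -> category: two joins to reach the category name.
by_category = conn.execute("""
    SELECT c.category_name, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product  p ON p.product_key  = f.product_key
    JOIN dim_category c ON c.category_key = p.category_key
    GROUP BY c.category_name
    ORDER BY c.category_name
""").fetchall()
print(by_category)  # [('Books', 35.0), ('Electronics', 980.0)]
```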

33. What are indexes, and how do they improve database performance?

Indexes are data structures that improve the speed of data retrieval operations on a database table. They work like a book’s index, allowing quick access to rows based on the indexed column values. Key benefits of using indexes include:

- Faster query performance: Reduces the amount of data scanned during query execution.

- Efficiency in sorting and filtering: Enhances the performance of sorting operations and WHERE clause evaluations.

- Improved join performance: Speeds up joins between tables based on indexed columns.

However, indexes can slow down write operations, so it’s important to use them judiciously based on query patterns.

34. How do you optimize a database for performance?

Optimizing a database for performance can involve several strategies:

- Indexing: Create appropriate indexes on frequently queried columns to speed up data retrieval.

- Query optimization: Analyze and rewrite complex queries for efficiency, making use of joins and subqueries appropriately.

- Partitioning: Divide large tables into smaller, more manageable pieces to improve performance.

- Normalization and denormalization: Strike a balance between normalization for data integrity and denormalization for performance based on use cases.

- Regular maintenance: Implement regular database maintenance tasks like updating statistics and rebuilding indexes.

Combining these strategies can lead to a well-performing database that meets application demands.

35. What role does an Entity-Relationship Diagram (ERD) play in data modeling?

An Entity-Relationship Diagram (ERD) is a visual representation of the entities in a database and their relationships. Key roles of ERDs include:

- Visualizing schema design: Provides a clear picture of how different entities interact, aiding in the design process.

- Facilitating communication: Serves as a common language between technical and non-technical stakeholders about the database structure.

- Identifying relationships: Helps in identifying cardinality and participation constraints, which are vital for accurate database design.

ERDs are essential tools in the data modeling process, ensuring a well-structured and understood schema.

Here are some intermediate interview questions for Data Modeling that focus on practical applications, best practices, and performance considerations.

39. What is normalization in database design and why is it important?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing a database into tables and defining relationships between them. This is important because it minimizes the risk of data anomalies, ensures that the data is logically stored, and optimizes storage efficiency. Normalization typically involves several normal forms, each with its own set of rules to achieve a higher level of organization.

40. Can you explain the difference between a primary key and a foreign key?

A primary key is a unique identifier for a record in a database table, ensuring that no two rows have the same key value. It is essential for maintaining entity integrity. A foreign key, on the other hand, is a field in one table that links to the primary key of another table, establishing a relationship between the two tables. Foreign keys are crucial for maintaining referential integrity, ensuring that relationships between tables remain consistent.

41. What are some common types of relationships in data modeling?

- One-to-One: A single record in one table is related to a single record in another table. This is often used for splitting data into separate tables for clarity.

- One-to-Many: A single record in one table can relate to multiple records in another table, commonly used in a parent-child relationship.

- Many-to-Many: Multiple records in one table can relate to multiple records in another table, usually implemented through a junction table.

Understanding these relationships helps in designing a database schema that accurately reflects the business requirements and ensures data integrity.
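A many-to-many relationship is usually implemented as two one-to-many relationships through a junction table. Here is a sketch with Python's `sqlite3` module, using hypothetical `students`, `courses`, and `enrollments` tables; the composite primary key on the junction table stops the same pair from being recorded twice.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE students (student_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE courses  (course_id  INTEGER PRIMARY KEY, title TEXT NOT NULL);
    -- Junction table: each row links one student to one course.
    -- The composite primary key prevents duplicate enrollments.
    CREATE TABLE enrollments (
        student_id INTEGER NOT NULL REFERENCES students(student_id),
        course_id  INTEGER NOT NULL REFERENCES courses(course_id),
        PRIMARY KEY (student_id, course_id)
    );
    INSERT INTO students VALUES (1, 'Asha'), (2, 'Ravi');
    INSERT INTO courses  VALUES (100, 'Databases'), (200, 'Statistics');
    INSERT INTO enrollments VALUES (1, 100), (1, 200), (2, 100);
""")

# Students per course: each side is one-to-many with the junction table.
rows = conn.execute("""
    SELECT c.title, COUNT(*) AS students
    FROM enrollments e JOIN courses c ON c.course_id = e.course_id
    GROUP BY c.title ORDER BY c.title
""").fetchall()
print(rows)  # [('Databases', 2), ('Statistics', 1)]
```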

42. What is denormalization and when should it be used?

Denormalization is the process of intentionally introducing redundancy into a database by merging tables or adding duplicate data to improve read performance. It is often used in scenarios where read-heavy operations are prevalent, such as data warehousing or reporting systems, where the speed of data retrieval is prioritized over write performance. However, it can lead to data anomalies and increased storage requirements, so it should be used judiciously.

43. How would you approach designing a star schema for a data warehouse?

Designing a star schema involves the following steps:

- Identify the business process: Determine the key business process you want to analyze, such as sales or inventory.

- Define fact and dimension tables: Create a fact table that contains measurable metrics and dimension tables that provide context, such as time, location, and product details.

- Establish relationships: Ensure the fact table has foreign keys referencing the primary keys of the dimension tables.

This structure simplifies queries and improves performance, making it easier for analysts to retrieve and analyze data efficiently.
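The three steps can be sketched as a small schema plus a typical query, here with Python's `sqlite3` module. The business process (retail sales), the grain (one row per product per store per day), and all table names are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Step 1 (assumed): the business process is retail sales.
    -- Step 2: dimensions provide context (date, product, store).
    CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_store   (store_key INTEGER PRIMARY KEY, city TEXT);

    -- Steps 2 and 3: the fact table holds measures plus a foreign key per dimension.
    -- Assumed grain: one row per product per store per day.
    CREATE TABLE fact_sales (
        date_key    INTEGER REFERENCES dim_date(date_key),
        product_key INTEGER REFERENCES dim_product(product_key),
        store_key   INTEGER REFERENCES dim_store(store_key),
        quantity    INTEGER,
        revenue     REAL
    );

    INSERT INTO dim_date VALUES (20240101, '2024-01-01', '2024-01'),
                                (20240201, '2024-02-01', '2024-02');
    INSERT INTO dim_product VALUES (1, 'Pen', 'Stationery'), (2, 'Mug', 'Kitchen');
    INSERT INTO dim_store VALUES (1, 'Pune'), (2, 'Delhi');
    INSERT INTO fact_sales VALUES
        (20240101, 1, 1, 10, 20.0),
        (20240101, 2, 2,  2, 16.0),
        (20240201, 1, 2,  5, 10.0);
""")

# Typical star query: join the needed dimensions, then aggregate the measures.
monthly = conn.execute("""
    SELECT d.month, p.category, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_date d    ON d.date_key = f.date_key
    JOIN dim_product p ON p.product_key = f.product_key
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
""").fetchall()
print(monthly)
```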

44. What are some best practices for data modeling in a relational database?

- Use descriptive naming conventions: Choose clear and meaningful names for tables and columns to make the schema self-explanatory.

- Implement proper indexing: Create indexes on frequently queried columns to enhance performance but avoid excessive indexing that can slow down write operations.

- Maintain data integrity: Use constraints such as primary keys, foreign keys, and unique constraints to enforce data validity.

- Document the design: Keep comprehensive documentation of the schema, including relationships and data types, to aid future developers and analysts.

These practices help ensure that the database remains efficient, maintainable, and scalable as data needs grow.

45. How do you ensure data quality in your data models?

- Data validation: Implement checks during data entry to ensure that the data meets predefined standards and formats.

- Regular audits: Conduct periodic reviews and audits of the data to identify inconsistencies or anomalies.

- Use ETL processes: Employ Extract, Transform, Load (ETL) processes to cleanse and standardize data before loading it into the data warehouse.

- User feedback: Incorporate feedback from end-users to identify areas where data quality may be lacking and make necessary adjustments.

Maintaining data quality is crucial for reliable analytics and reporting, as poor quality data can lead to incorrect insights and decisions.
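The validation and cleansing steps can be sketched as a small pre-load check in plain Python. The field names and rules below are hypothetical; the pattern is to standardize valid rows and route invalid ones to a reject list for review rather than loading them silently.

```python
from datetime import date

def validate_row(row):
    """Return a list of data-quality problems for one record (hypothetical rules)."""
    problems = []
    if row.get("customer_id") is None:
        problems.append("missing customer_id")
    email = (row.get("email") or "").strip()
    if "@" not in email:
        problems.append("invalid email")
    try:
        date.fromisoformat(row.get("signup_date", ""))
    except (TypeError, ValueError):
        problems.append("bad signup_date")
    return problems

def clean_and_split(rows):
    """Standardize valid rows; send invalid ones to a reject list with reasons."""
    accepted, rejected = [], []
    for row in rows:
        problems = validate_row(row)
        if problems:
            rejected.append((row, problems))
        else:
            # Standardization step: trim and lowercase emails before loading.
            accepted.append({**row, "email": row["email"].strip().lower()})
    return accepted, rejected

incoming = [
    {"customer_id": 1, "email": " Asha@Example.com ", "signup_date": "2024-03-01"},
    {"customer_id": None, "email": "ravi@example.com", "signup_date": "2024-03-02"},
    {"customer_id": 3, "email": "not-an-email", "signup_date": "03/02/2024"},
]
accepted, rejected = clean_and_split(incoming)
print(accepted)
print([problems for _, problems in rejected])
```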

Data Modeling Interview Questions for Experienced

Data Modeling interview questions for experienced professionals delve into advanced topics such as normalization, denormalization, data warehousing, and the implications of data architecture on scalability and performance. These questions also explore design patterns and best practices, as well as leadership roles in mentoring teams to implement effective data strategies.

47. What is normalization and why is it important in database design?

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves dividing a database into tables and defining relationships between them. Its importance lies in minimizing duplicate data, ensuring data dependencies are logical, and enhancing data consistency. Proper normalization also makes the database easier to maintain, because each fact is updated in only one place, although highly normalized schemas may need more joins at query time.

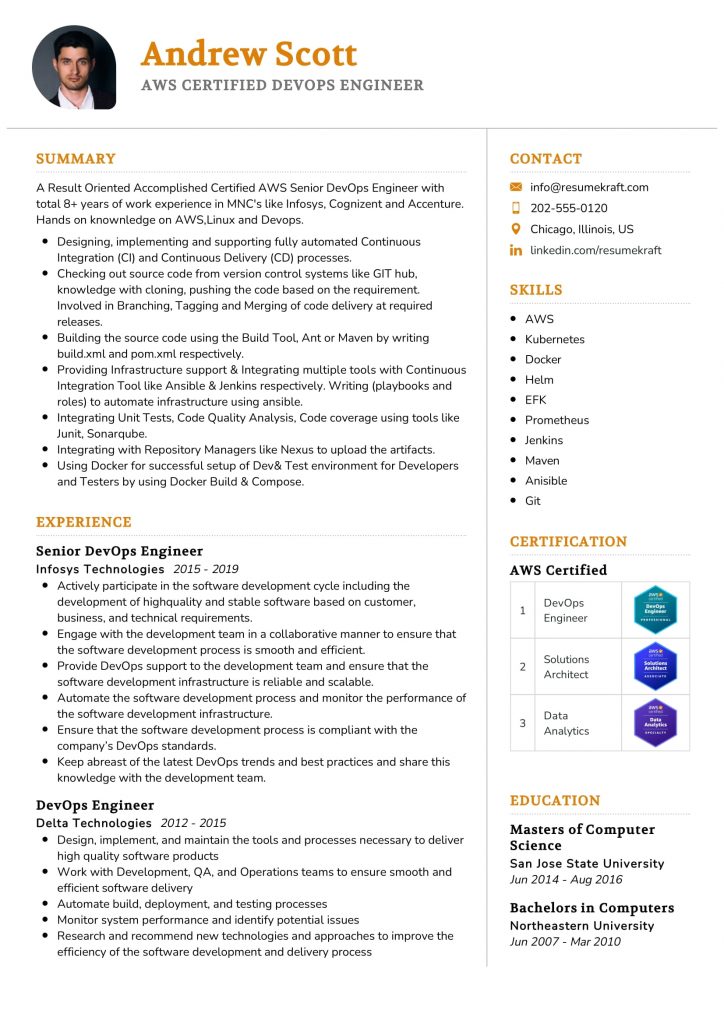

Build your resume in 5 minutes

Our resume builder is easy to use and will help you create a resume that is ATS-friendly and will stand out from the crowd.

48. Can you explain denormalization and when it might be beneficial?

Denormalization is the process of intentionally introducing redundancy into a database by merging tables or including redundant data. This can be beneficial in scenarios where read performance is critical, such as in reporting databases or data warehouses. It reduces the number of joins needed in queries, improving response times at the cost of increased storage and potential data anomalies.

49. What are the key differences between OLTP and OLAP systems?

- OLTP (Online Transaction Processing): Focuses on managing transactional data, suitable for day-to-day operations, and requires high speed and efficiency for transaction processing.

- OLAP (Online Analytical Processing): Designed for complex queries and analysis, OLAP systems aggregate data from multiple sources to facilitate decision-making processes.

Understanding these differences is crucial for selecting the appropriate database design based on system requirements.

50. What is a star schema and how does it differ from a snowflake schema?

A star schema is a type of database schema that is characterized by a central fact table connected to multiple dimension tables. It is simple and optimized for read-heavy operations. In contrast, a snowflake schema normalizes the dimension tables into multiple related tables, which can lead to more complex queries but also reduces redundancy. The choice between them depends on the specific use case and performance needs.

51. Describe the CAP theorem and its implications for distributed database systems.

The CAP theorem states that a distributed data store can only guarantee two out of the following three properties: Consistency, Availability, and Partition Tolerance. This means that in the event of a network partition, a system must choose between remaining consistent (all nodes have the same data) or being available (responding to requests). Understanding the CAP theorem is essential for designing systems that meet specific availability and consistency requirements.

52. How do you approach data modeling for a large-scale application?

When modeling data for a large-scale application, I follow these steps:

- Understand business requirements and data sources

- Create an Entity-Relationship Diagram (ERD) to visualize data relationships

- Normalize data to eliminate redundancy while considering denormalization for performance

- Implement indexing strategies for fast query performance

- Iterate based on performance testing and feedback

This approach ensures that the data model is both efficient and scalable.

53. What are the best practices for indexing in a relational database?

- Choose the right columns for indexing based on query patterns to enhance performance.

- Avoid over-indexing, as it can slow down write operations and increase storage requirements.

- Regularly monitor and analyze query performance to adjust indexing strategies accordingly.

- Utilize composite indexes for multi-column queries where applicable.

Following these practices can significantly boost query performance while maintaining data integrity.
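Column order matters in a composite index. This sketch (Python's `sqlite3` module, hypothetical `orders` table and `idx_orders_cust_date` index) checks SQLite's query plan: the index serves filters on its leading column, alone or with the second column, but a filter on only the second column typically falls back to a full scan. This assumes no `ANALYZE` statistics have been gathered, and the plan wording varies by SQLite version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, "
    "order_date TEXT, amount REAL)"
)
# Composite index: customer_id is the leading column, order_date the second.
conn.execute("CREATE INDEX idx_orders_cust_date ON orders(customer_id, order_date)")

def plan(sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)

both = plan("SELECT * FROM orders WHERE customer_id = 7 AND order_date >= '2024-01-01'")
prefix = plan("SELECT * FROM orders WHERE customer_id = 7")
second_only = plan("SELECT * FROM orders WHERE order_date >= '2024-01-01'")
print(both)         # uses idx_orders_cust_date on both columns
print(prefix)       # uses idx_orders_cust_date on the leading column
print(second_only)  # typically a full SCAN: order_date is not the leading column
```

So when designing a composite index, put the column that queries filter on most consistently (usually with equality) first.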

54. Explain the concept of a data lake and its advantages over traditional data warehouses.

A data lake is a centralized repository that stores structured, semi-structured, and unstructured data at scale. Unlike traditional data warehouses, which require structured data that conforms to a predefined schema before loading (schema-on-write), data lakes keep raw data in its native format and apply structure when it is read (schema-on-read). The advantages include greater flexibility in data ingestion, the ability to store large volumes of diverse data types, and support for advanced analytics and machine learning on varied data sets.

55. What is data lineage and why is it important in data governance?

Data lineage refers to the tracking and visualization of data’s origins and its movement through the data lifecycle. It is crucial in data governance as it helps organizations understand data flow, ensures compliance with regulations, and enhances data quality management by providing clarity on data transformations and processes. This transparency fosters trust in data-driven decision-making.
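At its core, lineage is a dependency graph. The following plain-Python sketch assumes a hypothetical graph in which each dataset lists the datasets it is derived from, and walks upstream to find everything a dashboard depends on. Dedicated lineage tools capture this graph automatically, but the traversal idea is the same.

```python
from collections import deque

# Hypothetical lineage graph: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "sales_dashboard": ["fact_sales", "dim_product"],
    "fact_sales": ["staging_orders"],
    "dim_product": ["staging_products"],
    "staging_orders": ["crm_orders_export"],
    "staging_products": ["erp_product_feed"],
}

def upstream_sources(dataset, lineage=LINEAGE):
    """Return every dataset that feeds into `dataset`, directly or indirectly (BFS)."""
    seen, queue = set(), deque([dataset])
    while queue:
        current = queue.popleft()
        for parent in lineage.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen

def root_sources(dataset, lineage=LINEAGE):
    """Upstream datasets with no parents of their own, i.e. the originating systems."""
    return {d for d in upstream_sources(dataset, lineage) if not lineage.get(d)}

print(sorted(root_sources("sales_dashboard")))  # ['crm_orders_export', 'erp_product_feed']
```

The same traversal run in the opposite direction (from a source to its consumers) answers the impact-analysis question of which reports break if a source changes.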

56. How do you ensure data quality in your data models?

- Implement validation rules to enforce data integrity at the point of entry.

- Schedule regular data audits to identify and rectify anomalies or inconsistencies.

- Utilize ETL (Extract, Transform, Load) processes to cleanse and standardize data before ingestion.

- Incorporate user feedback mechanisms to continuously improve data quality.

These practices contribute to maintaining high data quality standards throughout the data lifecycle.

57. Discuss the role of NoSQL databases in modern data modeling.

NoSQL databases play a significant role in modern data modeling by providing flexible schemas and scalability options for handling large volumes of unstructured data. They are particularly useful for applications requiring high availability and horizontal scaling, such as real-time analytics and social media platforms. By allowing dynamic data structures, NoSQL databases enable developers to adapt quickly to changing data requirements.

58. What strategies would you use to mentor junior data modelers?

- Encourage hands-on practice through real-world projects, allowing them to apply theoretical concepts.

- Provide resources such as books, articles, and tutorials to foster continuous learning.

- Conduct regular review sessions to discuss their work, offer constructive feedback, and share best practices.

- Promote collaboration on data modeling tasks to enhance their problem-solving skills.

These strategies can help junior data modelers grow their skills and confidence in the field.

59. How do you approach performance tuning in databases?

Performance tuning in databases involves several steps, including:

- Analyzing slow queries using query execution plans to identify bottlenecks.

- Optimizing indexes and queries based on usage patterns.

- Monitoring database performance metrics regularly to detect issues early.

- Adjusting configurations such as memory allocation and connection pooling to enhance performance.

This proactive approach helps the database run efficiently and keep pace with application needs as data volumes and query patterns change.
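The first two steps, reading the execution plan and then adding an index, can be seen end to end in SQLite through Python's `sqlite3` module. The table, data, and index name below are hypothetical; other engines expose the same idea through their own `EXPLAIN` variants, with different output formats.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 1000, i * 0.5) for i in range(10_000)],
)

QUERY = "SELECT order_id, amount FROM orders WHERE customer_id = ?"


def plan(sql, params=()):
    """Return the 'detail' text of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


before = plan(QUERY, (42,))  # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))   # index search
print("before:", before)
print("after: ", after)
```

The exact wording of the plan varies between SQLite versions (for example `SCAN orders` or `SCAN TABLE orders`), but the shift from a scan to a search using the new index is the signal to look for.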

60. Explain the significance of data abstraction in data modeling.

Data abstraction is the process of simplifying complex data structures by exposing only relevant details while hiding unnecessary complexity. In data modeling, it appears in the progression from conceptual to logical to physical models, and in mechanisms such as views that present a stable interface over the underlying tables. It allows developers and stakeholders to focus on high-level data interactions without being bogged down by implementation specifics. This promotes better communication among stakeholders, enhances system flexibility, and aids in building scalable architectures by allowing changes in underlying data structures without impacting the overall system.
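One common way to let "changes in underlying data structures" happen "without impacting the overall system" is to have consumers query a view rather than base tables. The sketch below uses SQLite via Python's `sqlite3`; the table and view names are hypothetical. The physical model is split into two tables, only the view is redefined, and the consumer query keeps returning the same result.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Version 1 of the physical model: a single wide table behind a view.
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, full_name TEXT, city TEXT);
    INSERT INTO customer VALUES (1, 'Ana Silva', 'Lisbon');
    CREATE VIEW customer_directory AS
        SELECT id, full_name AS name, city FROM customer;
""")


def directory():
    """Consumer code: depends only on the view, never on the base tables."""
    return conn.execute(
        "SELECT id, name, city FROM customer_directory ORDER BY id"
    ).fetchall()


v1 = directory()

# Version 2: the data is split into person and address tables.
# Only the view definition changes; directory() is untouched.
conn.executescript("""
    DROP VIEW customer_directory;
    CREATE TABLE person (id INTEGER PRIMARY KEY, full_name TEXT);
    CREATE TABLE address (person_id INTEGER REFERENCES person(id), city TEXT);
    INSERT INTO person SELECT id, full_name FROM customer;
    INSERT INTO address SELECT id, city FROM customer;
    DROP TABLE customer;
    CREATE VIEW customer_directory AS
        SELECT p.id, p.full_name AS name, a.city
        FROM person p JOIN address a ON a.person_id = p.id;
""")

v2 = directory()
print(v1, v2)
```

Views are only one form of abstraction; the same principle applies to APIs, semantic layers, and the separation between logical and physical models.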

Here are three interview questions for experienced candidates, covering essential data modeling concepts related to architecture, optimization, scalability, and design patterns.

62. What are the key differences between normalization and denormalization in data modeling?

Normalization is the process of organizing data to reduce redundancy and improve data integrity, typically by splitting data into multiple related tables linked by keys. Denormalization, on the other hand, deliberately reintroduces redundancy, for example by combining tables or copying attributes, to simplify queries and improve read performance at the cost of extra storage and a higher risk of update anomalies. While normalization is crucial for transactional systems where data integrity is paramount, denormalization is often employed in analytical systems where read performance is prioritized.
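The trade-off becomes concrete when data changes. In the SQLite sketch below (via Python's `sqlite3`, with hypothetical tables), a customer's city is stored once in the normalized model but copied onto every order row in the denormalized table. The partial update of the wide table is deliberate, to show the kind of update anomaly that redundancy makes possible.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: each customer's city is stored exactly once.
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, city TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY,
                         customer_id INTEGER REFERENCES customer(customer_id),
                         amount REAL);
    INSERT INTO customer VALUES (1, 'Lisbon');
    INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 35.0);

    -- Denormalized: city copied onto every order row, so reads need no join.
    CREATE TABLE orders_wide AS
        SELECT o.order_id, o.customer_id, c.city, o.amount
        FROM orders o JOIN customer c USING (customer_id);
""")

# The customer moves. Normalized model: exactly one row changes.
conn.execute("UPDATE customer SET city = 'Porto' WHERE customer_id = 1")

# Denormalized model: every copy must change. This update deliberately
# misses order 11 to simulate an update anomaly.
conn.execute("UPDATE orders_wide SET city = 'Porto' WHERE order_id = 10")

normalized_cities = conn.execute(
    "SELECT DISTINCT c.city FROM orders o JOIN customer c USING (customer_id)"
).fetchall()
wide_cities = conn.execute(
    "SELECT DISTINCT city FROM orders_wide ORDER BY city"
).fetchall()
print(normalized_cities, wide_cities)
```

In analytical systems this risk is usually managed by rebuilding denormalized tables from the normalized source in batch loads rather than updating them in place.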

63. How can you optimize a star schema for better performance in a data warehouse?

- Indexing: Create appropriate indexes on fact and dimension tables to speed up query performance, especially on foreign keys and frequently queried columns.

- Partitioning: Partition large fact tables, typically by date, so that queries filtering on the partition key scan only the relevant partitions and older data can be archived or purged one partition at a time.

- Aggregations: Pre-compute and store aggregate values to reduce the amount of data processed during queries, leading to faster response times.

Optimizing a star schema involves balancing the trade-offs between query performance and data storage efficiency, ensuring that the data warehouse serves its intended analytical purposes effectively.
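Two of these techniques, indexing the fact table's foreign keys and pre-computing aggregates, are sketched below in SQLite via Python's `sqlite3`. All table names and data are hypothetical. Partitioning is omitted because SQLite has no native table partitioning; in engines that support it, it would be declared on `fact_sales`, typically by date.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE fact_sales  (date_key    INTEGER REFERENCES dim_date(date_key),
                              product_key INTEGER REFERENCES dim_product(product_key),
                              quantity INTEGER, revenue REAL);

    -- Indexing: fact-table foreign keys are the usual join and filter paths.
    CREATE INDEX idx_fact_sales_date    ON fact_sales (date_key);
    CREATE INDEX idx_fact_sales_product ON fact_sales (product_key);

    INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
    INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
    INSERT INTO fact_sales VALUES
        (20240101, 1, 3, 30.0), (20240101, 2, 1, 60.0),
        (20240201, 1, 2, 20.0), (20240201, 2, 2, 120.0);

    -- Aggregation: a pre-computed monthly summary, rebuilt by the load job.
    CREATE TABLE agg_sales_month_category AS
        SELECT d.year, d.month, p.category,
               SUM(f.quantity) AS quantity, SUM(f.revenue) AS revenue
        FROM fact_sales f
        JOIN dim_date d    ON d.date_key = f.date_key
        JOIN dim_product p ON p.product_key = f.product_key
        GROUP BY d.year, d.month, p.category;
""")

# Reports read the small summary table instead of re-scanning the fact table.
games_revenue = conn.execute(
    "SELECT month, revenue FROM agg_sales_month_category "
    "WHERE category = 'Games' ORDER BY month"
).fetchall()
print(games_revenue)
```

The summary table illustrates the storage-versus-speed trade-off mentioned above: it duplicates information already in the fact table and must be refreshed whenever new facts are loaded.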

64. Can you explain the concept of data vault modeling and its advantages?

Data vault modeling is an approach to data warehouse design that emphasizes agility and scalability. It consists of three main components: hubs (unique business keys), links (relationships between hubs), and satellites (descriptive attributes). The advantages of data vault modeling include:

- Scalability and Flexibility: New data sources and relationships can be added as new hubs, links, or satellites, so the model accommodates changing business requirements without extensive redesign.

- Historical Tracking: Satellites are insert-only and stamped with a load date, so every change to descriptive attributes is retained, which suits organizations that need to analyze changes over time.

- Separation of Concerns: Because business keys, relationships, and descriptive attributes are stored separately, integrating a new data source usually means adding tables rather than altering existing ones.

This model is particularly beneficial for large organizations with complex data environments, as it supports ongoing changes and adaptations in data strategy.
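A minimal data vault can be sketched in SQLite via Python's `sqlite3`. The sketch assumes Data Vault 2.0-style hash keys computed from business keys (MD5 is chosen here only for illustration) and standard `load_date` and `record_source` columns; all table names and values are hypothetical. A change in the source system adds a new satellite row instead of overwriting the old one.

```python
import hashlib
import sqlite3


def hash_key(*business_keys):
    """Deterministic hash key from business keys (MD5 is an assumption here)."""
    return hashlib.md5("|".join(business_keys).encode("utf-8")).hexdigest()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hubs: one row per unique business key.
    CREATE TABLE hub_customer (customer_hk TEXT PRIMARY KEY, customer_no TEXT,
                               load_date TEXT, record_source TEXT);
    CREATE TABLE hub_order    (order_hk TEXT PRIMARY KEY, order_no TEXT,
                               load_date TEXT, record_source TEXT);
    -- Link: the relationship between the two hubs.
    CREATE TABLE link_customer_order (link_hk TEXT PRIMARY KEY, customer_hk TEXT,
                                      order_hk TEXT, load_date TEXT, record_source TEXT);
    -- Satellite: descriptive attributes, insert-only, versioned by load_date.
    CREATE TABLE sat_customer (customer_hk TEXT, load_date TEXT, name TEXT, city TEXT,
                               record_source TEXT, PRIMARY KEY (customer_hk, load_date));
""")

c_hk, o_hk = hash_key("C-001"), hash_key("O-9001")
conn.execute("INSERT INTO hub_customer VALUES (?, 'C-001', '2024-01-01', 'crm')", (c_hk,))
conn.execute("INSERT INTO hub_order VALUES (?, 'O-9001', '2024-01-05', 'shop')", (o_hk,))
conn.execute(
    "INSERT INTO link_customer_order VALUES (?, ?, ?, '2024-01-05', 'shop')",
    (hash_key("C-001", "O-9001"), c_hk, o_hk),
)

# The customer's city changes in the source: a new satellite row is added,
# and the earlier version is kept for historical analysis.
conn.executemany(
    "INSERT INTO sat_customer VALUES (?, ?, ?, ?, 'crm')",
    [
        (c_hk, "2024-01-01", "Ana Silva", "Lisbon"),
        (c_hk, "2024-03-01", "Ana Silva", "Porto"),
    ],
)

history = conn.execute(
    "SELECT load_date, city FROM sat_customer WHERE customer_hk = ? ORDER BY load_date",
    (c_hk,),
).fetchall()
current_city = history[-1][1]
print(history, current_city)
```

Adding a new source for customer data would typically mean creating another satellite on `hub_customer`, leaving the existing hub, link, and satellite untouched.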

How to Prepare for Your Data Modeling Interview

Preparing for a Data Modeling interview requires a solid understanding of data structures, database design principles, and practical experience with relevant tools. Candidates should focus on both theoretical knowledge and hands-on skills to effectively convey their expertise during the interview.

- Review Data Modeling Concepts: Revisit fundamental concepts like normalization, denormalization, ER diagrams, and star/snowflake schemas. Understanding these principles will help you articulate your design choices and decisions during the interview, demonstrating your foundation in data modeling.

- Practice with Real-World Scenarios: Work on case studies or real-world projects that require data modeling. This practical experience allows you to discuss specific examples during interviews, showcasing your skills and thought process in solving complex data challenges.

- Familiarize Yourself with Database Systems: Gain proficiency in popular database management systems like MySQL, PostgreSQL, and MongoDB. Understanding their features and limitations will enable you to tailor your data models accordingly and answer questions about database performance and constraints.

- Brush Up on SQL Skills: Since data modeling often involves SQL, practice writing queries to create, modify, and retrieve data. Being able to demonstrate SQL proficiency will enhance your ability to discuss how your models translate into functional database structures.

- Understand Business Requirements: Learn how to gather and interpret business requirements effectively. Being able to align your data models with business goals will show interviewers that you can bridge the gap between technical implementation and strategic objectives.

- Stay Updated on Data Modeling Tools: Familiarize yourself with data modeling tools like ER/Studio, Lucidchart, or Microsoft Visio. Being adept at these tools can help you create clear and professional data models, making it easier to communicate your ideas during the interview.

- Prepare for Behavioral Questions: Be ready to discuss how you have handled challenges in previous data modeling projects. Use the STAR (Situation, Task, Action, Result) method to structure your responses, which will help you convey your experience effectively and demonstrate your problem-solving skills.

Common Data Modeling Interview Mistakes to Avoid

When interviewing for a Data Modeling position, candidates often make critical mistakes that can hinder their chances of success. Understanding and avoiding these common pitfalls can significantly improve performance and demonstrate expertise in the field.

- Neglecting to Understand Business Requirements: Failing to grasp the core business needs can lead to inappropriate data models. Candidates should demonstrate their ability to translate business requirements into effective data structures.

- Ignoring Data Quality and Integrity: Candidates often overlook the importance of data quality. Emphasizing strategies for maintaining data integrity should be a key focus during the interview.

- Not Demonstrating Technical Skills: Many candidates underestimate the importance of showcasing their technical abilities with tools like SQL, ER diagrams, or data modeling software. Practical demonstrations can help validate their expertise.

- Overcomplicating Models: Some candidates design overly complex data models when a simpler, more efficient one would do. Emphasize clarity and maintainability in your designs.

- Failing to Discuss Normalization: Neglecting to discuss normalization principles can be a red flag. Candidates should articulate how they would balance normalization with performance considerations in their data models.

- Not Preparing for Scenario-Based Questions: Interviewers often ask situational questions to assess problem-solving skills. Candidates should prepare to discuss how they would approach real-world data modeling challenges.

- Underestimating Collaboration: Data modeling often requires teamwork. Candidates should highlight their ability to collaborate with stakeholders, developers, and analysts to create effective models.

- Ignoring Documentation Practices: Proper documentation is crucial for data models. Candidates should discuss their approach to documenting data structures and ensuring that they are understandable for future reference.

Key Takeaways for Data Modeling Interview Success

- Prepare your resume using an AI resume builder to ensure clarity and impact. Highlight relevant skills and experiences that align with data modeling positions.

- Utilize well-structured resume templates to create a professional appearance. A clean format makes it easier for hiring managers to identify your qualifications.

- Showcase your experience with resume examples that reflect your data modeling projects. Demonstrating tangible achievements can set you apart from other candidates.

- Don’t overlook cover letters; they provide an opportunity to express your passion for data modeling and explain how your skills directly benefit the company.

- Engage in mock interview practice to refine your responses to common data modeling questions. This preparation boosts your confidence and improves your performance during the actual interview.

Frequently Asked Questions

1. How long does a typical Data Modeling interview last?

A typical Data Modeling interview can last anywhere from 30 minutes to 1 hour. The duration depends on the company’s interview structure and the complexity of the role. During this time, you may be asked technical questions about data modeling concepts, tools, and methodologies, as well as behavioral questions to assess your problem-solving skills and team fit. It’s essential to be prepared for both types of questions within the allotted time.

2. What should I wear to a Data Modeling interview?

Your attire for a Data Modeling interview should be professional and appropriate for the company culture. In most cases, business casual is a safe choice, including slacks, a collared shirt, or a blouse. If the company has a more formal dress code, consider wearing a suit. It’s crucial to feel comfortable and confident in your outfit, as it contributes to the overall impression you make during the interview.

3. How many rounds of interviews are typical for a Data Modeling position?

For a Data Modeling position, you can typically expect 2 to 4 rounds of interviews. The first round may focus on technical skills, while subsequent rounds often include behavioral interviews and discussions with team members or management. Some companies may also include a practical assessment or case study to evaluate your data modeling capabilities. Each round helps the employer assess your fit for the role and the organization.

4. Should I send a thank-you note after my Data Modeling interview?

Yes, sending a thank-you note after your Data Modeling interview is highly recommended. It demonstrates your appreciation for the interviewer’s time and reinforces your interest in the position. In your note, briefly express gratitude, mention specific points discussed in the interview, and reiterate your enthusiasm for the role. Sending this note within 24 hours of the interview can leave a positive impression and may help you stand out among other candidates.