Preparing for a Meta Data Engineer interview calls for both technical skill and a solid understanding of data ecosystems. The role centers on the architecture, management, and optimization of metadata, which underpins data governance and analytics. Preparation sharpens your technical skills and improves your ability to explain complex ideas clearly. This guide covers key concepts, commonly asked interview questions, and practical strategies to help you stand out in your interviews.

What to Expect in a Meta Data Engineer Interview

In a Meta Data Engineer interview, candidates can expect a mix of technical and behavioral questions. Interviews typically include multiple rounds, starting with a phone screen conducted by a recruiter, followed by one or more technical interviews with data engineering managers or senior engineers. The technical interviews often focus on data modeling, ETL processes, and SQL proficiency, alongside problem-solving exercises. Candidates may also face system design questions and discuss past project experiences. Overall, the interview process aims to assess both technical skills and cultural fit within the team.

Meta Data Engineer Interview Questions For Freshers

This collection of interview questions is tailored for freshers aspiring to become Meta Data Engineers. These questions cover fundamental concepts in data management, metadata frameworks, and basic programming skills that candidates should master to excel in their roles.

1. What is metadata and why is it important?

Metadata is data that provides information about other data. It helps in understanding, managing, and organizing data effectively. Metadata is crucial for data discovery, data governance, and ensuring data quality. It allows users to understand the context of data, such as its origin, format, and structure, making it easier to retrieve and use.

2. Can you explain the different types of metadata?

- Descriptive metadata: Provides information for discovery and identification, such as titles and keywords.

- Structural metadata: Indicates how different components of a data set are organized and related, for example, the layout of a database.

- Administrative metadata: Contains information to help manage resources, including creation dates and access rights.

Understanding these types is essential for effective data management and utilization.

3. What are some common metadata standards?

- Dublin Core (maintained by the Dublin Core Metadata Initiative, DCMI): A widely used element set for describing a variety of resources.

- MODS (Metadata Object Description Schema): A standard for encoding descriptive metadata for digital resources.

- PREMIS (Preservation Metadata: Implementation Strategies): A standard for the metadata needed to support the preservation of digital objects.

Familiarity with these standards is important for ensuring interoperability and effective data management.

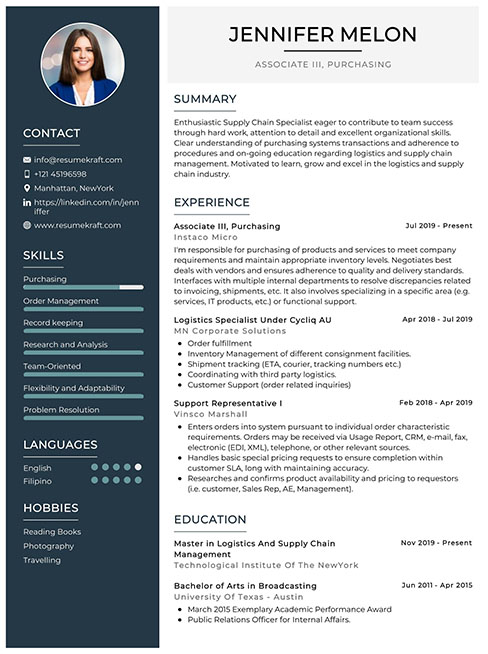

Build your resume in just 5 minutes with AI.

4. What is a metadata repository?

A metadata repository is a centralized database that stores and manages metadata for an organization. It serves as a reference point for data assets, allowing users to easily search for and retrieve metadata information. A well-structured repository enhances data governance, aids in compliance, and improves data quality by providing consistent metadata across platforms.

5. How can metadata improve data quality?

- Clarity: Well-defined metadata clarifies the meaning and context of data, reducing ambiguity.

- Consistency: Standardized metadata ensures uniformity across datasets, facilitating better analysis.

- Validation: Metadata can include rules for data validation, helping to maintain accuracy and reliability.

By improving data quality, metadata directly contributes to better decision-making processes.

6. What is ETL in the context of metadata?

ETL stands for Extract, Transform, Load, which is a data integration process used to combine data from different sources into a single data warehouse. In the context of metadata, ETL processes use metadata to understand the structure and context of the data being handled. This ensures that data is accurately transformed and properly loaded into the destination system, maintaining its integrity.

7. Can you describe a use case for metadata in a data analytics project?

In a data analytics project, metadata can be used to document the sources, transformations, and structures of datasets. For example, when analyzing customer behavior, metadata can track which data sources were used, how data was cleaned and transformed, and what metrics were derived. This documentation is essential for reproducibility, auditing, and maintaining data quality throughout the project.

8. What is data lineage and how does it relate to metadata?

Data lineage refers to the tracking of data’s origins and its movement through various processes. It is closely related to metadata, as metadata provides the necessary context for understanding where data comes from, how it is transformed, and where it is stored. Maintaining data lineage through metadata helps organizations ensure compliance, enhance data governance, and improve trust in their data.

9. What programming languages are commonly used for metadata management?

- Python: Widely used for data manipulation and automation tasks, including metadata processing.

- SQL: Essential for querying databases and managing structured metadata.

- Java: Often used in enterprise-level applications for metadata management.

Proficiency in these languages is beneficial for working with metadata systems and data integration tasks.

10. How would you document metadata for a new data source?

To document metadata for a new data source, I would record the following elements: data source name, description, type (structured or unstructured), data owner, data format, access rights, and update frequency. I would also capture any relevant business rules or transformations applied to the data. This documentation helps others understand and use the data effectively.
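As a rough illustration, the elements listed above could be captured in a small record type. This is a minimal sketch, not a standard schema: the class name, field names, and the `orders_db` example values are all hypothetical.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class DataSourceMetadata:
    """Hypothetical record layout; fields mirror the elements listed above."""
    name: str
    description: str
    source_type: str            # "structured" or "unstructured"
    owner: str
    data_format: str
    access_rights: list[str]
    update_frequency: str
    business_rules: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of required text fields that were left blank."""
        required = ["name", "description", "source_type",
                    "owner", "data_format", "update_frequency"]
        return [f for f in required if not getattr(self, f).strip()]


# Example entry for an invented source.
orders = DataSourceMetadata(
    name="orders_db",
    description="Transactional order records",
    source_type="structured",
    owner="sales-data-team",
    data_format="PostgreSQL table",
    access_rights=["analyst:read", "etl:write"],
    update_frequency="hourly",
    business_rules=["order_total must be non-negative"],
)

# Serialise to JSON so the entry can be stored or reviewed.
print(json.dumps(asdict(orders), indent=2))
```

Keeping the record as structured data rather than free text makes it straightforward to check for blank fields before an entry is accepted.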

11. What are some challenges in managing metadata?

- Data Silos: Different departments may maintain separate metadata repositories, leading to inconsistencies.

- Dynamic Nature of Data: As data evolves, keeping metadata up to date can be difficult.

- Lack of Standardization: Without agreed-upon standards, metadata can become fragmented and less useful.

Overcoming these challenges requires a strategic approach to metadata management and governance.

12. What is a data dictionary and how is it related to metadata?

A data dictionary is a centralized repository of information about data elements in a database, including definitions, data types, and relationships. It is a crucial component of metadata management, as it provides detailed descriptions of the data assets, facilitating better understanding and usage of data within an organization.
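To make this concrete, a basic data dictionary can often be derived from a database's own catalog. The sketch below assumes SQLite (via Python's built-in `sqlite3` module) and uses its `PRAGMA table_info` statement; the `customers` table and the `build_data_dictionary` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers ("
    "id INTEGER PRIMARY KEY, email TEXT NOT NULL, signup_date TEXT)"
)


def build_data_dictionary(conn, table):
    """Describe each column of `table` using SQLite's own catalog.

    The table name is interpolated directly, so only pass trusted names.
    """
    # PRAGMA table_info yields: cid, name, type, notnull, dflt_value, pk
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return [
        {
            "column": name,
            "type": col_type,
            "not_null": bool(notnull),
            "primary_key": bool(pk),
        }
        for _cid, name, col_type, notnull, _default, pk in rows
    ]


dictionary = build_data_dictionary(conn, "customers")
for entry in dictionary:
    print(entry)
```

A real data dictionary would add business definitions and relationships on top of these technical attributes, since those cannot be read from the catalog.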

13. How can automation be used in metadata management?

- Data Discovery: Automation tools can scan databases to identify and catalog metadata.

- Metadata Updates: Automated processes can ensure that metadata is consistently updated as data changes.

- Reporting: Automation can generate reports on metadata usage and compliance, saving time and effort.

Utilizing automation in metadata management can enhance efficiency and accuracy in handling data assets.

14. Explain the role of metadata in data governance.

Metadata plays a vital role in data governance by providing the necessary context and information needed to manage data assets effectively. It helps establish data ownership, access controls, and compliance requirements. By ensuring that metadata is well-documented and maintained, organizations can improve data quality, enhance security, and facilitate better decision-making.

15. What skills are essential for a career in metadata management?

- Analytical Skills: Ability to analyze and interpret data effectively.

- Technical Skills: Proficiency in programming languages and data management tools.

- Attention to Detail: Ensuring accuracy in documentation and metadata entries.

- Communication Skills: Ability to convey complex metadata concepts clearly.

These skills are crucial for ensuring effective metadata management and contributing to overall data governance efforts.

Here are some interview questions designed for freshers applying for Data Engineer positions at Meta. These questions cover fundamental concepts and basic skills necessary for the role.

16. What is data engineering and why is it important?

Data engineering is the practice of designing, constructing, and maintaining systems and architectures for collecting, storing, and analyzing data. It is crucial because it enables organizations to leverage data effectively for decision-making, analytics, and machine learning. Data engineers build pipelines that ensure data is accessible and reliable, facilitating insights and driving business strategies.

17. Can you explain the difference between structured and unstructured data?

- Structured Data: This type of data is organized and easily searchable in fixed fields within a record or file, typically stored in relational databases. Examples include tables in SQL databases.

- Unstructured Data: Unstructured data lacks a predefined format or organization, making it more complex to analyze. Examples include text files, images, videos, and social media posts.

Understanding these types of data is essential for data engineers, as different data types require different processing and storage solutions.

18. What is ETL, and how does it work?

ETL stands for Extract, Transform, Load. It is a data integration process that involves three main steps:

- Extract: Data is collected from various sources, such as databases, APIs, and flat files.

- Transform: The extracted data is cleaned, normalized, and transformed into a suitable format for analysis.

- Load: The transformed data is then loaded into a target system, usually a data warehouse.

ETL is vital for ensuring that data is accurately processed and available for analytics.
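The three steps can be sketched in a few lines of Python. This is a toy example under stated assumptions: the CSV content, the `payments` table, and the cleaning rules (trim names, drop rows with no amount) are invented for illustration, and an in-memory SQLite database stands in for a data warehouse.

```python
import csv
import io
import sqlite3

# Inline CSV standing in for a source file (hypothetical data).
RAW_CSV = """name,amount
 Alice ,10.50
Bob,
carol,7
"""


def extract(text):
    """Extract: read raw rows from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and title-case names, drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip records that fail the completeness rule
        cleaned.append((row["name"].strip().title(), float(amount)))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT name, amount FROM payments ORDER BY name").fetchall()
print(result)  # [('Alice', 10.5), ('Carol', 7.0)]
```

Keeping each step as a separate function makes it easier to test the transformation rules in isolation from the source and the target.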

19. What are some common data storage solutions?

- Relational Databases: Systems like MySQL and PostgreSQL that store data in structured tables.

- NoSQL Databases: Systems like MongoDB and Cassandra that are designed for unstructured or semi-structured data.

- Data Warehouses: Specialized systems like Amazon Redshift and Google BigQuery optimized for analytical queries.

Choosing the right storage solution depends on the nature of the data and the specific use cases of the organization.

20. What is a data pipeline?

A data pipeline is a series of data processing steps that involve collecting data from multiple sources, processing it through a series of transformations, and then delivering it to a destination, such as a database or a data warehouse. Pipelines automate the flow of data, ensuring that it is timely, reliable, and accessible for analytics and reporting.

21. How do you handle data quality issues?

Handling data quality issues involves several strategies:

- Validation: Implement checks to ensure data integrity and accuracy at the point of entry.

- Cleaning: Use data cleaning techniques to correct or remove inaccurate records.

- Monitoring: Continuously monitor data quality metrics and set up alerts for anomalies.

Proactively addressing data quality issues is essential for reliable data-driven decision-making.
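The strategies above might look like this in a very small form. The records, the two rules, and the 50% alert threshold are assumptions chosen for illustration only.

```python
# Hypothetical input records.
records = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 3, "email": "c@example.com", "age": -5},
]


def validate(record):
    """Validation: return the rule violations for one record (empty = valid)."""
    problems = []
    if not record["email"]:
        problems.append("missing email")
    if not 0 <= record["age"] <= 120:
        problems.append("age out of range")
    return problems


# Cleaning: keep valid records, set aside rejected ones with their reasons.
valid = [r for r in records if not validate(r)]
rejected = {r["id"]: validate(r) for r in records if validate(r)}

# Monitoring: track the failure rate and flag it above a chosen threshold.
ALERT_THRESHOLD = 0.5  # assumed value
failure_rate = len(rejected) / len(records)
alert = failure_rate > ALERT_THRESHOLD

print(valid, rejected, alert)
```

In practice the rejected records would usually be written to a quarantine table rather than discarded, so that the root cause can be investigated.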

22. What programming languages are commonly used in data engineering?

- Python: Widely used for data manipulation and ETL processes due to its simplicity and extensive libraries.

- SQL: Essential for querying and managing relational databases.

- Java/Scala: Often used in big data frameworks like Apache Spark.

Familiarity with these languages is crucial for aspiring data engineers to build and maintain data systems effectively.

23. Can you provide a simple SQL query to retrieve all records from a table?

Certainly! Here’s a basic SQL query:

SELECT * FROM employees;

This query selects all columns from the “employees” table. Understanding SQL is fundamental for data engineers to manipulate and retrieve data from relational databases.

Meta Data Engineer Intermediate Interview Questions

This set of interview questions is tailored for intermediate Meta Data Engineer candidates. It covers essential concepts such as data modeling, ETL processes, data governance, and performance tuning, which are crucial for effectively managing and utilizing metadata in data warehousing and analytics.

25. What is metadata and why is it important in data engineering?

Metadata is data that provides information about other data. It is crucial in data engineering as it helps in understanding the structure, context, and management of data. Metadata enhances data discoverability, facilitates data governance, and improves data quality by providing essential details such as data source, format, lineage, and usage statistics.

26. Explain the difference between operational metadata and descriptive metadata.

- Operational Metadata: This type details how data is created, processed, and managed. It includes information such as data lineage, data processing times, and system performance metrics.

- Descriptive Metadata: It provides information that helps users understand the content and context of the data. This includes data definitions, formats, and relationships between data entities.

Understanding both types of metadata is essential for effective data management and governance in data engineering.

27. How do you ensure data quality in a metadata management system?

- Validation Rules: Implement validation rules to check data accuracy and completeness during data ingestion.

- Data Profiling: Regularly profile data to assess its quality, identifying anomalies or inconsistencies.

- Automated Testing: Utilize automated tests to verify data quality periodically.

These practices help maintain high data quality standards, which are vital for reliable analytics and reporting.
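Data profiling in particular is easy to illustrate. The following sketch computes two common profile metrics, the fraction of nulls and the number of distinct values per column; the sample rows and the `profile` function are hypothetical.

```python
# Hypothetical sample of rows to profile.
rows = [
    {"country": "DE", "revenue": 120.0},
    {"country": "DE", "revenue": None},
    {"country": "FR", "revenue": 80.0},
    {"country": None, "revenue": 95.5},
]


def profile(rows):
    """Return null fraction and distinct count for every column."""
    report = {}
    for column in rows[0].keys():
        values = [row[column] for row in rows]
        non_null = [v for v in values if v is not None]
        report[column] = {
            "null_fraction": (len(values) - len(non_null)) / len(values),
            "distinct": len(set(non_null)),
        }
    return report


report = profile(rows)
print(report)
```

Comparing such a report between runs is one simple way to spot anomalies, for example a sudden jump in the null fraction of a column.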

28. What are the best practices for designing a metadata repository?

- Scalability: Ensure the repository can grow with increasing data volume and complexity.

- Standardization: Use standardized metadata schemas to promote consistency across the organization.

- User Accessibility: Make the repository user-friendly and easily accessible for data stewards and analysts.

Following these best practices enhances the effectiveness and usability of the metadata repository.

29. Describe the process of data lineage and its significance.

Data lineage refers to the tracking of data flow from its origin to its final destination, including all transformations along the way. It is significant because it provides transparency, which is essential for compliance, auditing, and troubleshooting data issues. Understanding data lineage helps organizations maintain data integrity and trust in their analytics.

30. How can you implement version control for metadata?

Version control for metadata can be implemented with a version control system such as Git. Metadata definitions are stored as files in a version-controlled repository, which lets data engineers review changes, collaborate, and roll back to previous versions if necessary. This practice improves the accountability and traceability of changes made to metadata.

31. What is the role of ETL in metadata management?

ETL (Extract, Transform, Load) plays a crucial role in metadata management by facilitating the movement and transformation of data. During the ETL process, metadata is generated that describes data sources, transformation rules, and loading procedures. This metadata helps ensure that data is accurately processed and can be effectively utilized in analytics and reporting.

32. Explain how you would use metadata for data governance.

- Policy Enforcement: Use metadata to enforce data governance policies, ensuring compliance with regulations.

- Data Stewardship: Assign data stewards based on metadata to manage data quality and integrity.

- Access Control: Implement metadata-driven access control to protect sensitive data and ensure authorized access.

Utilizing metadata in these ways strengthens the overall data governance framework, enhancing data security and compliance.

33. What tools or technologies do you prefer for metadata management?

Popular tools for metadata management include Apache Atlas, Alation, and Collibra. These tools offer features such as metadata cataloging, data lineage tracking, and data governance capabilities. Choosing the right tool depends on the organization’s specific needs, such as integration capabilities, user interface, and scalability.

34. How would you handle duplicate metadata entries?

Handling duplicate metadata entries involves implementing deduplication strategies, such as establishing unique identifiers for each metadata entry and utilizing automated tools to identify and merge duplicates. Regular audits of the metadata repository can also help detect duplicates early. This ensures a clean and reliable metadata environment.
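One possible shape for such a deduplication step is sketched below. It assumes the unique identifier is a normalised `schema.table.column` string and that, when duplicates are merged, blank fields are filled from later entries; both choices and all names are illustrative.

```python
def entry_key(entry):
    """Normalised identifier: trimmed, lower-cased schema.table.column."""
    return ".".join(
        entry[part].strip().lower() for part in ("schema", "table", "column")
    )


def merge_duplicates(entries):
    """Collapse entries with the same key, filling blanks from later ones."""
    merged = {}
    for entry in entries:
        key = entry_key(entry)
        if key not in merged:
            merged[key] = dict(entry)
            continue
        for name, value in entry.items():
            if not merged[key].get(name):
                merged[key][name] = value
    return merged


# Hypothetical entries; the first two describe the same column.
entries = [
    {"schema": "sales", "table": "Orders", "column": "id", "description": ""},
    {"schema": "Sales ", "table": "orders", "column": "ID",
     "description": "Order primary key"},
    {"schema": "sales", "table": "orders", "column": "total",
     "description": "Order total in USD"},
]

catalog = merge_duplicates(entries)
print(sorted(catalog))  # ['sales.orders.id', 'sales.orders.total']
```

The merge policy here is deliberately simple; when two duplicates hold conflicting non-blank values, a real system would more likely flag them for review by a data steward.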

35. What is the significance of data classification in metadata management?

Data classification is significant in metadata management as it helps categorize data based on its sensitivity, usage, and compliance requirements. This classification enables organizations to apply appropriate security measures, manage access controls effectively, and ensure compliance with data protection regulations, thus enhancing overall data governance.

36. How do you approach metadata documentation?

- Consistent Format: Use a consistent format for documenting metadata to ensure clarity and ease of understanding.

- Comprehensive Descriptions: Provide detailed descriptions for each metadata entry, including definitions and relationships.

- Regular Updates: Ensure that documentation is regularly updated to reflect changes in data sources or structures.

Effective documentation improves usability and provides valuable context for users accessing metadata.

37. Explain how to use metadata to improve data discovery.

Metadata enhances data discovery by providing searchable attributes that describe data assets. By implementing a metadata catalog that includes searchable keywords, descriptions, and relationships, organizations can enable users to quickly find relevant datasets. This improved discoverability leads to increased data utilization and supports better decision-making.
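A keyword search over catalog entries can be sketched as follows. The catalog contents and the `search` function are hypothetical, and a production catalog would typically use a proper search index rather than a linear scan.

```python
# Hypothetical catalog entries with searchable attributes.
CATALOG = [
    {"name": "customer_orders",
     "description": "Daily order facts per customer",
     "keywords": ["sales", "orders"]},
    {"name": "web_sessions",
     "description": "Clickstream sessions from the website",
     "keywords": ["behavior", "web"]},
    {"name": "churn_scores",
     "description": "Model output predicting customer churn",
     "keywords": ["ml", "retention"]},
]


def search(catalog, term):
    """Return names of assets whose name, description, or keywords match."""
    needle = term.strip().lower()
    hits = []
    for asset in catalog:
        searchable = [asset["name"], asset["description"], *asset["keywords"]]
        if any(needle in text.lower() for text in searchable):
            hits.append(asset["name"])
    return hits


print(search(CATALOG, "customer"))  # ['customer_orders', 'churn_scores']
```

Note that the second hit comes from the description rather than the name, which is the practical benefit of maintaining descriptive metadata.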

38. What challenges have you faced in metadata management and how did you overcome them?

- Data Silos: Overcome by integrating disparate systems and consolidating metadata into a unified repository.

- Inconsistent Standards: Address by establishing and enforcing organization-wide metadata standards and guidelines.

- Stakeholder Buy-In: Achieve by demonstrating the value of metadata management through pilot projects that showcase its benefits.

Overcoming these challenges is essential for building a robust metadata management framework.

Below are further intermediate-level interview questions specifically tailored for a Meta Data Engineer role. These questions address practical applications, best practices, and real-world scenarios that a candidate may encounter in the field.

40. What are the key components of a metadata management strategy?

A comprehensive metadata management strategy should include the following key components:

- Data Governance: Establish policies and procedures for data quality, data ownership, and compliance.

- Metadata Repository: Centralized storage for metadata that allows easy access and management.

- Data Lineage: Tracking the flow of data from its origin to its final destination to ensure integrity.

- Tool Integration: Use of tools for automated metadata extraction and management to enhance efficiency.

- Stakeholder Engagement: Involvement of all relevant stakeholders to ensure metadata meets business needs.

These components work together to enhance data quality and usability across the organization.

41. How do you ensure data quality in a metadata management process?

Ensuring data quality involves several best practices:

- Regular Audits: Conduct audits to check for inconsistencies and inaccuracies in the metadata.

- Validation Rules: Implement rules to validate data inputs, ensuring they meet predefined standards.

- Automated Monitoring: Use automated tools to monitor data quality metrics continuously.

- Feedback Loop: Establish a feedback loop with data users to identify issues and improve processes.

By focusing on these practices, organizations can maintain high-quality metadata that supports accurate data analysis and reporting.

42. What is data lineage, and why is it important in metadata management?

Data lineage refers to the tracking of data as it flows from its origin to its final destination, showing how it is transformed along the way. It is important because:

- Data Transparency: Provides visibility into data sources and transformations, which is crucial for trust and understanding.

- Impact Analysis: Helps assess the impact of changes in data structures or processes on downstream applications.

- Regulatory Compliance: Assists in meeting regulatory requirements by demonstrating data traceability.

- Troubleshooting: Facilitates easier identification of issues related to data quality or integrity.

Overall, data lineage enhances the reliability and accountability of data management practices.
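Impact analysis is essentially a graph traversal. The sketch below assumes lineage is stored as a mapping from each dataset to its direct downstream consumers and walks it breadth-first; the dataset names and the `downstream` function are invented for illustration.

```python
from collections import deque

# Hypothetical lineage: dataset -> datasets that read from it directly.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["mart.daily_revenue", "mart.customer_ltv"],
    "mart.daily_revenue": ["dashboard.revenue"],
    "mart.customer_ltv": [],
    "dashboard.revenue": [],
}


def downstream(lineage, start):
    """Return every dataset affected by a change to `start`, nearest first."""
    seen = set()
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


affected = downstream(LINEAGE, "staging.orders")
print(affected)
# ['mart.daily_revenue', 'mart.customer_ltv', 'dashboard.revenue']
```

Reversing the edges gives the opposite view, tracing a suspicious dashboard number back to its sources, which is the troubleshooting use mentioned above.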

43. Can you describe a scenario where you had to optimize a metadata extraction process?

In a previous project, we faced performance issues with our metadata extraction process from a large database. Here’s how I optimized it:

- Batch Processing: Instead of extracting metadata in real-time, I implemented batch processing to reduce system load.

- Incremental Extraction: Only new or changed records were extracted, minimizing data transfer and processing time.

- Parallel Processing: I utilized parallel processing techniques to handle multiple extraction tasks simultaneously, improving throughput.

- Database Indexing: Improved database indexing on key tables to speed up query performance.

These optimizations led to a significant reduction in extraction time and resource usage, enhancing overall system performance.
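Incremental extraction is commonly implemented with a watermark, meaning the latest change timestamp already processed. The sketch below assumes a `table_metadata` table with an `updated_at` column holding ISO 8601 strings; the table, the index name, and the sample rows are hypothetical, and SQLite stands in for the source database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE table_metadata (table_name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO table_metadata VALUES (?, ?)",
    [
        ("orders", "2024-01-01T00:00:00"),
        ("customers", "2024-01-05T00:00:00"),
        ("payments", "2024-01-09T00:00:00"),
    ],
)
# Index the watermark column so the range filter avoids a full scan.
conn.execute("CREATE INDEX idx_updated_at ON table_metadata (updated_at)")


def extract_changes(conn, last_watermark):
    """Return rows changed after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT table_name, updated_at FROM table_metadata "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][1] if rows else last_watermark
    return rows, new_watermark


changed, watermark = extract_changes(conn, "2024-01-03T00:00:00")
print(changed)    # customers and payments only
print(watermark)  # 2024-01-09T00:00:00
```

The new watermark should be persisted only after the extracted batch has been processed successfully, so a failed run is retried from the same point.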

44. What tools and technologies do you recommend for effective metadata management?

For effective metadata management, I recommend the following tools and technologies:

- Apache Atlas: An open-source tool for data governance and metadata management that supports data lineage and classification.

- Collibra: A comprehensive data governance platform that provides robust metadata management capabilities.

- Informatica Metadata Manager: A tool that helps in managing and visualizing metadata across various data environments.

- Talend: An ETL tool that provides metadata management features alongside data integration capabilities.

- AWS Glue: A fully managed ETL service that offers a data catalog and metadata management features in the cloud.

These tools facilitate the organization, governance, and utilization of metadata in diverse environments.

45. How do you handle versioning of metadata in a dynamic data environment?

Handling versioning of metadata in a dynamic environment involves several strategies:

- Change Tracking: Implement systems to track changes in metadata, including who made changes and when.

- Version Control Systems: Use version control tools (like Git) to manage different versions of metadata schemas and definitions.

- Backward Compatibility: Design metadata structures to be backward compatible, allowing older versions to coexist with newer ones.

- Documentation: Maintain comprehensive documentation of changes and version histories to aid stakeholders in understanding modifications.

These practices ensure that the metadata remains accurate and usable despite ongoing changes in the data environment.
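Change tracking can be sketched without any external tooling. The example below is an assumption-laden illustration, not a replacement for Git: the `MetadataHistory` class is hypothetical, and it records who changed a definition and when, skipping commits that do not alter the content.

```python
import hashlib
import json
from datetime import datetime, timezone


class MetadataHistory:
    """Append-only version history for one metadata definition."""

    def __init__(self):
        self.versions = []

    def commit(self, definition, author):
        """Store a new version if the content changed; return the version."""
        # Sorted keys make logically equal definitions hash identically.
        canonical = json.dumps(definition, sort_keys=True)
        digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
        if self.versions and self.versions[-1]["digest"] == digest:
            return len(self.versions)  # unchanged, keep the current version
        self.versions.append({
            "digest": digest,
            "canonical": canonical,
            "author": author,
            "committed_at": datetime.now(timezone.utc).isoformat(),
        })
        return len(self.versions)

    def get(self, version):
        """Return the definition as of a 1-based version number."""
        return json.loads(self.versions[version - 1]["canonical"])


history = MetadataHistory()
v1 = history.commit({"table": "orders", "columns": ["id", "total"]}, "alice")
# Same content with keys in a different order: no new version is created.
v2 = history.commit({"columns": ["id", "total"], "table": "orders"}, "bob")
v3 = history.commit(
    {"table": "orders", "columns": ["id", "total", "currency"]}, "bob"
)
print(v1, v2, v3)  # 1 1 2
```

Because every version stays retrievable through `get`, consumers pinned to an older definition can keep working while newer versions are introduced.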

46. What role does data cataloging play in metadata management?

Data cataloging is essential for effective metadata management as it helps in:

- Discoverability: Enables users to easily find and access data assets across the organization.

- Data Governance: Supports governance initiatives by providing context and lineage for data assets.

- Collaboration: Facilitates collaboration between data producers and consumers by providing a shared understanding of data definitions.

- Data Quality: Helps in assessing the quality of data assets through user feedback and data profiling.

By maintaining a well-organized data catalog, organizations can derive more value from their data assets.

47. Can you explain how to implement a metadata-driven architecture?

Implementing a metadata-driven architecture involves several key steps:

- Define Metadata Standards: Establish clear standards for what metadata is required for all data assets.

- Develop a Metadata Repository: Create a centralized repository to store and manage all metadata.

- Integrate with Data Pipelines: Ensure that all data ingestion and transformation processes capture and update metadata automatically.

- Utilize Metadata for Automation: Use metadata to drive automation in data processing, reporting, and analytics.

- Monitor and Evolve: Continuously monitor the effectiveness of the metadata-driven architecture and make improvements as needed.

This approach enhances the agility and responsiveness of data systems, allowing for better decision-making and analytics.
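The idea of using metadata to drive automation can be shown with a pipeline whose behavior is defined entirely by a metadata document. Everything here is a hypothetical sketch: the metadata layout, the operation names, and the `run_pipeline` function.

```python
# Hypothetical pipeline definition; in practice this would come from the
# metadata repository rather than being hard-coded.
PIPELINE_METADATA = {
    "source_columns": ["id", "email", "amount"],
    "transformations": [
        {"column": "email", "operation": "lowercase"},
        {"column": "amount", "operation": "to_float"},
    ],
}

# Registry mapping operation names used in metadata to implementations.
OPERATIONS = {
    "lowercase": str.lower,
    "to_float": float,
    "strip": str.strip,
}


def run_pipeline(rows, metadata):
    """Select and transform columns exactly as the metadata describes."""
    output = []
    for row in rows:
        record = {col: row[col] for col in metadata["source_columns"]}
        for step in metadata["transformations"]:
            operation = OPERATIONS[step["operation"]]
            record[step["column"]] = operation(record[step["column"]])
        output.append(record)
    return output


rows = [{"id": 1, "email": "A@Example.COM", "amount": "12.5", "ignored": "x"}]
result = run_pipeline(rows, PIPELINE_METADATA)
print(result)  # [{'id': 1, 'email': 'a@example.com', 'amount': 12.5}]
```

With this design, adding a column or a transformation means editing metadata rather than code, which is the main appeal of a metadata-driven architecture.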

Meta Data Engineer Interview Questions for Experienced

This set of interview questions is tailored for experienced Meta Data Engineers, focusing on advanced topics such as system architecture, data optimization strategies, scalability challenges, design patterns, and leadership in technical teams. Mastering these areas is crucial for candidates aiming to excel in high-level engineering roles.

49. What are the key architectural considerations when designing a metadata management system?

When designing a metadata management system, key architectural considerations include:

- Scalability: Ensure the system can handle growing amounts of metadata efficiently.

- Performance: Optimize for quick query responses and low latency.

- Data Integrity: Implement strict validation rules to maintain accurate metadata.

- Interoperability: Enable seamless integration with various data sources and formats.

- Security: Protect sensitive metadata through access controls and encryption.

These considerations will help create a robust metadata management system that supports organizational needs.

50. How do you optimize metadata storage for large-scale data systems?

To optimize metadata storage in large-scale data systems, consider the following strategies:

- Use a relational database with indexing for efficient querying.

- Implement partitioning strategies to distribute data across multiple storage nodes.

- Utilize caching mechanisms to reduce read times for frequently accessed metadata.

- Consider using NoSQL databases for unstructured metadata that require flexibility.

- Regularly audit and clean up outdated or unused metadata to save storage space.

These strategies enhance performance and scalability in managing large volumes of metadata.
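Of the strategies above, caching is the simplest to demonstrate. This sketch uses `functools.lru_cache` from the Python standard library as an in-process cache; the `_BACKEND` dictionary is a stand-in for a slow metadata store, and the table name and values are invented.

```python
from functools import lru_cache

# Stand-in for a slow metadata backend (hypothetical contents).
_BACKEND = {
    "sales.orders": (("owner", "sales-data-team"), ("row_count", 1200)),
}
backend_calls = {"count": 0}


@lru_cache(maxsize=1024)
def get_table_metadata(table_name):
    """Fetch metadata for one table, caching results in process memory."""
    backend_calls["count"] += 1
    # Tuples are immutable, so a cached value is safe to share.
    return _BACKEND[table_name]


first = get_table_metadata("sales.orders")
second = get_table_metadata("sales.orders")  # served from the cache
stats = get_table_metadata.cache_info()
print(backend_calls["count"], stats.hits, stats.misses)  # 1 1 1
```

Cached entries go stale when the underlying metadata changes, so the cache needs an invalidation step, for example calling `get_table_metadata.cache_clear()` after an update.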

51. Can you explain the concept of metadata lineage and its importance?

Metadata lineage, more commonly called data lineage, refers to the tracking of the flow and transformation of data from its origin to its final destination. It is essential for several reasons:

- Data Governance: Enables organizations to maintain compliance and understand the lifecycle of data.

- Impact Analysis: Helps assess the effects of changes in data sources on downstream processes.

- Debugging: Facilitates troubleshooting data quality issues by tracing back to the root cause.

- Audit Trails: Provides necessary documentation for regulatory requirements.

Understanding metadata lineage is crucial for ensuring data accuracy and accountability in data management.

52. What design patterns are commonly used in metadata management systems?

Common design patterns in metadata management systems include:

- Repository Pattern: Centralizes metadata access and management in a single location.

- Observer Pattern: Allows systems to react to changes in metadata dynamically.

- Factory Pattern: Simplifies the creation of metadata objects based on specific requirements.

- Decorator Pattern: Enhances existing metadata objects with additional functionality without altering their structure.

- Singleton Pattern: Ensures a single instance of a metadata store is used throughout the application.

Implementing these patterns can lead to a more maintainable and scalable metadata management system.
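As one example from the list, the Observer pattern could be applied to a metadata store as sketched below. The `MetadataStore` class and the audit-log subscriber are hypothetical and kept minimal.

```python
class MetadataStore:
    """In-memory metadata store that notifies subscribers of changes."""

    def __init__(self):
        self._entries = {}
        self._observers = []

    def subscribe(self, callback):
        """Register a callable taking (key, old_value, new_value)."""
        self._observers.append(callback)

    def update(self, key, value):
        """Store the new value, then notify every subscriber."""
        old = self._entries.get(key)
        self._entries[key] = value
        for callback in self._observers:
            callback(key, old, value)


# A subscriber that records every change, for example for auditing.
audit_log = []
store = MetadataStore()
store.subscribe(lambda key, old, new: audit_log.append((key, old, new)))

store.update("sales.orders.owner", "team-a")
store.update("sales.orders.owner", "team-b")
print(audit_log)
```

The store does not need to know what its subscribers do, so cache invalidation, search reindexing, or notifications can be added later without changing the store itself.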

53. How do you ensure data quality in metadata management?

Ensuring data quality in metadata management involves several best practices:

- Validation: Implement rules to verify the accuracy and completeness of metadata as it is ingested.

- Regular Audits: Conduct periodic reviews of metadata to identify and correct inaccuracies.

- Automated Monitoring: Use tools to continuously monitor metadata for anomalies or inconsistencies.

- Feedback Mechanism: Establish a process for users to report issues or suggest improvements related to metadata.

- Documentation: Maintain clear documentation of metadata standards and guidelines for all team members.

These practices help maintain high data quality standards in metadata management.

54. Describe the role of a metadata catalog and its features.

A metadata catalog acts as a centralized repository for metadata, providing features such as:

- Search and Discovery: Enables users to easily find and understand available datasets.

- Data Profiling: Offers insights into data characteristics and quality metrics.

- Data Governance: Facilitates compliance by managing data lineage and ownership information.

- Collaboration: Allows teams to share metadata and annotations, enhancing collective knowledge.

- Integration: Connects with various data sources and tools for seamless metadata management.

A well-designed metadata catalog enhances data discoverability and usability across the organization.

55. What techniques do you use to scale a metadata management solution?

Techniques to scale a metadata management solution include:

- Horizontal Scaling: Distributing the workload across multiple servers to handle increased load.

- Data Partitioning: Dividing metadata into smaller, more manageable segments for parallel processing.

- Load Balancing: Distributing incoming requests evenly across servers to optimize resource use.

- Asynchronous Processing: Implementing queues to process metadata updates without blocking user interactions.

- Cloud Solutions: Utilizing cloud services for elastic scalability based on demand.

These techniques ensure that the metadata management solution can grow with organizational needs.

56. How do you implement security in a metadata management system?

Implementing security in a metadata management system involves:

- Access Controls: Define user roles and permissions to restrict access to sensitive metadata.

- Encryption: Use encryption for metadata at rest and in transit to protect against unauthorized access.

- Audit Logs: Maintain detailed logs of user actions to track access and modifications to metadata.

- Authentication: Implement strong authentication mechanisms, such as multi-factor authentication.

- Regular Security Reviews: Conduct periodic assessments of security measures to identify and mitigate vulnerabilities.

These practices help secure sensitive metadata and maintain compliance with data protection regulations.
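The access control and audit log points can be sketched together. The roles, permissions, and log fields below are hypothetical; a real system would typically delegate authentication to an identity provider and write audit records to durable, tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "steward": {"read", "write"},
    "admin": {"read", "write", "delete"},
}

# In-memory stand-in for an audit log store.
audit_log: list[dict] = []


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, set())


def access_metadata(user: str, role: str, action: str, asset: str) -> bool:
    """Check permission and record the attempt, whether or not it succeeds."""
    allowed = is_allowed(role, action)
    audit_log.append(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "asset": asset,
            "allowed": allowed,
        }
    )
    return allowed
```

Logging denied attempts as well as successful ones is a deliberate choice here, since repeated denials are often the first sign of misuse.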

57. What role does metadata play in data warehousing?

In data warehousing, metadata plays a crucial role by providing:

- Data Definitions: Clear descriptions of data elements, including their meanings and formats.

- Data Lineage: Insights into the origin and transformations of data as it flows through the warehouse.

- Schema Information: Details about the structure of the data warehouse, including tables and relationships.

- Performance Metrics: Information on data refresh rates and query performance for optimization purposes.

- Business Glossary: A shared vocabulary that aligns technical and business stakeholders on data terminology.

Metadata enhances the usability and governance of data within a warehouse environment.
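Data lineage is often easiest to explain as a graph. The following sketch assumes lineage is stored as a mapping from each dataset to its direct upstream sources; the dataset names are made up for illustration.

```python
# Hypothetical lineage: each dataset maps to its direct upstream sources.
LINEAGE = {
    "sales_report": ["orders_clean"],
    "orders_clean": ["orders_raw", "customers_raw"],
    "orders_raw": [],
    "customers_raw": [],
}


def upstream(dataset: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    seen: set[str] = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(lineage.get(node, []))
    return seen
```

The same traversal run over a reversed mapping gives downstream impact analysis, which answers "what breaks if this table changes?".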

58. How would you mentor a junior metadata engineer?

Mentoring a junior metadata engineer involves several key strategies:

- Knowledge Sharing: Regularly share insights on best practices, tools, and methodologies in metadata management.

- Hands-On Training: Provide opportunities for them to work on real projects with guidance and support.

- Encouragement: Foster an environment where they feel comfortable asking questions and seeking help.

- Feedback: Offer constructive feedback on their work to help them improve their skills.

- Career Development: Help them set goals and identify areas for growth within the field.

Effective mentoring can significantly accelerate the professional development of a junior engineer.

59. Explain the concept of schema evolution in metadata management.

Schema evolution refers to the ability of a metadata management system to adapt to changes in data structures over time. Key aspects include:

- Backward Compatibility: Ensuring new schema changes do not break existing applications or queries.

- Version Control: Keeping track of different schema versions to manage transitions smoothly.

- Migration Tools: Providing utilities to assist in migrating data from one schema version to another.

- Flexibility: Allowing dynamic updates to the schema without requiring system downtime.

- Documentation: Maintaining clear records of schema changes for future reference.

Effective schema evolution is critical for accommodating changing business needs and data sources.
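A simple compatibility check can illustrate the backward compatibility point. This sketch treats a schema as a mapping of field name to type name and flags removed or retyped fields. That rule is a simplification assumed for the example; schema registries define compatibility more formally.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List changes that could break consumers written against `old`.

    Added fields are treated as safe; removed or retyped fields are not.
    """
    problems = []
    for field_name, old_type in old.items():
        if field_name not in new:
            problems.append(f"removed field: {field_name}")
        elif new[field_name] != old_type:
            problems.append(
                f"type changed: {field_name} ({old_type} -> {new[field_name]})"
            )
    return problems


v1 = {"id": "int", "email": "string"}
v2 = {"id": "int", "email": "string", "signup_date": "date"}  # additive change
v3 = {"id": "string", "signup_date": "date"}  # retyped and removed fields
```

Here `breaking_changes(v1, v2)` returns an empty list, while comparing `v1` with `v3` reports both the retyped `id` and the removed `email`. A check like this could run in CI before a schema change is deployed.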

60. What are the challenges faced in metadata management for big data?

Challenges in metadata management for big data include:

- Volume: The sheer amount of metadata generated can be overwhelming, requiring efficient storage solutions.

- Variety: Managing diverse metadata types from various sources necessitates flexible schemas and tools.

- Velocity: Real-time data processing demands quick updates and retrieval of metadata.

- Data Governance: Ensuring compliance and quality across large datasets can be complex.

- Integration: Connecting disparate data systems and their metadata often presents technical hurdles.

Addressing these challenges is essential for effective big data management and analytics.

The following four questions for experienced candidates focus on architecture, optimization, scalability, design patterns, and leadership.

64. Can you explain how you would design a scalable data pipeline?

To design a scalable data pipeline, I would focus on the following key aspects:

- Modularity: Break the pipeline into modular components that can be independently developed, tested, and deployed.

- Asynchronous Processing: Use message queues (like Kafka or RabbitMQ) to decouple the components, allowing for asynchronous data processing and improving scalability.

- Horizontal Scaling: Implement the pipeline in a way that allows for horizontal scaling, such as using container orchestration platforms like Kubernetes.

- Data Partitioning: Partition data effectively to ensure that workloads are balanced across the system.

This design approach not only supports scalability but also enhances maintainability and fault tolerance.
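The decoupling idea can be shown in miniature with Python's standard library. In this sketch an in-process `queue.Queue` stands in for a broker such as Kafka or RabbitMQ, and the stage functions are hypothetical. It illustrates the pattern, not a production pipeline.

```python
import queue
import threading

SENTINEL = None  # signals that the producer has finished


def extract(records, out_q):
    """Producer stage: push raw records onto the queue."""
    for record in records:
        out_q.put(record)
    out_q.put(SENTINEL)


def transform(in_q, results):
    """Consumer stage: normalize records as they arrive."""
    while True:
        record = in_q.get()
        if record is SENTINEL:
            break
        results.append(record.strip().lower())


def run_pipeline(records):
    # A bounded queue applies backpressure if the consumer falls behind.
    q = queue.Queue(maxsize=100)
    results = []
    producer = threading.Thread(target=extract, args=(records, q))
    consumer = threading.Thread(target=transform, args=(q, results))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return results
```

Because the stages only share the queue, either one can be replaced or scaled without changing the other. With several consumers, output ordering would no longer be guaranteed.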

65. What design patterns do you find most useful in data engineering, and why?

Several design patterns are particularly useful in data engineering:

- Pipeline Pattern: This pattern helps in structuring data processing workflows, ensuring that data flows smoothly from one stage to the next.

- Event Sourcing: It allows capturing changes to application state as a sequence of events, which can be replayed for debugging or auditing.

- Lambda Architecture: This pattern combines a batch layer, which periodically recomputes accurate views over the full dataset, with a speed layer that processes recent data in real time to keep latency low.

Utilizing these patterns can enhance data integrity, maintainability, and performance in data engineering projects.
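Event sourcing is easier to discuss with a small example. The sketch below stores tag changes on a dataset as events and rebuilds the current state by replaying them. The event kinds and names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str  # "tag_added" or "tag_removed" (hypothetical event kinds)
    tag: str


def apply_event(tags: frozenset, event: Event) -> frozenset:
    """Return the new state after applying one event; state is never mutated."""
    if event.kind == "tag_added":
        return tags | {event.tag}
    if event.kind == "tag_removed":
        return tags - {event.tag}
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: list[Event]) -> frozenset:
    """Rebuild state from scratch by applying events in order."""
    state: frozenset = frozenset()
    for event in events:
        state = apply_event(state, event)
    return state


log = [
    Event("tag_added", "pii"),
    Event("tag_added", "finance"),
    Event("tag_removed", "pii"),
]
```

Replaying a prefix of the log, such as `replay(log[:2])`, reconstructs the state at an earlier point in time, which is what makes the pattern useful for auditing and debugging.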

66. How would you optimize a slow-performing ETL process?

To optimize a slow-performing ETL process, consider the following strategies:

- Parallel Processing: Implement parallelism in the ETL jobs to process multiple data chunks simultaneously.

- Incremental Loads: Instead of full data loads, use incremental extraction to process only the changed data since the last update.

- Efficient Data Storage: Optimize data storage formats (e.g., Parquet for columnar storage) to reduce I/O and speed up data retrieval.

- Indexing: Create indexes on source databases to speed up data extraction queries.

By applying these optimizations, ETL processes can be significantly accelerated, improving overall system performance.
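Incremental loading is commonly implemented with a watermark: the pipeline remembers the latest change timestamp it has processed and asks only for newer rows. The sketch below assumes each row carries an `updated_at` value in ISO 8601 format (so string comparison matches chronological order); the column name and sample rows are illustrative.

```python
def incremental_extract(rows, last_watermark):
    """Return rows changed after `last_watermark` plus the new watermark."""
    changed = [row for row in rows if row["updated_at"] > last_watermark]
    # If nothing changed, keep the previous watermark.
    new_watermark = max(
        (row["updated_at"] for row in changed), default=last_watermark
    )
    return changed, new_watermark


source_rows = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00"},
    {"id": 3, "updated_at": "2024-01-03T00:00:00"},
]
```

Starting from a watermark of `"2024-01-01T00:00:00"`, the first run picks up rows 2 and 3, and a second run with the returned watermark picks up nothing. Note that the strict `>` comparison can miss late-arriving rows that share the watermark timestamp, so real pipelines often add an overlap window and deduplicate.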

67. Describe your experience with mentoring junior data engineers.

Mentoring junior data engineers involves several key practices:

- Knowledge Sharing: Regularly conduct knowledge-sharing sessions on best practices, tools, and design patterns in data engineering.

- Code Reviews: Provide constructive feedback during code reviews to help juniors improve coding skills and understand design choices.

- Project Guidance: Assign them meaningful tasks within larger projects, guiding them through challenges and encouraging independent problem-solving.

- Career Development: Discuss their career goals and provide resources or training opportunities that align with those aspirations.

Effective mentoring not only helps juniors grow but also strengthens the overall team and enhances project outcomes.

How to Prepare for Your Meta Data Engineer Interview

Preparing for a Meta Data Engineer interview requires a strategic approach, focusing on technical skills, data management concepts, and problem-solving abilities. Understanding the company’s data infrastructure and tools is equally important for showcasing your fit for the role.

- **Understand Data Management Principles**: Familiarize yourself with data governance, data quality, and metadata management concepts. Brush up on best practices for managing large datasets and how metadata plays a crucial role in data integrity and usability.

- **Master SQL and Data Querying**: Since data engineers often work with databases, strengthen your SQL skills. Practice writing complex queries, optimizing them for performance, and extracting meaningful insights from large datasets.

- **Explore ETL Processes**: Gain a solid understanding of Extract, Transform, Load (ETL) processes. Learn about various ETL tools and frameworks such as Apache NiFi, Talend, or AWS Glue, and be ready to discuss how you’ve implemented these in past projects.

- **Familiarize Yourself with Data Warehousing Concepts**: Understand the architecture and design of data warehouses. Study concepts such as star and snowflake schemas, and the differences between OLTP and OLAP systems to demonstrate your knowledge in data storage solutions.

- **Learn about Big Data Technologies**: Familiarize yourself with big data tools like Hadoop, Spark, and Kafka. Understanding how these technologies work together will help you discuss scalable data solutions and processing large volumes of data effectively.

- **Practice Problem-Solving Skills**: Engage in coding challenges and data structure problems relevant to data engineering. Websites like LeetCode and HackerRank can provide exercises to sharpen your analytical thinking and coding skills under pressure.

- **Review System Design Principles**: Prepare for system design questions by understanding how to architect data pipelines and scalable systems. Be ready to discuss trade-offs, data flow, and how to handle data consistency and availability in your designs.

Common Meta Data Engineer Interview Mistakes to Avoid

When interviewing for a Meta Data Engineer position, candidates often fall into several common traps that can hinder their chances of success. Understanding these mistakes can help improve preparation and performance during the interview process.

- Neglecting Data Governance Concepts: Failing to demonstrate knowledge of data governance frameworks can signal a lack of understanding of data management principles, which are crucial for a Meta Data Engineer role.

- Overlooking Technical Skills: Not showcasing relevant technical skills, such as SQL proficiency or familiarity with data modeling tools, can lead interviewers to question your capabilities in handling data workflows.

- Underestimating Collaboration: Ignoring the importance of teamwork and communication skills can be detrimental, as Meta Data Engineers often work with cross-functional teams to ensure data integrity and accessibility.

- Lack of Practical Examples: Failing to provide concrete examples of past projects or experiences can weaken your responses, as interviewers look for evidence of applied knowledge and problem-solving skills.

- Inadequate Understanding of Metadata Standards: Not being familiar with key metadata standards and frameworks, such as Dublin Core or ISO 11179, can demonstrate a lack of depth in the field.

- Ignoring Company-Specific Practices: Not researching Meta’s specific data practices, tools, and technologies can make you seem unprepared and less enthusiastic about the role.

- Not Asking Questions: Failing to ask insightful questions about the team, projects, or company culture can reflect a lack of genuine interest in the position.

- Rushing Through Answers: Providing hasty or incomplete answers can hinder the clarity of your responses, making it difficult for interviewers to assess your qualifications effectively.

Key Takeaways for Meta Data Engineer Interview Success

- Prepare a strong resume using an AI resume builder to highlight your technical skills and experience relevant to data engineering roles, ensuring clarity and impact.

- Utilize well-structured resume templates to present your qualifications effectively, focusing on key achievements and relevant projects that align with the job requirements.

- Showcase your experience with tailored resume examples that demonstrate your problem-solving abilities and familiarity with data processing tools and techniques.

- Craft personalized cover letters that connect your background to Meta’s mission, demonstrating your enthusiasm and understanding of the company’s data initiatives.

- Engage in mock interview practice to refine your responses to technical and behavioral questions, boosting your confidence and performance during the actual interview.

Frequently Asked Questions

1. How long does a typical Meta Data Engineer interview last?

A typical interview for a Meta Data Engineer position usually lasts between 45 minutes and 1 hour. This time frame often includes technical assessments, behavioral questions, and discussions about your previous work experience. Expect to spend part of the interview solving problems or answering technical questions relevant to data engineering. It’s essential to be prepared for a range of topics, as interviewers may dive deep into specific areas of your expertise.

2. What should I wear to a Meta Data Engineer interview?

For a Meta Data Engineer interview, aim for business casual attire. This typically means wearing dress pants or a skirt with a collared shirt or blouse. It’s important to look professional while also feeling comfortable. Companies like Meta appreciate a relaxed yet polished appearance. Avoid overly casual clothing, such as jeans or sneakers, unless you know the company’s culture encourages a more laid-back dress code.

3. How many rounds of interviews are typical for a Meta Data Engineer position?

Typically, a Meta Data Engineer interview process involves three to five rounds. The first round may be a phone screening with HR, followed by technical interviews focusing on data engineering skills. Subsequent rounds often consist of behavioral interviews and possibly a final round with senior management. Each round is crucial to assess both your technical capabilities and cultural fit within the team and the organization.

4. Should I send a thank-you note after my Meta Data Engineer interview?

Yes, it is advisable to send a thank-you note after your interview. This gesture demonstrates your appreciation for the interviewer’s time and reinforces your interest in the position. In your note, briefly mention specific topics discussed during the interview that you found engaging. A well-crafted thank-you email can help you stand out among other candidates and leave a positive impression on the hiring team.