Preparing for a Performance Testing interview is a crucial step for anyone aspiring to excel in this specialized field. Performance testers play a vital role in ensuring software applications run efficiently under load, directly impacting user satisfaction and business success. Unlike functional testing roles, which verify what the software does, performance testing focuses on how fast, scalable, and reliable it is. Proper preparation equips candidates with the knowledge and confidence to tackle technical questions and demonstrate their problem-solving abilities. This guide covers key concepts, essential tools, best practices, common interview questions, and tips to help you stand out in your performance testing interview.

What to Expect in a Performance Testing Interview

In a Performance Testing interview, candidates can expect a mix of technical questions, practical assessments, and behavioral inquiries. Interviews may be conducted by a combination of hiring managers, senior testers, and developers. The process typically begins with a phone screening to evaluate basic knowledge, followed by technical interviews that assess skills in tools like JMeter or LoadRunner. Candidates may also face scenario-based questions to demonstrate problem-solving abilities. Finally, there may be an assessment of soft skills to gauge teamwork and communication, which are critical in performance testing roles.

Performance Testing Interview Questions For Freshers

Performance Testing is essential for ensuring that applications meet expected speed, scalability, and stability standards. Freshers should master fundamental concepts such as load testing, stress testing, and performance monitoring to effectively evaluate system performance and identify potential bottlenecks.

1. What is Performance Testing?

Performance Testing is a type of testing to determine how a system performs in terms of responsiveness and stability under a particular workload. It involves testing the speed, scalability, and reliability of an application to ensure it meets the required performance standards before deployment.

2. What are the different types of Performance Testing?

- Load Testing: Evaluates the system’s performance under expected user loads.

- Stress Testing: Determines the system’s robustness by testing it under extreme conditions.

- Spike Testing: Measures how the system handles sudden increases in load.

- Endurance Testing: Assesses the system’s performance over an extended period.

- Scalability Testing: Tests the application’s ability to scale up or down as needed.

Each type serves a specific purpose and helps identify different potential issues in the system.

3. What tools are commonly used for Performance Testing?

Common tools for Performance Testing include:

- Apache JMeter: An open-source tool for load testing and performance measurement.

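- LoadRunner: A commercial performance testing suite, long sold by Micro Focus and now part of OpenText.

- Gatling: A load testing tool whose scenarios are written as code, originally in a Scala-based DSL (recent versions also offer Java and Kotlin DSLs).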

- BlazeMeter: A cloud-based solution for performance testing that integrates with JMeter.

- NeoLoad: A performance testing tool that allows for continuous testing in DevOps.

These tools help simulate user behavior and analyze system performance effectively.

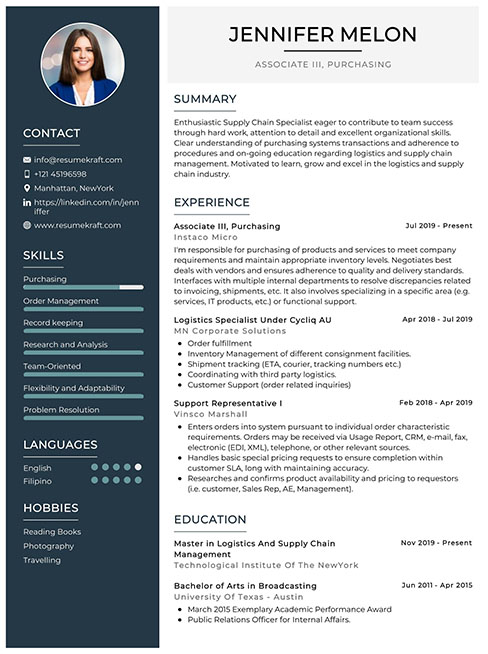

Build your resume in just 5 minutes with AI.

4. What is Load Testing and why is it important?

Load Testing is the process of putting demand on a system and measuring its response. It is important because it helps identify how much load the system can handle before performance degrades. This ensures that the application can sustain user traffic without any issues, ultimately enhancing user satisfaction.

5. Explain the concept of ‘Throughput’ in Performance Testing.

Throughput refers to the number of requests processed by an application in a given amount of time, typically measured in requests per second (RPS). It is a crucial metric in Performance Testing as it indicates the system’s capacity to handle user load and helps identify bottlenecks.
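
As a small illustration of the definition above, throughput is simply completed requests divided by the length of the measurement window. The function name and the sample numbers below are hypothetical, chosen only for this sketch.

```python
def throughput_rps(completed_requests: int, duration_seconds: float) -> float:
    """Return requests per second over a measurement window."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return completed_requests / duration_seconds


# Hypothetical run: 12,000 requests completed in a 60-second window.
rps = throughput_rps(12_000, 60.0)
print(f"{rps:.1f} requests/second")  # 200.0 requests/second
```

In practice the window should exclude ramp-up and ramp-down periods, since including them understates steady-state throughput.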

6. What is the difference between Load Testing and Stress Testing?

Load Testing evaluates system performance under normal and peak conditions, focusing on how the system handles expected user loads. In contrast, Stress Testing pushes the system beyond its operational capacity to identify breaking points and assess how it recovers from failures.

7. What is a Performance Bottleneck?

A Performance Bottleneck refers to a point in the system where the performance is significantly limited due to insufficient resources or inefficient processes. It can occur in various components such as CPU, memory, database, and network, leading to degraded system performance.

8. What is the role of Response Time in Performance Testing?

Response Time is the total time taken from when a user makes a request to when they receive a response. It is a critical performance metric that impacts user experience. A lower response time indicates better performance, while higher response times can lead to user frustration and abandonment.

9. What is Scalability Testing?

Scalability Testing assesses an application’s ability to scale up or down in response to varying loads. It determines how well the system can accommodate growth, ensuring that it can handle increased traffic or workload without significant performance degradation.

10. How do you identify Performance Issues in an application?

- Monitoring Metrics: Use performance monitoring tools to track key metrics like response time, throughput, and resource usage.

- Load Testing: Conduct load tests to simulate user traffic and observe system behavior under load.

- Log Analysis: Analyze application logs for errors or warnings that might indicate performance problems.

- Profiling: Use profiling tools to identify resource-intensive operations within the code.

Combining these approaches helps identify and diagnose performance issues effectively.

11. Explain the concept of ‘Latency’ in Performance Testing.

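Latency usually refers to the delay between sending a request and receiving the first part of the response, that is, the time spent in transit and waiting before any data comes back. It differs from response time, which also includes the time needed to process and deliver the complete response. Latency is a crucial performance metric, as high latency can lead to a poor user experience. Reducing latency is often a key focus in Performance Testing to ensure quick and efficient application responses.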

12. What is Endurance Testing?

Endurance Testing, also known as soak testing, evaluates the system’s performance over an extended period under a significant load. The objective is to identify memory leaks, resource depletion, and other issues that may arise during prolonged use, ensuring the system remains stable over time.
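
One way to spot a possible memory leak in soak-test data is to fit a straight line to memory samples and look at the slope. The sketch below uses a plain least-squares fit; the sample values and the 5 MB/hour threshold are assumptions made up for illustration, not recommended limits.

```python
def growth_per_hour(hours, memory_mb):
    """Least-squares slope of memory usage (MB) against elapsed time (hours)."""
    n = len(hours)
    mean_x = sum(hours) / n
    mean_y = sum(memory_mb) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, memory_mb))
    denominator = sum((x - mean_x) ** 2 for x in hours)
    return numerator / denominator


# Hypothetical hourly samples of process memory during an 8-hour soak test.
hours = [0, 1, 2, 3, 4, 5, 6, 7]
memory_mb = [512, 530, 549, 571, 588, 610, 629, 650]

growth = growth_per_hour(hours, memory_mb)
LEAK_THRESHOLD_MB_PER_HOUR = 5.0  # assumed threshold for this example
print(f"growth: {growth:.1f} MB/hour")  # growth: 19.8 MB/hour
print("possible leak" if growth > LEAK_THRESHOLD_MB_PER_HOUR else "stable")
```

A steady upward slope is only a hint. Caches that are still warming up can look the same, so the trend should be confirmed with a heap profiler.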

13. What metrics should be monitored during Performance Testing?

- Response Time: Time taken to complete a request.

- Throughput: Number of requests processed in a given time.

- Error Rate: Percentage of failed requests.

- Resource Usage: CPU, memory, disk I/O, and network usage.

- Concurrent Users: Number of users accessing the application simultaneously.

Monitoring these metrics helps identify performance issues and assess system health.
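
The metrics listed above can be derived from raw request samples. The following is a minimal sketch, assuming each sample is a hypothetical `(response_time_ms, succeeded)` tuple; it adds a 95th-percentile figure (nearest-rank method) because an average alone hides slow outliers.

```python
import math
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(samples, duration_seconds):
    """samples: list of (response_time_ms, succeeded) tuples from one test run."""
    times = [t for t, _ in samples]
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "avg_ms": mean(times),
        "p95_ms": percentile(times, 95),
        "error_rate_pct": 100 * failures / len(samples),
        "throughput_rps": len(samples) / duration_seconds,
    }


# Hypothetical samples: ten requests observed over a 2-second window.
samples = [(120, True), (135, True), (110, True), (480, False), (125, True),
           (140, True), (130, True), (115, True), (150, True), (145, True)]
report = summarize(samples, duration_seconds=2.0)
# avg_ms is 165, p95_ms is 480, error_rate_pct is 10.0, throughput_rps is 5.0
for name, value in report.items():
    print(name, value)
```

Here the single 480 ms failure barely moves the average but dominates the 95th percentile, which is why percentiles are usually reported alongside averages.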

14. What is Spike Testing?

Spike Testing is a type of performance testing that evaluates how a system behaves when subjected to sudden and extreme increases in load. The goal is to determine whether the application can handle unexpected traffic spikes without crashing or experiencing significant performance degradation.
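
A spike test is often described by a load profile: a function that says how many users should be active at each moment. The sketch below is a hypothetical profile with made-up default values; real tools express the same idea through their own configuration.

```python
def spike_profile(second, baseline_users=50, spike_users=500,
                  spike_start=60, spike_length=30):
    """Target number of concurrent users at a given second of the test."""
    if spike_start <= second < spike_start + spike_length:
        return spike_users      # sudden jump to the spike level
    return baseline_users       # normal load before and after the spike


# Print the target load around the edges of the spike.
for second in (0, 59, 60, 89, 90, 120):
    print(second, spike_profile(second))
```

The period after the spike matters as much as the spike itself, since it shows whether the system recovers to its baseline response times.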

15. What are the best practices for Performance Testing?

- Define Clear Objectives: Establish specific goals for what you want to achieve with performance testing.

- Use Realistic Load Scenarios: Simulate actual user behavior and workloads to get accurate results.

- Automate Testing: Use automation tools to streamline the testing process and improve efficiency.

- Monitor Performance Continuously: Regularly track application performance in production to catch issues early.

- Analyze Results Thoroughly: Evaluate performance data to identify trends and areas for improvement.

Implementing these best practices can lead to more effective and reliable performance testing outcomes.

Performance Testing Intermediate Interview Questions

Performance Testing interview questions for intermediate candidates focus on essential concepts such as load testing, stress testing, and identifying bottlenecks in applications. Candidates should be familiar with tools, metrics, and best practices in performance testing to effectively assess application performance under various conditions.

16. What is the difference between load testing and stress testing?

Load testing involves evaluating an application’s performance under expected load conditions to ensure it can handle anticipated traffic. Stress testing, on the other hand, pushes the application beyond its limits to determine how it behaves under extreme conditions and to identify breaking points. Both types of testing are crucial for ensuring application reliability and performance in real-world scenarios.
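
To make the idea of a breaking point concrete, a stress test can step the load upward and record the error rate at each step. The sketch below assumes hypothetical step results and an assumed 1% error budget, and reports the first load level that exceeds it.

```python
def breaking_point(results, max_error_rate_pct=1.0):
    """results: list of (users, error_rate_pct) ordered by increasing load.

    Returns the first user count whose error rate exceeds the budget,
    or None if the system stayed within budget at every step.
    """
    for users, error_rate_pct in results:
        if error_rate_pct > max_error_rate_pct:
            return users
    return None


# Hypothetical results from doubling the load at each step.
steps = [(100, 0.0), (200, 0.1), (400, 0.4), (800, 3.2), (1600, 27.5)]
print(breaking_point(steps))  # 800
```

A load test would stop at the expected peak (say, 400 users in this made-up data), while the stress test keeps going to find where and how the system fails.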

17. What are some common performance metrics you should monitor?

- Response Time: The time taken by the system to respond to a request, crucial for user experience.

- Throughput: The number of transactions processed in a given time frame, indicating system efficiency.

- Resource Utilization: Monitoring CPU, memory, and disk usage helps identify bottlenecks.

- Error Rate: The percentage of failed requests, which can indicate performance issues.

Tracking these metrics allows testers to pinpoint performance issues and optimize system performance effectively.

18. How do you identify performance bottlenecks in an application?

Identifying performance bottlenecks involves several steps:

- Monitoring metrics: Use tools to monitor response times, CPU, memory, and network usage.

- Analyzing logs: Check application logs for errors or slow transactions.

- Profiling: Use profilers to analyze code execution and identify slow functions or methods.

- Load testing: Simulate load conditions to see where the application struggles.

Combining these methods provides a comprehensive view of potential bottlenecks.

19. Can you explain what throughput is in the context of performance testing?

Throughput refers to the number of transactions or requests that a system can handle in a given period, typically expressed as transactions per second (TPS). It measures the capacity of an application to process requests and is a critical metric in performance testing. High throughput indicates a system’s ability to handle a large number of simultaneous users or transactions efficiently.

20. What tools would you recommend for performance testing and why?

- Apache JMeter: An open-source tool suitable for load testing and measuring performance across various protocols.

- LoadRunner: A comprehensive performance testing tool used for load testing and analyzing system behavior.

- Gatling: A powerful tool for continuous load testing, particularly for web applications, with a user-friendly DSL.

- Locust: A Python-based tool that allows for easy scripting of user behavior, suitable for distributed testing.

Each of these tools offers unique features and capabilities to meet different performance testing needs.

21. What is a performance testing strategy, and what key components should it have?

A performance testing strategy outlines the approach to testing an application’s performance. Key components include:

- Objectives: Define what aspects of performance are to be tested.

- Scope: Specify which systems, components, and scenarios will be included.

- Tools: Determine which tools will be used for testing.

- Metrics: Identify which metrics will be monitored.

- Reporting: Plan how results will be documented and communicated.

A well-defined strategy ensures comprehensive testing and clear communication of performance results.

22. How do you simulate virtual users in performance testing?

Simulating virtual users involves using performance testing tools to create multiple user sessions that mimic real user behavior. Tools like JMeter or LoadRunner allow you to configure user scenarios, set parameters for ramp-up time, and define the number of concurrent users to simulate. This helps in assessing how the application performs under load and identifying any potential issues.
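
The idea behind virtual users can be sketched without any testing tool: each user is a thread that repeats an action, and thread starts are spread across the ramp-up period. This is only an illustration of the concept, not how JMeter or LoadRunner are implemented; `run_virtual_users` and `fake_request` are hypothetical names, and the stub stands in for a real HTTP call.

```python
import threading
import time


def run_virtual_users(users, iterations, action, ramp_up_seconds=0.0):
    """Run action(user_id) from `users` concurrent threads and record timings."""
    if users <= 0:
        raise ValueError("users must be positive")
    timings = []                       # (user_id, elapsed_seconds) per call
    lock = threading.Lock()

    def session(user_id):
        for _ in range(iterations):
            started = time.perf_counter()
            action(user_id)
            elapsed = time.perf_counter() - started
            with lock:                 # protect the shared list
                timings.append((user_id, elapsed))

    start_gap = ramp_up_seconds / users   # spread thread starts across the ramp-up
    threads = []
    for user_id in range(users):
        thread = threading.Thread(target=session, args=(user_id,))
        thread.start()
        threads.append(thread)
        time.sleep(start_gap)
    for thread in threads:
        thread.join()
    return timings


def fake_request(user_id):
    """Stub standing in for a real request to the system under test."""
    time.sleep(0.001)


timings = run_virtual_users(users=5, iterations=3, action=fake_request,
                            ramp_up_seconds=0.05)
print(len(timings))  # 15
```

Dedicated tools add what this sketch leaves out, such as think time, parameterized test data, correlation of session values, and distributed load generation.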

23. What is a baseline in performance testing?

A baseline in performance testing refers to a set of performance metrics collected under normal operating conditions, serving as a standard for future comparisons. It helps in evaluating changes made to the application, infrastructure, or configuration by providing a reference point to measure performance improvements or regressions.
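
A baseline becomes useful once new runs are compared against it automatically. The sketch below assumes metrics where lower is better and a hypothetical 10% tolerance; the metric names and values are invented for the example.

```python
def regressions(baseline, current, tolerance_pct=10.0):
    """Return metrics that grew by more than tolerance_pct relative to the baseline.

    Assumes every metric is 'lower is better' (response times, error rates).
    """
    flagged = {}
    for name, base_value in baseline.items():
        change_pct = 100 * (current[name] - base_value) / base_value
        if change_pct > tolerance_pct:
            flagged[name] = round(change_pct, 1)
    return flagged


# Hypothetical baseline and a later run after a code change.
baseline = {"p95_ms": 400, "error_rate_pct": 0.5}
current = {"p95_ms": 460, "error_rate_pct": 0.5}
print(regressions(baseline, current))  # {'p95_ms': 15.0}
```

A check like this can run in a CI pipeline so that a regression fails the build instead of being noticed in production.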

24. Describe the concept of a performance testing environment.

A performance testing environment is a dedicated setup that mirrors the production environment as closely as possible to ensure accurate testing results. It includes hardware, software, network configurations, and data similar to the production setup. This environment helps in identifying performance issues early and ensures that tests reflect real-world conditions.

25. What are some best practices for conducting performance tests?

- Define clear objectives: Establish what you want to achieve with performance testing.

- Use realistic data: Simulate real user scenarios and use data that resembles production data.

- Test early and often: Integrate performance testing into the development lifecycle to catch issues early.

- Analyze and report: Document findings and analyze data to make informed decisions for optimization.

Following these best practices helps in effective performance testing and ensures application reliability.

26. How can you ensure that your performance tests are repeatable?

To ensure repeatability in performance tests, follow these practices:

- Maintain a consistent test environment: Use the same hardware and software configurations for each test.

- Use automated scripts: Automate test scenarios to eliminate human error and ensure consistent execution.

- Control external factors: Minimize variations in network conditions and data to ensure tests run under similar circumstances.

By standardizing the testing approach, results can be reliably compared across different iterations.

27. What role does caching play in performance testing?

Caching plays a significant role in performance testing by temporarily storing frequently accessed data to reduce latency and improve response times. During tests, it can significantly affect the results by decreasing load on the database and speeding up data retrieval. Understanding how caching mechanisms work helps testers evaluate the true performance of an application under various load conditions.
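
The effect of caching on backend load can be shown with Python's standard `functools.lru_cache`. In this sketch `load_product_from_db` is a hypothetical stand-in for a slow database query, and the request sequence is made up; the point is that repeated keys never reach the backend.

```python
from functools import lru_cache

backend_calls = 0


def load_product_from_db(product_id):
    """Stand-in for a slow database query; counts how often it is reached."""
    global backend_calls
    backend_calls += 1
    return {"id": product_id}


@lru_cache(maxsize=128)
def get_product(product_id):
    return load_product_from_db(product_id)


# Hypothetical request sequence in which product 1 is requested most often.
request_ids = [1, 2, 1, 3, 1, 2, 1, 1]
for product_id in request_ids:
    get_product(product_id)

info = get_product.cache_info()
hit_ratio = info.hits / (info.hits + info.misses)
print(backend_calls, info.hits, hit_ratio)  # 3 5 0.625
```

This is also why test data matters: a test that requests the same few records over and over will show an unrealistically high hit ratio and overly optimistic response times.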

28. What is the significance of response time in performance testing?

Response time is a critical performance metric that measures how quickly an application responds to user requests. It significantly impacts user experience; longer response times can lead to user dissatisfaction and potential loss of business. In performance testing, tracking response time helps identify bottlenecks and optimize application performance to meet user expectations.

Performance Testing Interview Questions for Experienced

Performance Testing interview questions for experienced professionals delve into advanced topics such as system architecture, optimization techniques, scalability concerns, design patterns, and leadership in testing practices. These questions assess a candidate’s depth of knowledge and ability to mentor others in the field.

31. What are the main components of a performance testing architecture?

The key components of a performance testing architecture include:

- Load Generators: These simulate user traffic to create realistic load on the application.

- Performance Testing Tool: Tools like JMeter or LoadRunner that capture performance metrics and analyze results.

- Monitoring Tools: Tools to monitor server performance, such as CPU, memory usage, and network traffic.

- Reporting System: A mechanism to aggregate and present results for analysis.

Understanding these components helps ensure that performance tests are conducted effectively and provide valuable insights.

32. How do you identify performance bottlenecks in an application?

Identifying performance bottlenecks involves several steps:

- Baseline Performance Metrics: Establish baseline performance metrics to compare against.

- Monitoring Tools: Use tools like APM (Application Performance Management) to gather data on resource usage.

- Load Testing: Conduct load tests to see how the application performs under stress.

- Database Profiling: Analyze database queries for efficiency and optimization opportunities.

By following these steps, you can effectively pinpoint areas that require optimization.

33. What is the difference between Load Testing and Stress Testing?

Load Testing assesses the system’s behavior under anticipated load conditions, measuring performance metrics like response time and throughput. Stress Testing, on the other hand, evaluates how the system behaves under extreme load conditions, often beyond its specified limits, to identify breaking points. Both types of testing are essential for ensuring application reliability and performance.

34. Can you explain the concept of scalability in performance testing?

Scalability in performance testing refers to the ability of an application to handle increasing amounts of load without performance degradation. This can be vertical scalability (adding resources to a single node) or horizontal scalability (adding more nodes). Testing scalability involves evaluating how well the application can maintain performance while increasing load, which is crucial for ensuring it can grow with user demand.
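
One simple way to quantify horizontal scalability is scaling efficiency: measured throughput after adding nodes divided by the throughput that perfectly linear scaling would give. The function name and figures below are hypothetical.

```python
def scaling_efficiency(base_nodes, base_rps, scaled_nodes, scaled_rps):
    """Ratio of measured throughput to ideal linear-scaling throughput."""
    ideal_rps = base_rps * scaled_nodes / base_nodes
    return scaled_rps / ideal_rps


# Hypothetical measurement: 2 nodes handle 1,000 RPS, 4 nodes handle 1,700 RPS.
efficiency = scaling_efficiency(2, 1000, 4, 1700)
print(f"{efficiency:.0%}")  # 85%
```

An efficiency well below 100% usually points to a shared resource, such as a database or a lock, that does not scale with the number of nodes.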

35. Describe how you would set up a performance testing environment.

Setting up a performance testing environment requires:

- Isolated Environment: Use a dedicated environment, separate from other workloads, so that unrelated traffic and background jobs do not skew results.

- Configuration: Ensure the environment matches production as closely as possible, including hardware, software, and network settings.

- Load Generators: Deploy load generators to simulate user activity from various geographic locations.

- Monitoring Tools: Install monitoring tools to gather performance data during tests.

This meticulous setup allows for accurate performance testing outcomes.

36. What design patterns are commonly used in performance testing?

Performance test scripts and frameworks mostly borrow general software and test-automation design patterns. Some that are commonly cited include:

- Page Object Model: Used for organizing test code and enhancing reusability.

- Factory Pattern: Helps in creating objects without specifying the concrete class, useful in dynamic test case generation.

- Chain of Responsibility: Useful for managing requests in a sequence, especially in load testing scenarios.

Implementing these design patterns can improve test maintainability and efficiency.

37. How can you optimize the performance of a database for testing?

Optimizing database performance can involve:

- Indexing: Use proper indexing to speed up query performance.

- Query Optimization: Analyze and refine SQL queries to reduce execution time.

- Connection Pooling: Implement connection pooling to manage database connections efficiently.

- Data Partitioning: Split large datasets into manageable partitions to improve query performance.

These strategies are critical for ensuring that the database can handle the load effectively during testing.
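
The effect of indexing can be checked directly from the query plan. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `orders` table, its columns, and the index name are hypothetical, and other database engines expose query plans through their own commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

query = "SELECT total FROM orders WHERE customer_id = ?"


def plan(sql):
    """Return SQLite's query plan description for the given statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(row[-1] for row in rows)   # last column holds the detail text


before = plan(query)   # full table scan: no index on customer_id yet
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)    # lookup through the new index

print("before:", before)
print("after: ", after)
```

The exact wording of the plan varies between SQLite versions, but the first plan reports a scan of the table and the second a search using `idx_orders_customer`.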

38. What role does caching play in performance testing?

Caching plays a significant role in enhancing application performance. It involves storing frequently accessed data in a temporary storage area, reducing the time required to retrieve that data on subsequent requests. During performance testing, you should evaluate how caching strategies impact response times and server load, as effective caching can significantly improve performance under load.

39. How do you handle reporting and analysis after performance tests?

After performance tests, effective reporting and analysis involve:

- Data Aggregation: Collect data from various sources such as performance testing tools and monitoring systems.

- Visualizations: Use graphs and charts to present data clearly and highlight key findings.

- Root Cause Analysis: Identify the underlying causes of any performance issues discovered during testing.

- Recommendations: Provide actionable recommendations based on the analysis for performance improvements.

These steps ensure that stakeholders understand performance test outcomes and their implications for future development.

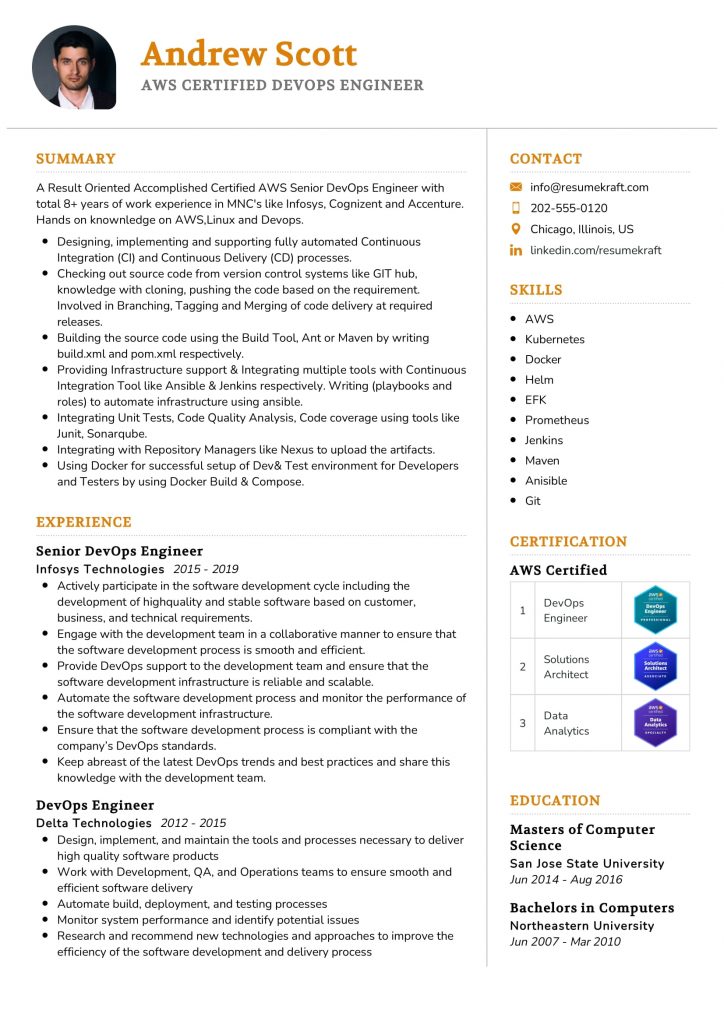

Build your resume in 5 minutes

Our resume builder is easy to use and will help you create a resume that is ATS-friendly and will stand out from the crowd.

40. What leadership qualities do you consider essential for a performance testing team?

Essential leadership qualities for a performance testing team include:

- Technical Expertise: A strong understanding of performance testing tools and methodologies.

- Communication Skills: The ability to convey complex information clearly to various stakeholders.

- Mentoring Ability: Supporting team members in their professional development and fostering a collaborative environment.

- Problem-Solving Skills: The capacity to analyze issues critically and develop effective solutions.

These qualities foster a productive team culture and enhance the overall effectiveness of performance testing initiatives.

41. How do you ensure the accuracy of performance test results?

Ensuring the accuracy of performance test results involves:

- Consistent Environment: Conduct tests in a controlled environment to reduce variability.

- Multiple Test Runs: Perform tests multiple times to account for anomalies, and compare averages and percentiles across runs rather than relying on a single result.

- Realistic Scenarios: Use realistic user scenarios and workloads to simulate actual use.

- Validation: Cross-validate results with monitoring tools to ensure consistency across different metrics.

These practices help establish confidence in the reliability of performance test findings.
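
Whether repeated runs agree with each other can be expressed as a coefficient of variation (standard deviation as a percentage of the mean). The run values and the 5% limit below are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def coefficient_of_variation_pct(values):
    """Sample standard deviation expressed as a percentage of the mean."""
    return 100 * stdev(values) / mean(values)


# Hypothetical 95th-percentile response times (ms) from five identical runs.
runs_p95_ms = [410, 398, 405, 402, 415]

cv = coefficient_of_variation_pct(runs_p95_ms)
MAX_CV_PCT = 5.0  # assumed limit for treating the runs as consistent
print(f"CV = {cv:.2f}%")
print("consistent" if cv <= MAX_CV_PCT else "too much variation between runs")
```

If the variation is high, the environment or test data is probably changing between runs, and results should not be compared until that is fixed.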

How to Prepare for Your Performance Testing Interview

Preparing for a Performance Testing interview requires an understanding of testing methodologies, tools, and best practices. Familiarity with load testing, stress testing, and performance metrics will enhance your confidence and competence, helping you to stand out as a candidate.

- Familiarize yourself with key performance testing concepts such as load, stress, and endurance testing. Understand the differences between them and when to apply each type. This foundational knowledge will enable you to articulate your expertise during the interview.

- Gain hands-on experience with popular performance testing tools like JMeter, LoadRunner, or Gatling. Set up test scenarios and analyze results to develop insights into how different factors impact application performance. Practical experience will help you answer technical questions confidently.

- Study performance testing metrics such as response time, throughput, and resource utilization. Be prepared to discuss how you would measure these metrics and their significance in determining application performance. Understanding these concepts is crucial for any performance tester.

- Review case studies or past projects where you conducted performance testing. Be ready to discuss the challenges you faced, the strategies you employed, and the outcomes of your testing. Real-world examples will demonstrate your problem-solving skills and expertise.

- Stay updated on the latest trends in performance testing, including cloud-based testing and CI/CD integration. Research how these trends are shaping the industry and be prepared to discuss how they can improve testing efficiency and effectiveness in your role.

- Practice explaining complex technical concepts in simple terms. Interviewers may ask how you would communicate findings to non-technical stakeholders. Being able to convey information clearly will showcase your communication skills and ability to work with cross-functional teams.

- Prepare for behavioral questions by reflecting on your past experiences related to teamwork, conflict resolution, and project management. Use the STAR method (Situation, Task, Action, Result) to structure your responses, ensuring you provide specific examples that highlight your skills and contributions.

Common Performance Testing Interview Mistakes to Avoid

When interviewing for a Performance Testing position, avoiding common mistakes can significantly impact your chances of success. Understanding these pitfalls will help you present your skills effectively and demonstrate your expertise in performance testing methodologies and tools.

- Neglecting Technical Knowledge: Failing to demonstrate a solid understanding of performance testing tools and techniques can raise red flags. Familiarize yourself with popular tools like JMeter, LoadRunner, and Gatling to showcase your expertise.

- Ignoring Real-World Scenarios: Avoid providing generic answers. Use specific examples from past experiences to illustrate how you approached performance issues, which highlights your practical knowledge and problem-solving skills.

- Not Understanding Metrics: Performance testing is heavily reliant on metrics. Failing to discuss key performance indicators (KPIs) such as response times, throughput, and resource utilization can indicate a lack of depth in your knowledge.

- Overlooking Test Environment Setup: Interviewers expect candidates to be knowledgeable about setting up test environments. Discussing your experience with configuring servers and test data management can set you apart from other candidates.

- Failing to Communicate Clearly: Performance testing concepts can be complex. Avoid using jargon or technical terms without explanation. Clear communication demonstrates your ability to convey technical information to non-technical stakeholders.

- Disregarding Collaboration: Performance testing often involves teamwork. Not mentioning your experience working with developers and other teams can imply a lack of collaboration skills, which are crucial for successful testing initiatives.

- Not Preparing for Behavioral Questions: Performance testing interviews may include behavioral questions to assess teamwork and conflict resolution. Prepare examples that show your ability to handle challenges and adapt to changing environments.

- Neglecting Continuous Learning: The field of performance testing is evolving. Failing to discuss your commitment to staying updated with industry trends and new tools can suggest a lack of initiative in professional development.

Key Takeaways for Performance Testing Interview Success

- Understand key performance testing concepts such as load, stress, and endurance testing. Familiarize yourself with tools like JMeter and LoadRunner to showcase your practical knowledge.

- Review your previous performance testing experiences and be prepared to discuss specific challenges, solutions, and outcomes, as real-world examples can significantly strengthen your responses.

- Use an interview preparation checklist to ensure you cover essential topics like testing methodologies, metrics, and reporting, making your preparation more structured and effective.

- Engage in mock interview practice to refine your answers and improve your confidence. This can help you articulate your thoughts clearly and respond to questions more effectively.

- Stay updated on the latest performance testing trends and best practices, as demonstrating your commitment to learning can impress interviewers and set you apart from other candidates.

Frequently Asked Questions

1. How long does a typical Performance Testing interview last?

A typical Performance Testing interview can last anywhere from 30 minutes to 1 hour. The duration largely depends on the company’s interview process and the number of technical questions asked. Expect to discuss your experience, methodologies, and tools used in performance testing, alongside practical scenarios. Some interviews may also include a hands-on assessment or a case study, which can extend the time needed. Being prepared for both technical and situational questions can help you manage your time effectively.

2. What should I wear to a Performance Testing interview?

For a Performance Testing interview, it’s best to dress in business casual attire unless otherwise specified. This typically means wearing slacks and a collared shirt for men, and a blouse or smart dress for women. If you’re interviewing at a tech company known for a casual culture, you can opt for smart jeans and a neat top. The goal is to look professional while also being comfortable, as it helps you feel confident during the interview.

3. How many rounds of interviews are typical for a Performance Testing position?

Performance Testing positions typically involve 2 to 4 rounds of interviews. The first round is often a screening call with HR, followed by one or more technical interviews assessing your skills and knowledge in performance testing tools and methodologies. Some companies may include a final round focused on cultural fit or managerial skills. It’s important to prepare for both technical and behavioral questions across all rounds to increase your chances of success.

4. Should I send a thank-you note after my Performance Testing interview?

Yes, sending a thank-you note after your Performance Testing interview is a good practice. It demonstrates your professionalism and appreciation for the interviewer’s time. In your note, briefly thank them for the opportunity, reiterate your interest in the position, and mention any key points discussed during the interview that highlight your fit. Sending this within 24 hours of the interview can leave a positive impression and keep you fresh in their minds during the decision-making process.