Apache Airflow has become the go-to orchestration tool for managing complex workflows and data pipelines. Its flexibility, scalability, and integration capabilities with modern tools make it a must-have skill for data engineers and developers alike. If you’re preparing for an Airflow interview, you’re likely to encounter questions that test your understanding of its core concepts, architecture, and best practices.

In this article, we’ll cover the top 35 Airflow interview questions, each with an answer and a brief explanation to help you gain a comprehensive understanding of how Apache Airflow works.

Top 35 Airflow Interview Questions

1. What is Apache Airflow, and why is it used?

Apache Airflow is an open-source platform for programmatically authoring, scheduling, and monitoring workflows. It allows users to define workflows as Directed Acyclic Graphs (DAGs) and automates the orchestration of complex data pipelines.

Explanation:

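Airflow lets you declare a pipeline’s steps, their dependencies, schedules, and retry behavior in code that can be versioned and reused, so tasks run in the right order and failures are visible and recoverable. It is particularly beneficial for managing ETL (Extract, Transform, Load) processes.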

2. What is a Directed Acyclic Graph (DAG) in Airflow?

In Airflow, a Directed Acyclic Graph (DAG) is a collection of tasks organized so that their dependencies define the execution order. A DAG describes what should run and in what order; each DAG run then executes the tasks, starting a task only after its upstream tasks have succeeded (the default behavior).

Explanation:

The DAG serves as the blueprint for workflow execution in Airflow. Its acyclic property ensures there are no circular dependencies among tasks.
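Airflow refuses to load a DAG whose tasks form a cycle. The sketch below illustrates the idea with Python’s standard-library graphlib rather than Airflow itself; the task names are hypothetical, and each key maps a task to the set of tasks it depends on.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def is_acyclic(graph: dict) -> bool:
    """Return True if the dependency graph has no cycles."""
    try:
        list(TopologicalSorter(graph).static_order())
        return True
    except CycleError:
        return False


# One valid execution order: extract first, load last.
order = list(TopologicalSorter(pipeline).static_order())
print(order)

# Making "extract" depend on "load" closes a loop, so this is no longer a DAG.
cyclic = dict(pipeline, extract={"load"})
print(is_acyclic(pipeline), is_acyclic(cyclic))  # True False
```

A topological order like this is just one valid sequence. Airflow’s scheduler starts each task as soon as its upstream tasks are done, so transform and validate could run in parallel.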

3. How do you define a DAG in Airflow?

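A DAG in Airflow is defined in a Python file placed in the DAGs folder, and it consists of tasks, dependencies, and execution logic. The DAG itself is a Python object created from the airflow.models.DAG class (usually imported as from airflow import DAG and used as a context manager), or with the @dag decorator from the TaskFlow API.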

Explanation:

The Python-based DAG definition allows for dynamic workflow creation, giving users flexibility to programmatically define their workflows.

4. Can you explain the concept of operators in Airflow?

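Operators in Airflow are templates for individual tasks within a DAG: each task is an instance of an operator. Common examples are the PythonOperator and BashOperator, while sensors such as the FileSensor are a special kind of operator built on BaseSensorOperator. Each operator is responsible for one kind of task action.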

Explanation:

Operators act as building blocks in a DAG, allowing different task types to be performed, such as running scripts, querying databases, or waiting for external events.

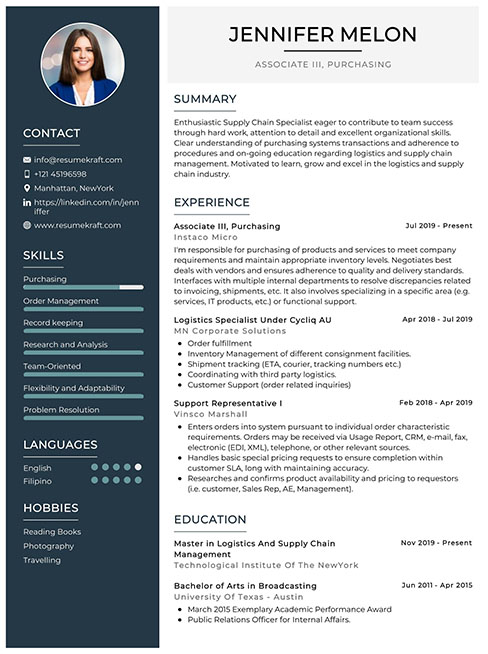

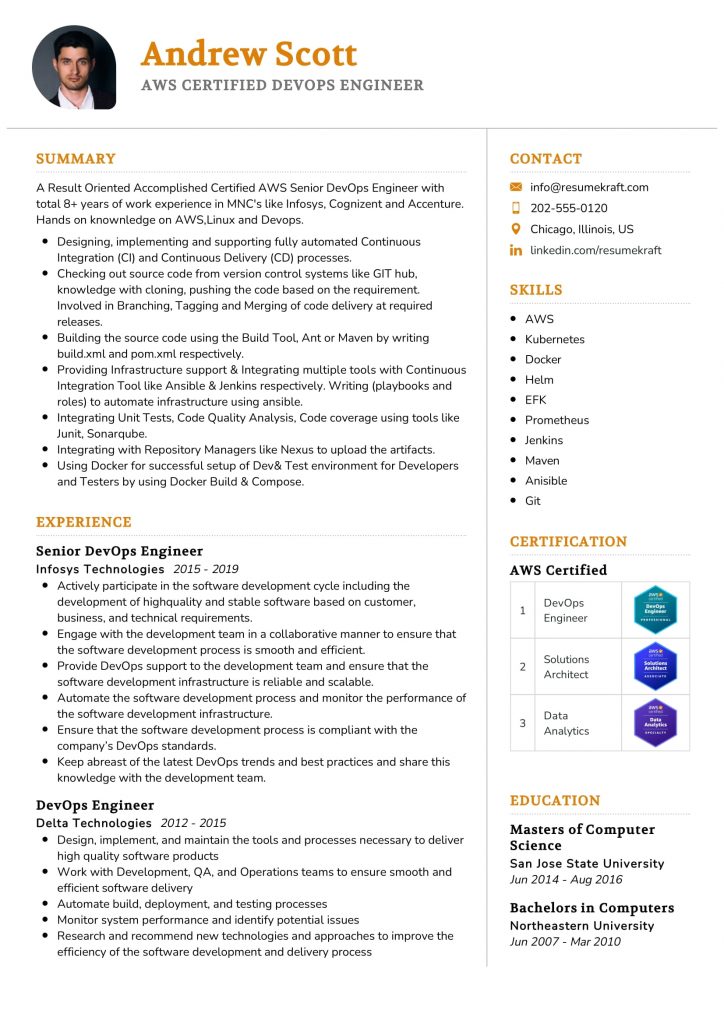

Build your resume in just 5 minutes with AI.

5. What are Sensors in Airflow?

Sensors are a special type of operator in Airflow that wait for a specific condition to be met before triggering downstream tasks. Examples include waiting for a file to land in a particular location or a partition to be available in a database.

Explanation:

Sensors ensure that a task doesn’t start until all necessary conditions are met, making workflows more reliable.

6. What is XCom in Airflow?

XCom, short for cross-communication, is a mechanism in Airflow that allows tasks to exchange small amounts of data. XComs are stored in the metadata database by default, so they suit messages and metadata such as IDs, file paths, or row counts rather than large datasets.

Explanation:

XComs are useful when you need tasks to share the results or statuses with each other, facilitating communication within the workflow.

7. How do you schedule a DAG in Airflow?

DAGs in Airflow are scheduled with the schedule parameter (called schedule_interval before Airflow 2.4), which defines when the DAG should be triggered. It accepts cron expressions, presets such as @daily and @hourly, and datetime.timedelta objects.

Explanation:

Scheduling allows you to define how often your workflow runs, whether it’s every hour, daily, or based on more complex timing needs.

8. What is the role of the Airflow scheduler?

The Airflow scheduler monitors all DAGs, creates DAG runs when their schedules are due, and queues task instances once their upstream dependencies are met, handing them to the executor to run. This is how it ensures that tasks are executed in the right order.

Explanation:

The scheduler is the backbone of Airflow’s execution mechanism, ensuring that all tasks run at the correct time and sequence.

9. How does Airflow handle retries for failed tasks?

Airflow allows you to configure retry logic for each task. You can specify the number of retries and the delay between retries using the retries and retry_delay parameters.

Explanation:

Retry logic is crucial for handling intermittent failures, allowing tasks to be retried without manually re-running the entire workflow.

10. Can you explain the role of an Airflow worker?

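Airflow workers are the processes that execute the task instances queued by the scheduler. With a distributed executor such as the CeleryExecutor, workers run on one or more separate machines, and each worker’s resources and concurrency settings determine how many tasks it can run at once.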

Explanation:

Workers ensure that tasks are executed in parallel and are distributed across different resources to balance the load.

11. What is the purpose of the Airflow web UI?

The Airflow web UI provides a graphical interface for managing, monitoring, and troubleshooting DAGs and tasks. It allows users to view task statuses, logs, and overall workflow performance.

Explanation:

The web UI is an essential tool for developers and operators to easily monitor and manage workflows, reducing the need for command-line interactions.

12. How can you trigger a DAG manually in Airflow?

You can manually trigger a DAG in Airflow using the web UI or the command-line interface (CLI) with the command airflow dags trigger <dag_id>.

Explanation:

Manual triggering is useful when you need to rerun a workflow outside its scheduled interval, such as for debugging or testing.

13. What is backfilling in Airflow?

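Backfilling refers to running a DAG for past time intervals, for example intervals that were missed while the DAG was paused or that need to be reprocessed. In Airflow 2.x you start a backfill explicitly with airflow dags backfill --start-date <start> --end-date <end> <dag_id>. Separately, when catchup is enabled, the scheduler automatically creates runs for any intervals missed since the DAG’s start_date.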

Explanation:

Backfilling makes sure every interval gets processed, for example after the system or DAG was paused, or when historical data has to be reprocessed.

14. What are pools in Airflow?

Pools in Airflow are used to limit the number of tasks that can run concurrently within specific categories of tasks. This helps in resource allocation and ensures that the system is not overwhelmed.

Explanation:

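By using pools, you can cap how many tasks hit a shared resource, such as a database, at the same time. When more tasks are queued than the pool has free slots, Airflow gives the slots to the tasks with the higher priority_weight first.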

15. What is the difference between ExternalTaskSensor and a normal Sensor?

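An ExternalTaskSensor waits for a task (or a whole DAG run) in a different DAG to reach a given state, success by default, for the matching logical date. Other sensors wait for conditions outside Airflow, such as a file appearing or a database partition becoming available.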

Explanation:

ExternalTaskSensor is useful for workflows that span multiple DAGs, ensuring proper task dependency across them.

16. What are Task Instances in Airflow?

Task instances represent the individual runs of a task in a specific DAG run. A task instance is a unique execution of a task for a particular logical date (called execution_date in older versions).

Explanation:

Task instances are key for tracking the execution and status of tasks, helping with debugging and understanding workflow behavior.

17. What is the role of Airflow metadata database?

The Airflow metadata database stores the state of DAGs, DAG runs, task instances, XComs, variables, connections, and other operational metadata. Task logs themselves are written to log files (locally or in remote storage), not to this database. It is a crucial part of Airflow’s architecture.

Explanation:

The metadata database ensures that the state of the workflows is persistent and can be queried for run history.

18. How do you handle task dependencies in Airflow?

Task dependencies in Airflow are managed using set_upstream() and set_downstream() methods or by using the >> and << operators to define the task order.

Explanation:

Defining dependencies ensures that tasks are executed in the correct order and based on the completion of other tasks.

19. Can you explain how logging works in Airflow?

Airflow provides logging for each task instance, and the logs can be viewed through the web UI. Logs are stored locally by default, but can also be stored in external systems like S3 or Google Cloud Storage.

Explanation:

Logging is critical for debugging and monitoring workflows, providing insights into task execution details and errors.

20. What is the purpose of Airflow hooks?

Airflow hooks are interfaces to external systems, such as databases, APIs, or cloud services. They are used to execute queries, pull data, and interact with external systems from within a task.

Explanation:

Hooks allow seamless integration between Airflow and external services, making data extraction and interaction easier.

21. What is the function of a task group in Airflow?

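A task group lets you group multiple tasks under a common name, so the Graph view can show them as a single collapsible node. Grouping tasks does not create dependencies between them, although you can set a dependency on a whole group at once. By default, task IDs inside a group are prefixed with the group ID.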

Explanation:

Task groups are especially useful in complex workflows, making it easier to organize tasks and improve readability.

22. How can you parallelize tasks in Airflow?

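Tasks can be parallelized in Airflow by defining them without dependencies between them. The scheduler can then run independent tasks concurrently, subject to the executor (the SequentialExecutor runs one task at a time) and to concurrency limits such as parallelism, max_active_tasks, and pools. Since Airflow 2.3, dynamic task mapping can also fan a single task out into many parallel task instances at run time.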

Explanation:

Parallelizing tasks helps optimize the execution time of workflows, especially when tasks are independent of each other.

23. How do you retry failed tasks in Airflow?

Failed tasks can be retried automatically by configuring the retries parameter in the task definition. You can also set retry_delay to specify the time between retries.

Explanation:

Retrying tasks reduces the risk of permanent failures due to transient issues, improving workflow resilience.

24. Can you explain task instances in Airflow?

Task instances are unique runs of tasks within a DAG run, representing each task’s execution in the workflow. Task instances move through states such as scheduled, queued, running, success, failed, up_for_retry, skipped, and upstream_failed.

Explanation:

Tracking task instances is essential for understanding the workflow’s current state and performance.

25. What is the use of SLA (Service Level Agreement) in Airflow?

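An SLA in Airflow 2.x is a time-based expectation for task completion, set with a task’s sla parameter and measured relative to the start of the DAG run. If a task has not finished within its SLA, Airflow records an SLA miss and can send an email or call the DAG’s sla_miss_callback, making it a useful tool for monitoring workflow performance. SLA support was removed in Airflow 3.0.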

Explanation:

SLA helps maintain the efficiency of workflows, ensuring tasks are completed within expected timeframes.

26. How does Airflow handle concurrency?

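Airflow controls concurrency at several levels. The parallelism setting in airflow.cfg caps how many task instances can run at once per scheduler; max_active_runs limits how many runs of a DAG can be active at the same time; max_active_tasks (called concurrency before Airflow 2.2) limits running task instances across a DAG’s runs; and max_active_tis_per_dag limits concurrent instances of a single task. Pools add limits per shared resource.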

Explanation:

Concurrency control ensures that workflows do not overload system resources, maintaining stability.

27. What are variables in Airflow?

Variables in Airflow are key-value pairs that can be used to store configuration or settings that can be accessed globally across DAGs and tasks. They can be managed via the web UI, CLI, or code.

Explanation:

Using variables allows for dynamic configuration and simplifies workflows by reducing hardcoding.

28. How can you handle task failures in Airflow?

Task failures in Airflow can be handled with retries, with alerts such as email notifications (email_on_failure) or an on_failure_callback, and with trigger rules that let a downstream task run when an upstream task fails, for example trigger_rule="one_failed" on a cleanup task.

Explanation:

Proper handling of task failures ensures that workflows are robust and errors are caught early.

29. Can you explain the purpose of Airflow Executors?

Executors in Airflow are responsible for determining where and how tasks are executed. Common executors include the LocalExecutor, CeleryExecutor, and KubernetesExecutor.

Explanation:

Executors define the scalability of Airflow, allowing it to run tasks in a distributed or local environment.

30. What is the difference between the LocalExecutor and the CeleryExecutor?

The LocalExecutor runs tasks in parallel on a single machine, while the CeleryExecutor distributes tasks across multiple worker nodes through a message broker such as Redis or RabbitMQ, providing greater scalability.

Explanation:

Choosing the right executor depends on the scale of your workflows and the available infrastructure.

31. What is the use of the catchup parameter in Airflow?

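The catchup parameter controls whether the scheduler creates runs for every interval between the DAG’s start_date and now that has not been run yet (True) or only for the most recent interval (False). Its default comes from the catchup_by_default setting in airflow.cfg.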

Explanation:

Catchup ensures that missed runs are executed, allowing workflows to remain consistent, especially in ETL processes.
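To make the catchup behavior concrete, the plain-Python sketch below (not Airflow code) lists the daily intervals a @daily DAG with catchup=True would create runs for. It is simplified: it assumes naive UTC datetimes and a fixed one-day interval, and mirrors the rule that a run is created only after its interval has ended. With catchup=False, only the most recent of these intervals would run.

```python
from datetime import datetime, timedelta


def missed_daily_intervals(start_date: datetime, now: datetime) -> list:
    """Return (interval_start, interval_end) pairs for every completed daily interval."""
    intervals = []
    interval_start = start_date
    # An interval only gets a run once its end has passed.
    while interval_start + timedelta(days=1) <= now:
        intervals.append((interval_start, interval_start + timedelta(days=1)))
        interval_start += timedelta(days=1)
    return intervals


start = datetime(2024, 1, 1)
now = datetime(2024, 1, 4, 12, 0)
for begin, end in missed_daily_intervals(start, now):
    print(f"{begin:%Y-%m-%d} -> {end:%Y-%m-%d}")
# 2024-01-01 -> 2024-01-02
# 2024-01-02 -> 2024-01-03
# 2024-01-03 -> 2024-01-04
```

The interval starting on 2024-01-04 is not included because it has not ended yet at 12:00 that day.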

32. Can you explain the role of a DAG Run in Airflow?

A DAG Run is an individual execution of a DAG. Each DAG Run corresponds to a specific logical date (called execution_date before Airflow 2.2), and it can be created by the scheduler, by a manual trigger, or by a backfill.

Explanation:

DAG Runs are crucial for understanding when and how often a DAG is executed, aiding in tracking and performance analysis.

33. How do you implement dynamic DAGs in Airflow?

Dynamic DAGs are created using logic in Python to generate DAGs dynamically based on certain conditions, such as reading a list of tasks from a file or database.

Explanation:

Dynamic DAGs allow for flexible and scalable workflows that can adapt to changing requirements.

34. What is the role of the airflow.cfg file?

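The airflow.cfg file contains the configuration settings for an Airflow instance, such as the metadata database connection, executor settings, and web server parameters. Any option can also be overridden with an environment variable of the form AIRFLOW__{SECTION}__{KEY}, for example AIRFLOW__CORE__EXECUTOR.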

Explanation:

The configuration file is essential for customizing how Airflow operates, enabling tailored setups for different environments.

35. How do you monitor Airflow workflows?

Airflow workflows can be monitored using the web UI, which provides task status, logs, and graph views. Additionally, email alerts and external logging systems can be set up for more advanced monitoring.

Explanation:

Monitoring ensures that workflows run smoothly and that any issues are quickly identified and addressed.

Conclusion

Apache Airflow has become a vital tool for managing data pipelines and workflows in the modern data landscape. Mastering its core concepts and being prepared for questions on architecture, scheduling, and error handling can set you apart in interviews. With these top 35 Airflow interview questions and explanations, you’re now well-equipped to tackle questions about DAGs, operators, sensors, and other critical components in your next Airflow interview.
