Data modeling is a critical component in managing and analyzing data efficiently. It plays a vital role in the design of databases, helping ensure data integrity and accessibility. Whether you are interviewing for a position as a data modeler, data analyst, or database architect, having a strong grasp of data modeling concepts can give you a competitive edge. This article will take you through the top 39 data modeling interview questions to help you prepare for your next interview. These questions cover various levels of difficulty and focus on both conceptual and practical aspects of data modeling.

Top 39 Data Modeling Interview Questions

1. What is Data Modeling?

Data modeling is the process of creating a visual representation of data, describing how data is stored, organized, and manipulated. It serves as a blueprint for designing databases.

Explanation:

Data modeling helps in structuring the data to meet business requirements, making it easier for developers and business analysts to work together.

2. What are the different types of data models?

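There are three main types of data models: Conceptual, Logical, and Physical. The conceptual model captures the high-level business entities and how they relate. The logical model adds attributes, keys, and detailed relationships without tying them to a specific database system. The physical model specifies implementation details such as tables, data types, indexes, and storage for a particular DBMS.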

Explanation:

Each model serves a specific purpose, from understanding business needs to designing the database’s technical structure.

3. What is an Entity in Data Modeling?

An entity is a real-world object or concept about which the database stores information. For example, “Customer” can be an entity in a sales database.

Explanation:

Entities represent real-world things in a database system and are essential for organizing data effectively.

4. What is an Entity-Relationship Diagram (ERD)?

An ERD is a graphical representation of entities and their relationships in a database. It is widely used during the design phase to map out data relationships visually.

Explanation:

ERDs help stakeholders understand how entities are interconnected in the database, improving the communication between technical and non-technical teams.

5. Can you explain the concept of Normalization?

Normalization is the process of organizing data to minimize redundancy and eliminate undesirable dependencies between columns. This is achieved by splitting large tables into smaller ones and defining relationships between them.

Explanation:

Normalization ensures that databases are efficient and consistent, making data easier to manage and update.
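
As a minimal sketch, assuming a hypothetical orders example, the snippet below uses Python's standard-library `sqlite3` module to split a table that repeats customer details on every order into separate `customers` and `orders` tables. All table and column names are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Unnormalized: the customer's name and city are repeated on every order row.
conn.execute("CREATE TABLE orders_flat (order_id INTEGER, customer_email TEXT, "
             "customer_name TEXT, customer_city TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders_flat VALUES (?, ?, ?, ?, ?)",
    [(1, "ana@example.com", "Ana", "Lisbon", 20.0),
     (2, "ana@example.com", "Ana", "Lisbon", 35.0),
     (3, "bo@example.com", "Bo", "Oslo", 12.5)],
)

# Normalized: each customer fact is stored once; orders reference it by key.
conn.executescript("""
CREATE TABLE customers (
    email TEXT PRIMARY KEY,
    name  TEXT NOT NULL,
    city  TEXT NOT NULL
);
CREATE TABLE orders (
    order_id       INTEGER PRIMARY KEY,
    customer_email TEXT NOT NULL REFERENCES customers(email),
    amount         REAL NOT NULL
);
INSERT INTO customers
    SELECT DISTINCT customer_email, customer_name, customer_city FROM orders_flat;
INSERT INTO orders
    SELECT order_id, customer_email, amount FROM orders_flat;
""")

# Changing Ana's city is now a single-row update instead of one per order.
conn.execute("UPDATE customers SET city = 'Porto' WHERE email = 'ana@example.com'")

rows = conn.execute("""
    SELECT o.order_id, c.name, c.city, o.amount
    FROM orders o JOIN customers c ON c.email = o.customer_email
    ORDER BY o.order_id
""").fetchall()
print(rows)
```

The join reassembles the original view of the data, while the update anomaly (changing a city in one row but not another) is no longer possible.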

6. What are the normal forms in database normalization?

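There are several normal forms; the most commonly discussed are 1NF, 2NF, 3NF, and BCNF. 1NF requires atomic column values with no repeating groups; 2NF also removes partial dependencies on part of a composite key; 3NF also removes transitive dependencies between non-key columns; and BCNF requires every determinant to be a candidate key.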

Explanation:

Each higher normal form removes additional kinds of dependency, which reduces the risk of insertion, update, and deletion anomalies.

7. What is a Primary Key?

A primary key is a column (or set of columns) whose values uniquely identify each record in a table. Primary key values must be unique and cannot be NULL.

Explanation:

Primary keys are essential for uniquely identifying each record and maintaining data integrity.
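
A minimal sketch using Python's built-in `sqlite3` module, with a hypothetical `employees` table, shows the database itself rejecting a duplicate or missing primary key value. `NOT NULL` is declared explicitly because SQLite, unlike most databases, allows NULLs in non-integer primary key columns unless told otherwise.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employees (
        employee_code TEXT NOT NULL PRIMARY KEY,  -- uniquely identifies each row
        name          TEXT NOT NULL
    )
""")
conn.execute("INSERT INTO employees VALUES ('E001', 'Ana')")

try:
    # A second row with the same primary key value is rejected.
    conn.execute("INSERT INTO employees VALUES ('E001', 'Bo')")
    duplicate_rejected = False
except sqlite3.IntegrityError as exc:
    duplicate_rejected = True
    print("Rejected duplicate:", exc)

try:
    # A row without a primary key value is rejected too.
    conn.execute("INSERT INTO employees VALUES (NULL, 'Cy')")
    null_rejected = False
except sqlite3.IntegrityError as exc:
    null_rejected = True
    print("Rejected NULL:", exc)
```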

8. What is a Foreign Key?

A foreign key is a column (or set of columns) in one table that references the primary key of another table. It establishes a relationship between the two tables.

Explanation:

Foreign keys maintain the referential integrity between tables and are vital for relating data stored in different tables.
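
The sketch below, assuming hypothetical `departments` and `employees` tables in an in-memory SQLite database, declares a foreign key, uses it to relate rows across tables, and shows an insert that references a non-existent department being rejected. SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

conn.executescript("""
CREATE TABLE departments (
    dept_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL
);
CREATE TABLE employees (
    emp_id  INTEGER PRIMARY KEY,
    name    TEXT NOT NULL,
    dept_id INTEGER REFERENCES departments(dept_id)  -- foreign key
);
INSERT INTO departments VALUES (10, 'Sales'), (20, 'Finance');
INSERT INTO employees VALUES (1, 'Ana', 10), (2, 'Bo', 20);
""")

# The foreign key column is what relates each employee to a department row.
pairs = conn.execute("""
    SELECT e.name, d.name FROM employees e
    JOIN departments d ON d.dept_id = e.dept_id
    ORDER BY e.emp_id
""").fetchall()
print(pairs)

try:
    conn.execute("INSERT INTO employees VALUES (3, 'Cy', 99)")  # no department 99
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
```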

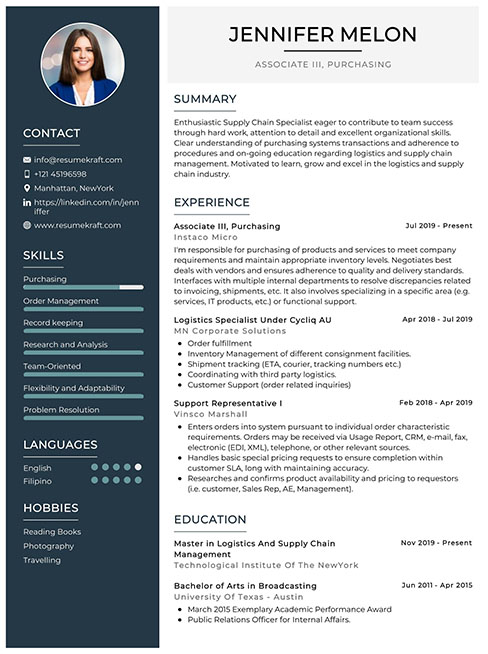

Build your resume in just 5 minutes with AI.

9. What is Denormalization?

Denormalization is the process of intentionally introducing redundancy into a database to improve performance. It is often used in data warehouses where faster query execution is a priority.

Explanation:

Denormalization increases redundancy, and with it the risk of inconsistent copies, but it can speed up data retrieval by reducing the number of joins, especially in read-heavy databases.
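
A minimal sketch, assuming hypothetical `customers` and `orders` tables in SQLite: a denormalized `order_report` table copies customer attributes onto each order row so that a report can be answered from one table without a join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Ana', 'EU'), (2, 'Bo', 'US');
INSERT INTO orders VALUES (100, 1, 20.0), (101, 1, 35.0), (102, 2, 12.5);

-- Denormalized copy: customer attributes are duplicated onto each order row.
CREATE TABLE order_report AS
    SELECT o.order_id, o.amount, c.name AS customer_name, c.region
    FROM orders o JOIN customers c ON c.customer_id = o.customer_id;
""")

# The report query reads a single table; no join is needed at query time.
sales_by_region = conn.execute(
    "SELECT region, SUM(amount) FROM order_report GROUP BY region ORDER BY region"
).fetchall()
print(sales_by_region)
```

The cost is that `order_report` goes stale if a customer's region changes in `customers`, so it has to be refreshed or kept in sync.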

10. What are the differences between OLTP and OLAP?

OLTP (Online Transaction Processing) focuses on managing transaction data, while OLAP (Online Analytical Processing) is designed for query-heavy environments to analyze historical data.

Explanation:

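OLTP is optimized for large numbers of short, concurrent transactions such as inserts, updates, and single-record lookups, whereas OLAP is geared toward complex queries that aggregate large volumes of data for analysis.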

11. What is a Surrogate Key?

A surrogate key is a system-generated, unique identifier for a record in a table. Unlike a natural key, it has no business meaning and is primarily used for joining tables.

Explanation:

Surrogate keys are compact and stable, so they can make joins and indexes more efficient than natural keys that are long, composite, or subject to change.
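
As a sketch with a hypothetical `customers` table in SQLite, an `INTEGER PRIMARY KEY` column acts as a system-generated surrogate key, while the natural key (`email`) is still kept unique. Because other tables would reference `customer_key`, the email can change without breaking those links.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        customer_key INTEGER PRIMARY KEY,   -- surrogate key: generated by SQLite
        email        TEXT NOT NULL UNIQUE,  -- natural key: still enforced as unique
        name         TEXT NOT NULL
    )
""")
cur = conn.execute("INSERT INTO customers (email, name) VALUES (?, ?)",
                   ("ana@example.com", "Ana"))
ana_key = cur.lastrowid  # the generated surrogate value
conn.execute("INSERT INTO customers (email, name) VALUES (?, ?)",
             ("bo@example.com", "Bo"))

# The natural key changes; the surrogate key that other tables would use does not.
conn.execute("UPDATE customers SET email = ? WHERE customer_key = ?",
             ("ana@new.example.com", ana_key))
customers = conn.execute(
    "SELECT customer_key, email FROM customers ORDER BY customer_key").fetchall()
print(customers)
```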

12. What is a Snowflake Schema?

A snowflake schema is a type of data warehouse schema in which dimension tables are normalized into multiple related tables, so the diagram branches outward like a snowflake.

Explanation:

Snowflake schemas are useful when the dimension tables contain hierarchical data.

13. What is a Star Schema?

A star schema is a simple data warehouse schema in which a central fact table is connected directly to denormalized dimension tables, forming a star-like structure.

Explanation:

Star schemas are easy to understand and query, making them popular in data warehousing.
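
A minimal star schema sketch in SQLite, with hypothetical `fact_sales`, `dim_product`, and `dim_date` tables: the fact table holds numeric measures and one key per dimension, and a typical query joins each dimension once before aggregating.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive attributes, denormalized (category sits on product).
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

-- Fact table: numeric measures plus one foreign key per dimension.
CREATE TABLE fact_sales (
    date_key    INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity    INTEGER,
    revenue     REAL
);

INSERT INTO dim_product VALUES (1, 'Pen', 'Office'), (2, 'Desk', 'Furniture');
INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
INSERT INTO fact_sales VALUES
    (20240101, 1, 10, 15.0),
    (20240101, 2, 1, 200.0),
    (20240201, 1, 4, 6.0);
""")

# Typical star query: join the fact table to each dimension, then aggregate.
revenue_by_category = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product p ON p.product_key = f.product_key
    JOIN dim_date d    ON d.date_key = f.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
print(revenue_by_category)
```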

14. What is Dimensional Modeling?

Dimensional modeling is a design technique optimized for querying and reporting. It represents data as facts (measurable events) and dimensions (the descriptive context around them), making it easier for end-users to retrieve information.

Explanation:

Dimensional models simplify data navigation for business intelligence and reporting purposes.

15. What are Fact Tables and Dimension Tables?

Fact tables store quantitative data for analysis, while dimension tables contain descriptive attributes related to the facts.

Explanation:

Fact and dimension tables work together to support meaningful data analysis.

16. What is a Data Mart?

A data mart is a subset of a data warehouse that focuses on a particular department or business function, such as sales or marketing.

Explanation:

Data marts help in delivering focused reports and analysis to specific business areas.

17. What is a Slowly Changing Dimension (SCD)?

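An SCD is a dimension whose attribute values change slowly and unpredictably over time, such as a customer’s address. SCD types handle these changes differently: Type 1 overwrites the old value, Type 2 adds a new row for each version, and Type 3 adds a column that stores the previous value.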

Explanation:

Choosing an appropriate SCD type determines whether historical values are preserved, which matters when reports must reflect data as it was at the time of each event.
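
One possible Type 2 implementation, sketched in SQLite under stated assumptions: the `dim_customer` table, its `valid_from`/`valid_to`/`is_current` columns (a common convention, not a standard), and the `scd2_upsert` helper are all hypothetical. A change closes the current row and inserts a new version, so the old value is kept.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE dim_customer (
        customer_key INTEGER PRIMARY KEY,   -- surrogate key, one per version
        customer_id  TEXT NOT NULL,         -- business (natural) key
        city         TEXT NOT NULL,
        valid_from   TEXT NOT NULL,
        valid_to     TEXT,                  -- NULL while the row is current
        is_current   INTEGER NOT NULL
    )
""")

def scd2_upsert(conn, customer_id, city, change_date):
    """Hypothetical helper: close the current version and insert a new one."""
    row = conn.execute(
        "SELECT customer_key, city FROM dim_customer "
        "WHERE customer_id = ? AND is_current = 1", (customer_id,)
    ).fetchone()
    if row is not None and row[1] == city:
        return  # nothing changed, keep the current version
    if row is not None:
        conn.execute(
            "UPDATE dim_customer SET valid_to = ?, is_current = 0 "
            "WHERE customer_key = ?", (change_date, row[0]))
    conn.execute(
        "INSERT INTO dim_customer (customer_id, city, valid_from, valid_to, is_current) "
        "VALUES (?, ?, ?, NULL, 1)", (customer_id, city, change_date))

scd2_upsert(conn, "C42", "Lisbon", "2023-01-01")
scd2_upsert(conn, "C42", "Porto", "2024-06-15")   # customer moved: new version
scd2_upsert(conn, "C42", "Porto", "2024-07-01")   # unchanged: no new version

history = conn.execute(
    "SELECT customer_id, city, valid_from, valid_to, is_current "
    "FROM dim_customer ORDER BY customer_key").fetchall()
print(history)
```

A Type 1 dimension would instead run a single `UPDATE` on `city`, losing the Lisbon value.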

18. Can you explain the difference between Star Schema and Snowflake Schema?

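A star schema keeps dimension tables denormalized, which typically yields faster queries because fewer joins are needed, whereas a snowflake schema normalizes dimension tables, reducing redundancy at the cost of a more complex schema and additional joins.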

Explanation:

The choice between star and snowflake schemas depends on the trade-off between query performance and data redundancy.

19. What is a Composite Key?

A composite key is a primary key that consists of two or more fields to uniquely identify a record in a table.

Explanation:

Composite keys are used when a single field is not sufficient to uniquely identify records.
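
A sketch with a hypothetical `enrollments` table in SQLite: neither `student_id` nor `course_id` is unique on its own, but together they identify one enrollment, so the pair is declared as a composite primary key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE enrollments (
        student_id INTEGER NOT NULL,
        course_id  TEXT    NOT NULL,
        grade      TEXT,
        PRIMARY KEY (student_id, course_id)  -- composite key
    )
""")
# A student can take many courses, and a course can have many students...
conn.executemany("INSERT INTO enrollments VALUES (?, ?, ?)",
                 [(1, "DB101", "A"), (1, "ST200", "B"), (2, "DB101", None)])

# ...but the same (student, course) pair may appear only once.
try:
    conn.execute("INSERT INTO enrollments VALUES (1, 'DB101', 'C')")
    duplicate_pair_rejected = False
except sqlite3.IntegrityError:
    duplicate_pair_rejected = True
```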

20. What is Data Redundancy?

Data redundancy occurs when the same piece of data is stored in multiple places. While sometimes useful for performance, it can lead to data inconsistency when one copy is updated and the others are not.

Explanation:

Reducing data redundancy helps maintain data consistency and integrity in a database.

21. What is an Index in a database?

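An index is a database object that speeds up the retrieval of rows. It is typically created on columns that are frequently used in filters, joins, or sorting. The trade-off is extra storage and slower inserts, updates, and deletes, because the index must be maintained as well.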

Explanation:

Indexes improve query performance by reducing the amount of data scanned during data retrieval.
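
A small SQLite experiment, assuming a hypothetical `customers` table, compares the query plan for an email lookup before and after creating an index. SQLite's `EXPLAIN QUERY PLAN` reports a full table scan first and a search using the index afterwards; the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany("INSERT INTO customers (email, name) VALUES (?, ?)",
                 [(f"user{i}@example.com", f"User {i}") for i in range(1000)])

query = "SELECT name FROM customers WHERE email = ?"

def plan(sql, params):
    # EXPLAIN QUERY PLAN returns rows whose last column describes each step.
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))

before = plan(query, ("user500@example.com",))   # full table scan
conn.execute("CREATE INDEX idx_customers_email ON customers(email)")
after = plan(query, ("user500@example.com",))    # search via the index

print("before:", before)
print("after: ", after)
```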

22. What are Constraints in a database?

Constraints are rules applied to data columns that enforce data integrity. Common constraints include primary key, foreign key, unique, and not null constraints.

Explanation:

Constraints help ensure the accuracy and reliability of data in the database.
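
As a sketch with a hypothetical `products` table in SQLite, the snippet below combines `NOT NULL`, `UNIQUE`, and `CHECK` constraints and shows each one rejecting an invalid row at insert time.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE products (
        product_id INTEGER PRIMARY KEY,
        sku        TEXT NOT NULL UNIQUE,           -- required and unique
        price      REAL NOT NULL CHECK (price >= 0) -- business rule
    )
""")
conn.execute("INSERT INTO products (sku, price) VALUES ('PEN-1', 1.50)")

bad_rows = {
    "duplicate sku":  ("PEN-1", 2.00),   # violates UNIQUE
    "missing sku":    (None, 2.00),      # violates NOT NULL
    "negative price": ("PEN-2", -5.00),  # violates CHECK
}
rejected = {}
for label, row in bad_rows.items():
    try:
        conn.execute("INSERT INTO products (sku, price) VALUES (?, ?)", row)
        rejected[label] = False
    except sqlite3.IntegrityError as exc:
        rejected[label] = True
        print(f"{label}: {exc}")
```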

23. What is a Hierarchical Database Model?

A hierarchical database model organizes data in a tree-like structure where each parent can have many children, but each child has exactly one parent.

Explanation:

Hierarchical models are fast for certain types of data access but lack flexibility for complex relationships.

24. What is a Relational Database?

A relational database organizes data into tables (also called relations) where each table contains rows and columns. These tables are related to one another through keys.

Explanation:

Relational databases are widely used because they can be queried flexibly with SQL and enforce data integrity through keys and constraints.

25. What is a Data Warehouse?

A data warehouse is a centralized repository that stores integrated data from multiple sources for reporting and analysis purposes.

Explanation:

Data warehouses support business intelligence activities by providing a consolidated view of the organization’s data.

26. What is a Data Lake?

A data lake is a storage repository that holds large amounts of raw data in its native format until it is needed for analysis.

Explanation:

Data lakes provide flexibility in storing both structured and unstructured data, making them ideal for big data environments.

27. What is a Schema in a database?

A schema is the structure that defines how the database is organized, including tables, views, and relationships between different tables.

Explanation:

Schemas provide a logical grouping of database objects, making it easier to manage and maintain data.

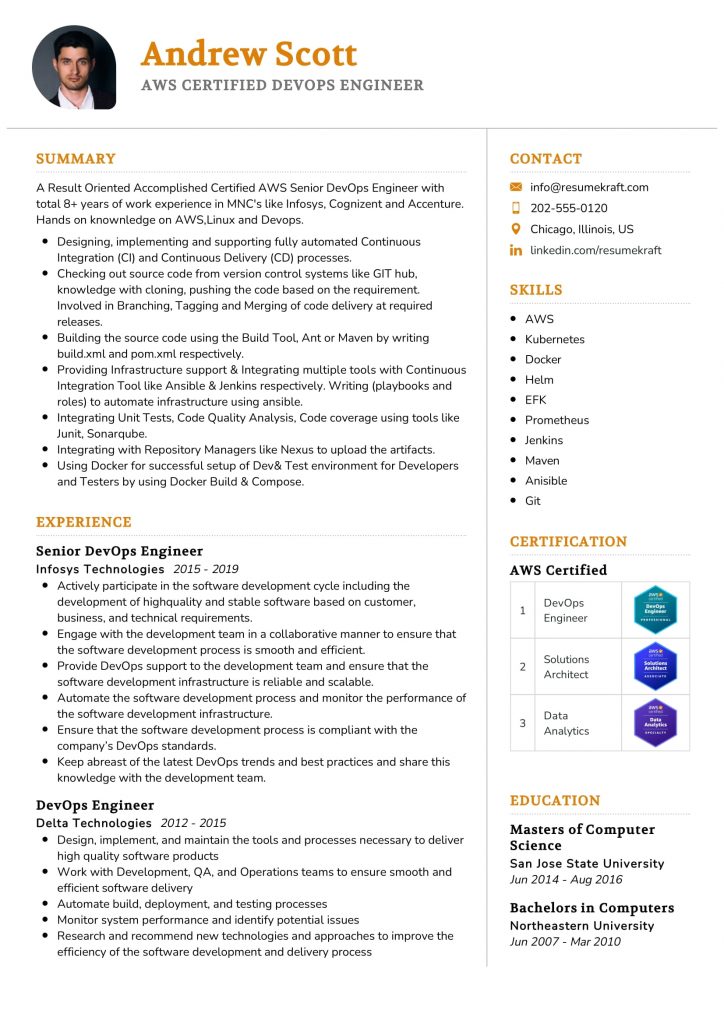

Build your resume in 5 minutes

Our resume builder is easy to use and will help you create a resume that is ATS-friendly and will stand out from the crowd.

28. What is Referential Integrity?

Referential integrity ensures that relationships between tables remain consistent. For example, it prevents adding records to a table with a foreign key if the corresponding record in the referenced table doesn’t exist.

Explanation:

Referential integrity helps prevent orphaned records and ensures consistency between related tables.
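
A minimal SQLite sketch, assuming hypothetical `customers`, `orders`, and `addresses` tables: by default, deleting a customer who still has orders is refused because it would orphan those orders, while a foreign key declared with `ON DELETE CASCADE` removes the child rows along with the parent.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # required for SQLite to enforce these rules
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id)
);
CREATE TABLE addresses (
    address_id  INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL
        REFERENCES customers(customer_id) ON DELETE CASCADE,
    line1       TEXT
);
INSERT INTO customers VALUES (1, 'Ana'), (2, 'Bo');
INSERT INTO orders VALUES (100, 1);
INSERT INTO addresses VALUES (500, 2, '1 Main St');
""")

# Default action: a customer that still has orders cannot be deleted.
try:
    conn.execute("DELETE FROM customers WHERE customer_id = 1")
    delete_blocked = False
except sqlite3.IntegrityError:
    delete_blocked = True

# With ON DELETE CASCADE, deleting the parent also deletes its child rows.
conn.execute("DELETE FROM customers WHERE customer_id = 2")
remaining_addresses = conn.execute("SELECT COUNT(*) FROM addresses").fetchone()[0]
print(delete_blocked, remaining_addresses)
```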

29. What is a Self-Join?

A self-join is a type of join that links a table to itself. It is useful when you want to compare rows within the same table.

Explanation:

Self-joins are often used to query hierarchical data, such as employees and their managers, or to compare rows within a table.
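
A sketch of the employee/manager case in SQLite, using a hypothetical `employees` table whose `manager_id` column points back into the same table. The table is aliased twice so one copy plays the employee and the other the manager; a `LEFT JOIN` keeps the top-level employee who has no manager.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (
    emp_id     INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    manager_id INTEGER REFERENCES employees(emp_id)  -- refers to the same table
);
INSERT INTO employees VALUES
    (1, 'Dana', NULL),
    (2, 'Eli', 1),
    (3, 'Fay', 1),
    (4, 'Gus', 2);
""")

# Self-join: alias e is the employee, alias m is that employee's manager.
rows = conn.execute("""
    SELECT e.name AS employee, m.name AS manager
    FROM employees e
    LEFT JOIN employees m ON m.emp_id = e.manager_id
    ORDER BY e.emp_id
""").fetchall()
print(rows)
```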

30. What are the advantages of using Views in a database?

Views are virtual tables that provide a simplified interface to query complex data. They can be used to restrict access to sensitive data or to simplify queries for end-users.

Explanation:

Views improve data security and simplify complex queries by hiding unnecessary details from end-users.
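
A sketch in SQLite with a hypothetical `employees` table: one view hides the sensitive `salary` column and another packages an aggregate query. SQLite has no user permissions, so this only demonstrates the column hiding; in a server database you would typically grant users access to the view rather than to the base table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (
    emp_id INTEGER PRIMARY KEY,
    name   TEXT NOT NULL,
    dept   TEXT NOT NULL,
    salary REAL NOT NULL           -- sensitive column
);
INSERT INTO employees VALUES (1, 'Ana', 'Sales', 50000), (2, 'Bo', 'Sales', 55000),
                             (3, 'Cy', 'IT', 70000);

-- Directory view without salaries, plus a pre-built aggregate for reporting.
CREATE VIEW employee_directory AS
    SELECT emp_id, name, dept FROM employees;
CREATE VIEW dept_headcount AS
    SELECT dept, COUNT(*) AS headcount FROM employees GROUP BY dept;
""")

directory_cols = [d[0] for d in conn.execute("SELECT * FROM employee_directory").description]
headcount = conn.execute("SELECT * FROM dept_headcount ORDER BY dept").fetchall()
print(directory_cols)
print(headcount)
```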

31. What is a Data Dictionary?

A data dictionary is a centralized repository that stores metadata about the database, such as table names, field types, and relationships between tables.

Explanation:

Data dictionaries help developers and database administrators understand the structure and usage of the database.
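
Many database systems expose their own metadata catalog, which can serve as the raw material for a data dictionary. As a sketch using SQLite's catalog (`sqlite_master`, `PRAGMA table_info`, and `PRAGMA foreign_key_list`) and hypothetical `customers` and `orders` tables, the snippet below collects table names, column types, nullability, primary keys, and foreign key relationships.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT NOT NULL);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL
);
""")

tables = [r[0] for r in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
dictionary = {}
relationships = []
for table in tables:
    # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
    columns = conn.execute(f"PRAGMA table_info({table})").fetchall()
    dictionary[table] = [(c[1], c[2], bool(c[3]), bool(c[5])) for c in columns]
    # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
    for fk in conn.execute(f"PRAGMA foreign_key_list({table})"):
        relationships.append(f"{table}.{fk[3]} -> {fk[2]}.{fk[4]}")

for table, cols in dictionary.items():
    print(table, cols)  # (column, type, not null?, part of primary key?)
print(relationships)
```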

32. What is Cardinality in Data Modeling?

Cardinality defines the relationship between two entities in terms of how many instances of one entity can be associated with another. For example, one-to-one, one-to-many, or many-to-many.

Explanation:

Understanding cardinality is essential for designing efficient databases that accurately represent real-world relationships.

33. What is a Lookup Table?

A lookup table is a table used to store static data that is referenced by other tables. For example, a lookup table might store country names that are referenced by a customer table.

Explanation:

Lookup tables are essential for reducing redundancy and improving data consistency across multiple tables.

34. What is Data Integrity?

Data integrity refers to the accuracy and consistency of data stored in a database. It ensures that data is correct, complete, and reliable.

Explanation:

Data integrity is critical for ensuring that the data used for decision-making is trustworthy and accurate.

35. What is a Data Flow Diagram (DFD)?

A data flow diagram represents the flow of data within a system, showing how data moves between processes, data stores, and external entities.

Explanation:

DFDs help in understanding how data flows through a system, making them useful for both analysis and design phases.

36. What is the purpose of a Foreign Key Constraint?

A foreign key constraint maintains referential integrity between two tables by ensuring that every non-NULL value in the foreign key column matches an existing primary key (or unique key) value in the referenced table.

Explanation:

Foreign key constraints prevent orphan records and ensure that relationships between tables are properly maintained.

37. What are Triggers in a database?

Triggers are automated procedures executed in response to certain events, such as insertions, updates, or deletions in a database table.

Explanation:

Triggers are useful for enforcing business rules or automatically updating related data when changes occur in the database.
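
A minimal SQLite sketch, assuming hypothetical `accounts` and `balance_audit` tables: an `AFTER UPDATE` trigger writes an audit row automatically whenever an account balance actually changes, without the application issuing a second statement.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (account_id INTEGER PRIMARY KEY, balance REAL NOT NULL);
CREATE TABLE balance_audit (
    account_id  INTEGER,
    old_balance REAL,
    new_balance REAL,
    changed_at  TEXT DEFAULT CURRENT_TIMESTAMP
);

-- Fires after each row update of balance, but only when the value changes.
CREATE TRIGGER trg_balance_audit
AFTER UPDATE OF balance ON accounts
FOR EACH ROW
WHEN OLD.balance <> NEW.balance
BEGIN
    INSERT INTO balance_audit (account_id, old_balance, new_balance)
    VALUES (OLD.account_id, OLD.balance, NEW.balance);
END;

INSERT INTO accounts VALUES (1, 100.0);
""")

conn.execute("UPDATE accounts SET balance = 80.0 WHERE account_id = 1")
conn.execute("UPDATE accounts SET balance = 80.0 WHERE account_id = 1")  # no change, no audit row
audit = conn.execute(
    "SELECT account_id, old_balance, new_balance FROM balance_audit").fetchall()
print(audit)
```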

38. What is an ER (Entity-Relationship) Model?

An ER model is a conceptual representation of the data and relationships in a system. It focuses on defining entities, attributes, and relationships.

Explanation:

ER models provide a high-level, abstract view of the database, making it easier to understand and design complex data systems.

39. What is a Schema-less Database?

A schema-less database, often used in NoSQL systems, allows you to store data without a predefined schema. This offers flexibility in handling unstructured or semi-structured data.

Explanation:

Schema-less databases are ideal for big data and applications where the data structure is unpredictable or evolving.
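
The snippet below is a plain-Python illustration, not a real NoSQL client: it treats a list of JSON documents as a hypothetical "collection" in which each record carries its own structure. Because no schema is declared up front, the reading code has to interpret the structure and tolerate missing or extra fields (often called schema-on-read).

```python
import json

# Each document has its own shape; no table definition is declared in advance.
documents = [
    '{"id": 1, "name": "Ana", "email": "ana@example.com"}',
    '{"id": 2, "name": "Bo", "phones": ["+47 555 0100"], "loyalty": {"tier": "gold"}}',
    '{"id": 3, "name": "Cy"}',
]
collection = [json.loads(doc) for doc in documents]

def loyalty_tier(doc):
    # Missing nested fields fall back to a default instead of failing.
    return doc.get("loyalty", {}).get("tier", "none")

tiers = {doc["name"]: loyalty_tier(doc) for doc in collection}
print(tiers)
```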

Conclusion

Data modeling is a crucial skill for anyone involved in database design, data analysis, or business intelligence. Mastering these interview questions will not only help you prepare for your next data modeling interview but also deepen your understanding of core data concepts. From understanding the basics like ER diagrams and normalization to more advanced topics like fact tables and star schemas, these questions cover a wide range of data modeling topics.

To advance your career, ensure you have a solid understanding of these concepts and how they relate to real-world scenarios.