Scenario-based software testing interview questions are designed to evaluate a tester’s problem-solving skills and experience in handling complex testing situations. These questions often require testers to demonstrate their approach to real-world testing challenges, including test case design, defect management, and risk analysis. For experienced testers, these questions carry particular weight because they reveal how deeply a candidate understands testing techniques and how well they apply them in practice.

In this article, we’ll walk through the top 37 scenario-based software testing interview questions and their answers. The questions are aimed at experienced professionals, and each answer is followed by an explanation of the reasoning behind it.

Top 37 Scenario-Based Software Testing Interview Questions and Answers

1. How would you handle a situation where a critical defect is found close to the release date?

In this scenario, the immediate priority is to assess the impact of the defect on the application. I would escalate the issue to the project stakeholders, outlining potential risks, and suggest possible workarounds or patches if available. The decision to proceed with the release or delay it should be a collaborative one based on the severity of the defect.

Explanation:

Handling critical defects near a release requires effective communication with stakeholders and an understanding of risk management.

2. How do you prioritize test cases in a situation with limited time for testing?

When time is limited, I prioritize test cases based on risk and impact. Critical functionalities that affect the core business or customer experience are tested first. Next, I focus on areas that have undergone recent changes or have a history of defects. Broad regression runs and low-risk areas are covered last, if time permits.

Explanation:

Prioritizing test cases based on risk and impact ensures that the most critical functionalities are verified, even under time constraints.
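The risk-based ordering described above can be sketched in Python. This is a minimal illustration rather than a prescribed method: the `TestCase` fields, the 1 to 5 scoring scales, and the `plan_run` helper are hypothetical, and risk is assumed to be impact multiplied by likelihood of failure.

```python
from dataclasses import dataclass


@dataclass
class TestCase:
    name: str
    impact: int      # hypothetical scale: 1 (cosmetic) to 5 (core business flow broken)
    likelihood: int  # hypothetical scale: 1 (stable area) to 5 (recently changed or defect-prone)
    minutes: int     # estimated execution time

    @property
    def risk(self) -> int:
        # Assumed scoring model: risk = impact x likelihood.
        return self.impact * self.likelihood


def plan_run(cases: list[TestCase], budget_minutes: int) -> list[TestCase]:
    """Greedily pick the highest-risk cases that still fit in the time budget."""
    selected, used = [], 0
    for case in sorted(cases, key=lambda c: c.risk, reverse=True):
        if used + case.minutes <= budget_minutes:
            selected.append(case)
            used += case.minutes
    return selected


cases = [
    TestCase("checkout_payment", impact=5, likelihood=4, minutes=30),
    TestCase("login", impact=5, likelihood=2, minutes=10),
    TestCase("avatar_upload", impact=2, likelihood=3, minutes=15),
    TestCase("footer_links", impact=1, likelihood=1, minutes=5),
]
for case in plan_run(cases, budget_minutes=45):
    print(case.name, case.risk)
```

With a 45-minute budget this selects `checkout_payment`, `login`, and `footer_links`; `avatar_upload` is skipped because it no longer fits. Greedy selection is simple to explain in a test plan, but it does not always pack the budget optimally.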

3. You are testing a new feature, and the development team says it’s complete, but you notice gaps in the implementation. What will you do?

I would first gather evidence of the gaps through testing, then communicate the issues to the development team with a clear explanation of the missing functionality or discrepancies. It’s important to highlight how these gaps may affect the user experience or system integrity. Collaboration with the development team is essential to ensure the feature is thoroughly reworked.

Explanation:

Identifying and clearly communicating implementation gaps ensures that the feature is complete and thoroughly tested before release.

4. How do you approach testing a system where the requirements are not fully defined?

In the absence of clear requirements, I focus on exploratory testing and gather information from key stakeholders. I would also perform risk-based testing, ensuring that critical functionalities are tested. Regular communication with business analysts and product owners is crucial to refine the understanding of requirements as the testing progresses.

Explanation:

Testing without well-defined requirements calls for flexibility and continuous communication with stakeholders.

5. What steps would you take if a feature fails during UAT (User Acceptance Testing)?

First, I would identify and document the failure, ensuring that all relevant information is captured. Then, I would work with both the development and UAT teams to reproduce the issue. If it’s a critical bug, I’d escalate it for a fix. Communication with the UAT team is crucial to ensure their concerns are addressed promptly.

Explanation:

Managing UAT failures involves quick identification, documentation, and coordination with development and user teams.

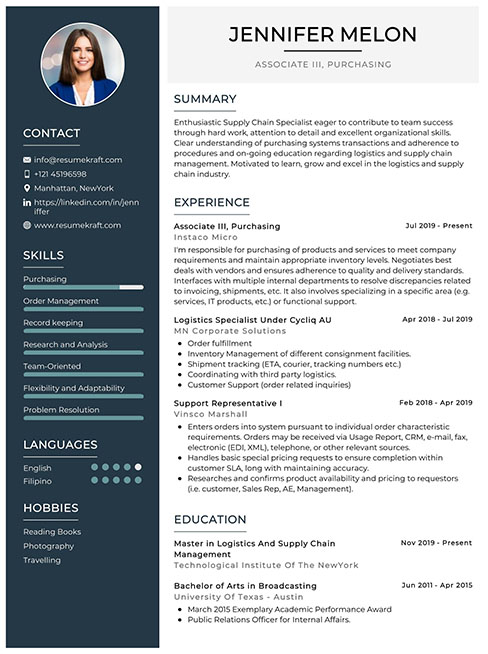

Build your resume in just 5 minutes with AI.

6. How would you test an application that integrates with multiple external systems?

I would begin by identifying the critical points of integration, including API calls and data exchange. Then, I’d focus on validating the data consistency, error handling, and response time of each external system. Test cases would cover scenarios for successful integration, failure cases, and edge cases.

Explanation:

Integration testing ensures that the application communicates effectively with external systems under various conditions.
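One way to cover the failure cases is to replace the external system with a test double. The Python sketch below uses `unittest.mock`; `RateService`, its `fetch_rate` client call, and the fall-back-to-cache behavior are hypothetical stand-ins for whatever integration the application actually has.

```python
from unittest import mock


class RateService:
    """Hypothetical wrapper around an external exchange-rate provider."""

    def __init__(self, client, cache):
        self.client = client  # the external system; replaced by a mock in tests
        self.cache = cache    # last known good values

    def rate(self, currency):
        try:
            value = self.client.fetch_rate(currency)
        except TimeoutError:
            # Assumed design: fall back to the last cached value on timeout.
            return self.cache[currency], "cached"
        # Validate the payload instead of trusting the provider.
        if isinstance(value, bool) or not isinstance(value, (int, float)) or value <= 0:
            raise ValueError(f"invalid rate from provider: {value!r}")
        self.cache[currency] = value
        return value, "live"


client = mock.Mock()
service = RateService(client, cache={"EUR": 0.90})

# Success case: the live value is returned and cached.
client.fetch_rate.return_value = 0.92
assert service.rate("EUR") == (0.92, "live")

# Failure case: the provider times out, so the cached value is used.
client.fetch_rate.side_effect = TimeoutError
assert service.rate("EUR") == (0.92, "cached")

# Edge case: a malformed payload is rejected.
client.fetch_rate.side_effect = None
client.fetch_rate.return_value = "N/A"
try:
    service.rate("EUR")
except ValueError:
    rejected = True
else:
    rejected = False
assert rejected
```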

7. How do you handle flaky or intermittent test failures?

For intermittent failures, I start by isolating the specific conditions under which the failures occur. This could involve reviewing logs, running tests in different environments, or checking the configuration. Once the root cause is identified, I work on stabilizing the tests or reporting the issue to the development team if it’s application-related.

Explanation:

Handling flaky tests requires a systematic approach to isolate and resolve environmental or configuration-related issues.
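A first step in isolating a flaky test is confirming that it really is intermittent. The Python sketch below re-runs a test callable and classifies the outcome; the `classify` helper and the simulated tests are hypothetical, and in practice CI history across many runs often supplies the same signal.

```python
from collections import Counter
from typing import Callable


def classify(test: Callable[[], None], runs: int = 10) -> str:
    """Re-run a test several times and classify its behavior."""
    outcomes = Counter()
    for _ in range(runs):
        try:
            test()
            outcomes["pass"] += 1
        except AssertionError:
            outcomes["fail"] += 1
    if outcomes["fail"] == 0:
        return "stable-pass"
    if outcomes["pass"] == 0:
        return "stable-fail"
    return "flaky"  # mixed results with identical inputs


def always_ok():
    pass


def always_broken():
    raise AssertionError("expected 2, got 1")


calls = {"n": 0}


def every_third_run_fails():
    # Simulates an intermittent failure, e.g. a race condition lost every third run.
    calls["n"] += 1
    if calls["n"] % 3 == 0:
        raise AssertionError("timed out waiting for element")


for t in (always_ok, always_broken, every_third_run_fails):
    print(t.__name__, classify(t))
```

A test classified as `flaky` points toward timing, shared state, or environment differences, whereas `stable-fail` is more likely a genuine defect or a broken test.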

8. What would you do if the test environment is down or not functioning properly?

In this situation, I would first communicate the issue to the relevant teams to get an estimated downtime. Meanwhile, I’d shift focus to tasks that don’t depend on the environment, such as test case creation, test data preparation, or defect triage. Keeping the testing process moving forward even in the absence of the environment is essential.

Explanation:

Planning alternative tasks ensures productivity even during environment downtimes.

9. How do you validate that the fixes provided by the development team address the reported defects?

Once the fix is deployed, I rerun the test cases associated with the defect to verify if the issue is resolved. I also perform regression testing around the affected areas to ensure that the fix hasn’t introduced new issues. Clear documentation of the retesting results is critical for tracking.

Explanation:

Validating fixes involves both specific retesting and surrounding area regression to ensure quality.

10. How would you test an application where security is a top priority?

Security testing requires a combination of techniques, including vulnerability scanning, penetration testing, and validation of security controls such as authentication and authorization. I would also verify that sensitive data is encrypted in transit and at rest, and check the application against established guidance such as the OWASP Top 10. Test cases would be designed to simulate potential attacks, such as injection attempts, and to test the system’s resilience.

Explanation:

Security testing is vital for applications dealing with sensitive data and requires a multifaceted approach.
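Injection attempts are a typical example of a simulated breach. The Python sketch below uses an in-memory SQLite database and a hypothetical `find_user` lookup to check that classic SQL injection payloads are treated as plain data; a real security test would send similar payloads to the application's actual inputs, usually alongside an automated scanner.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user(username):
    # Parameterized query: the driver binds username as data, never as SQL text.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


INJECTION_PAYLOADS = [
    "' OR '1'='1",
    "alice'; DROP TABLE users; --",
    "' UNION SELECT id, name FROM users --",
]

for payload in INJECTION_PAYLOADS:
    # A query built by string concatenation could return extra rows for these inputs;
    # the parameterized query must match nothing.
    assert find_user(payload) == [], f"payload leaked data: {payload!r}"

# The table is intact and legitimate lookups still work.
assert conn.execute("SELECT COUNT(*) FROM users").fetchone()[0] == 2
assert find_user("alice") == [(1, "alice")]
```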

11. How do you handle a situation where your test cases are outdated due to frequent changes in the application?

I continuously review and update the test cases during each sprint or release cycle. Automation can help reduce the overhead of maintaining large test suites. Additionally, I ensure that any changes are reflected in the test management tools to keep track of updated test scenarios.

Explanation:

Frequent updates require continuous test case maintenance to ensure that tests remain relevant and accurate.

12. How would you ensure the quality of an application with a tight release deadline?

I would prioritize high-risk and high-impact areas for testing, focusing on core functionalities and business-critical components. Automation can be used for regression testing to save time. Clear communication with stakeholders about risks and testing progress is crucial to balance quality and deadlines.

Explanation:

Balancing quality and deadlines involves smart prioritization and leveraging automation for efficient testing.

13. What approach do you take when the business logic of the application is complex?

For complex business logic, I break down the requirements into smaller, manageable units and create test cases that cover all possible scenarios, including edge cases. I also collaborate with business analysts and developers to ensure all aspects of the logic are understood and covered.

Explanation:

Thorough testing of complex logic requires a clear understanding of business rules and detailed test coverage.
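Breaking a rule into conditions and testing every combination at its boundaries is often done with a decision table. The Python sketch below applies the idea to a made-up discount rule (10% off orders of 100 or more, an extra 5% for loyalty members, with the combined discount capped at 12%); the rule and `discount_rate` are hypothetical.

```python
from decimal import Decimal

THRESHOLD = Decimal("100")


def discount_rate(order_total: Decimal, is_member: bool) -> Decimal:
    rate = Decimal("0")
    if order_total >= THRESHOLD:
        rate += Decimal("0.10")
    if is_member:
        rate += Decimal("0.05")
    return min(rate, Decimal("0.12"))  # cap on the combined discount


# Decision table: every combination of the two conditions,
# using values just below and exactly at the threshold.
DECISION_TABLE = [
    # (order_total,     member, expected)
    (Decimal("99.99"),  False,  Decimal("0")),
    (Decimal("100.00"), False,  Decimal("0.10")),
    (Decimal("99.99"),  True,   Decimal("0.05")),
    (Decimal("100.00"), True,   Decimal("0.12")),  # 15% would exceed the cap
]

for total, member, expected in DECISION_TABLE:
    actual = discount_rate(total, member)
    assert actual == expected, (total, member, actual)
```

`Decimal` is used instead of `float` so that the boundary comparisons and sums are exact, which matters when an edge case sits precisely on a threshold.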

14. How do you manage defects that are not reproducible?

For non-reproducible defects, I gather as much information as possible, including logs, screenshots, and the exact sequence of actions that led to the failure. I attempt to replicate the environment and conditions in which the defect was found. If it still cannot be reproduced, I collaborate with the development team to investigate further.

Explanation:

Non-reproducible defects require detailed investigation and collaboration to identify root causes.

15. How do you test applications for performance under high load conditions?

I design load tests to simulate high user traffic and stress the system to identify performance bottlenecks. Tools like JMeter or LoadRunner are used to generate the load, and I monitor key metrics such as response time, CPU usage, and memory consumption. I report any performance degradation to the development team for optimization.

Explanation:

Load testing ensures that the application performs efficiently under high user traffic conditions.
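Dedicated tools such as JMeter or LoadRunner handle this at scale, but the core idea (many concurrent requests, each timed, summarized as percentiles and an error rate) fits in a short Python sketch. The `fake_endpoint` target is a hypothetical stand-in for a real request, and a thread pool on a single machine cannot generate production-level load.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(target):
    """Run one request and return (latency_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        target()
        ok = True
    except Exception:
        ok = False
    return time.perf_counter() - start, ok


def run_load(target, total_requests=200, concurrency=20):
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: timed_call(target), range(total_requests)))
    latencies = [latency for latency, _ in results]
    errors = sum(1 for _, ok in results if not ok)
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "requests": total_requests,
        "error_rate": errors / total_requests,
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
    }


def fake_endpoint():
    time.sleep(0.005)  # hypothetical stand-in for an HTTP call to the system under test


report = run_load(fake_endpoint, total_requests=100, concurrency=10)
print(report)
```

Reporting p95 alongside the median makes tail latency visible, which an average tends to hide.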

16. How would you handle a situation where the client reports issues that you couldn’t reproduce during testing?

In this case, I would first gather all the necessary information from the client, such as the environment, steps to reproduce, and logs. I would then replicate the client’s environment as closely as possible to reproduce the issue. If needed, I’d arrange a session with the client to observe the issue firsthand.

Explanation:

Reproducing client-reported issues often requires close collaboration and environment replication.

17. What is your approach to testing an application with a tight budget?

When budget constraints exist, I focus on risk-based testing, targeting critical and high-risk areas first. Exploratory testing can also be employed to quickly uncover defects without the need for extensive test case creation. Automating repetitive tasks can reduce costs over time, although the automation itself requires an up-front investment.

Explanation:

Effective testing under budget constraints focuses on critical areas and minimizes unnecessary costs.

18. How do you approach regression testing in a project with frequent releases?

To manage frequent releases, I would automate the regression tests to ensure that key functionalities are tested quickly and consistently. I would prioritize automation for the most critical test cases and ensure that the suite is updated with each release. This helps maintain quality while keeping up with the release cadence.

Explanation:

Automating regression tests allows for efficient and consistent verification across frequent releases.

19. How do you test an application that has multiple language support?

I would first ensure that test cases cover all languages supported by the application. Testing would include validation of language-specific content, character encoding, and localization of date and currency formats. I would also verify that the application handles language switching seamlessly.

Explanation:

Testing for multilingual support ensures that the application works correctly for users across different regions and languages.
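Part of this can be automated by comparing translation catalogs against the base language. The Python sketch below assumes, hypothetically, that messages are stored as per-locale dictionaries with `{name}`-style placeholders; it flags missing keys and placeholders that were altered during translation.

```python
import re

PLACEHOLDER = re.compile(r"\{(\w+)\}")


def check_catalogs(catalogs, base="en"):
    """Compare every locale with the base locale; return a list of problems."""
    problems = []
    for locale, messages in catalogs.items():
        if locale == base:
            continue
        for key, base_text in catalogs[base].items():
            if key not in messages:
                problems.append(f"{locale}: missing key '{key}'")
            elif set(PLACEHOLDER.findall(base_text)) != set(PLACEHOLDER.findall(messages[key])):
                problems.append(f"{locale}: placeholder mismatch in '{key}'")
    return problems


catalogs = {
    "en": {"greeting": "Hello, {name}!", "cart.total": "Total: {amount}"},
    "de": {"greeting": "Hallo, {name}!", "cart.total": "Summe: {betrag}"},
    "ja": {"greeting": "こんにちは、{name}さん！"},
}
for problem in check_catalogs(catalogs):
    print(problem)
```

This catches the renamed `{betrag}` placeholder in German and the missing Japanese string; visual issues such as truncated text still need manual or screenshot-based review.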

20. How would you manage a scenario where testing resources are shared among multiple teams?

In this scenario, I would coordinate with the other teams to schedule shared resources, such as test environments and devices, so that each team gets the access it needs. Effective communication and time management are key to avoiding conflicts. Automating repetitive tasks also reduces the manual effort that competes for those shared resources.

Explanation:

Managing shared resources involves effective coordination and prioritization to ensure smooth testing.

21. How do you validate that the application meets performance standards during peak usage times?

I use performance testing tools to simulate peak loads and monitor the system’s behavior. The test focuses on response time, throughput, error rates, and system stability. Monitoring tools help identify any performance degradation during peak usage, and the results are shared with the development team for optimization.

Explanation:

Validating performance during peak usage ensures the application’s stability under maximum load conditions.

22. What steps do you take to ensure backward compatibility during testing?

To ensure backward compatibility, I test the application on older versions of the operating system, browsers, and devices to verify that it works as expected. I also check if the application can handle data or files from previous versions without any issues. Collaboration with the development team is crucial for identifying any known compatibility risks.

Explanation:

Backward compatibility testing ensures that updates do not break functionality for users on older platforms.
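Checking that files from previous versions still load can be automated with fixtures saved by older releases. In the Python sketch below, both JSON schema versions and the `load_profile` function are hypothetical: version 1 stored a single `fullname` field, and version 2 splits it into first and last name.

```python
import json


def load_profile(raw: str) -> dict:
    data = json.loads(raw)
    version = data.get("schema_version", 1)  # hypothetical: v1 files had no version field
    if version == 1:
        first, _, last = data["fullname"].partition(" ")
        return {"first_name": first, "last_name": last, "email": data["email"]}
    if version == 2:
        return {k: data[k] for k in ("first_name", "last_name", "email")}
    raise ValueError(f"unsupported schema_version {version}")


# Fixtures as they would have been written by the old and new releases.
V1_FILE = '{"fullname": "Ada Lovelace", "email": "ada@example.test"}'
V2_FILE = ('{"schema_version": 2, "first_name": "Ada", '
           '"last_name": "Lovelace", "email": "ada@example.test"}')

# An old file must produce the same in-memory profile as a current one.
assert load_profile(V1_FILE) == load_profile(V2_FILE)
```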

23. How would you handle testing when major features are still under development?

In this case, I would perform testing on the available components while collaborating closely with the development team to understand the progress of the remaining features. I’d focus on integration testing for completed modules and prepare for end-to-end testing once all features are integrated.

Explanation:

Testing alongside development requires flexibility and clear communication with the development team.

24. How do you manage test data in a complex testing environment?

I ensure that test data is relevant, consistent, and anonymized if dealing with sensitive information. Automated scripts can help generate test data for large test suites. Regular reviews of test data are necessary to ensure that it aligns with current test requirements.

Explanation:

Managing test data effectively ensures accurate test results and compliance with data privacy regulations.
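A common pattern is to replace personal fields with consistent pseudonyms before data reaches a test environment. The Python sketch below uses a keyed hash (HMAC-SHA256), so the same email always maps to the same alias and relationships between records survive. The field names and key are hypothetical, and pseudonymized data may still count as personal data under regulations such as GDPR.

```python
import hashlib
import hmac

SECRET_KEY = b"test-env-only-key"  # hypothetical; keep the real key out of source control


def pseudonymize_email(email: str) -> str:
    # Keyed hash: the same input always maps to the same alias, so joins across tables still work.
    digest = hmac.new(SECRET_KEY, email.strip().lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}@example.test"


def anonymize(rows):
    # Replace direct identifiers; leave non-personal fields (like "plan") untouched.
    return [
        {**row, "name": f"Customer {row['id']}", "email": pseudonymize_email(row["email"])}
        for row in rows
    ]


production_rows = [
    {"id": 1, "name": "Ada Lovelace", "email": "Ada@Example.com", "plan": "pro"},
    {"id": 2, "name": "Alan Turing", "email": "alan@example.org", "plan": "free"},
]
test_rows = anonymize(production_rows)
for row in test_rows:
    print(row)
```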

25. How would you test a mobile application that needs to function on multiple devices?

I perform cross-device testing using both real devices and emulators to ensure the app functions properly on different screen sizes, operating systems, and hardware configurations. Testing would cover performance, responsiveness, and compatibility across devices.

Explanation:

Mobile app testing across devices ensures that the application works seamlessly on various platforms and hardware configurations.

26. What approach would you take to test the scalability of a cloud-based application?

For scalability testing, I simulate increased loads over time to assess how the cloud infrastructure scales. Key metrics such as response time, latency, and resource utilization are monitored. I would also test the application’s ability to scale both vertically (adding more resources to existing machines) and horizontally (adding more machines).

Explanation:

Scalability testing ensures that cloud applications can handle growing demands without performance degradation.

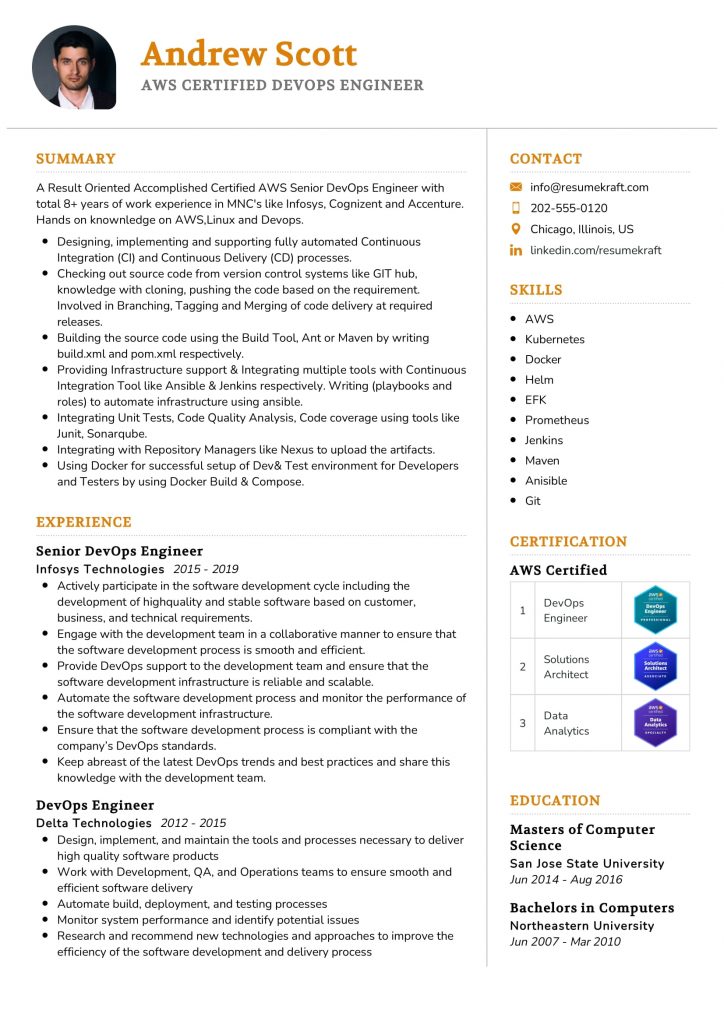

Build your resume in 5 minutes

Our resume builder is easy to use and will help you create a resume that is ATS-friendly and will stand out from the crowd.

27. How do you manage testing for continuous integration (CI) pipelines?

In a CI environment, I automate key tests (such as unit, integration, and regression tests) to run with each code commit. Any failures in the pipeline are immediately addressed, and I ensure that test coverage is sufficient to catch major issues. Clear documentation and reporting are key to maintaining the quality of the CI pipeline.

Explanation:

Testing in a CI environment requires robust automation and quick feedback loops to maintain code quality.

28. What is your approach to testing APIs in an application?

For API testing, I verify that the API endpoints return the expected data and handle errors gracefully. I use tools like Postman or REST Assured to create automated tests for both functional and performance aspects of the API. Testing includes validating response codes, data formats, and security checks such as authentication and authorization.

Explanation:

API testing ensures that the backend services are functional, reliable, and secure for communication with the application.
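The checks listed above (status codes, data format, authentication) can be written as plain assertions. To keep the example self-contained, the Python sketch below calls a hypothetical stub endpoint in-process through the standard WSGI interface instead of over the network; against a real API, Postman, REST Assured, or any HTTP client would send the equivalent requests.

```python
import json
from wsgiref.util import setup_testing_defaults

USERS = {1: {"id": 1, "name": "Ada"}}
TOKEN = "test-token"  # hypothetical bearer token


def stub_api(environ, start_response):
    """Hypothetical GET /users/<id> endpoint standing in for the real service."""

    def respond(status, body):
        payload = json.dumps(body).encode("utf-8")
        start_response(status, [("Content-Type", "application/json"),
                                ("Content-Length", str(len(payload)))])
        return [payload]

    if environ.get("HTTP_AUTHORIZATION") != f"Bearer {TOKEN}":
        return respond("401 Unauthorized", {"error": "unauthorized"})
    user_id = environ["PATH_INFO"].rsplit("/", 1)[-1]
    if not user_id.isdigit():
        return respond("400 Bad Request", {"error": "invalid id"})
    user = USERS.get(int(user_id))
    if user is None:
        return respond("404 Not Found", {"error": "not found"})
    return respond("200 OK", user)


def call(path, token=None):
    """Invoke the WSGI app in-process and return (status_code, content_type, json_body)."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in REQUEST_METHOD=GET and other required keys
    if token:
        environ["HTTP_AUTHORIZATION"] = f"Bearer {token}"
    captured = {}

    def start_response(status, headers):
        captured["status"] = int(status.split()[0])
        captured["headers"] = dict(headers)

    body = b"".join(stub_api(environ, start_response))
    return captured["status"], captured["headers"]["Content-Type"], json.loads(body)


# Happy path: status code, content type, and response shape.
status, content_type, body = call("/users/1", TOKEN)
assert status == 200 and content_type == "application/json"
assert set(body) == {"id", "name"} and isinstance(body["id"], int)

# Error handling and security checks.
assert call("/users/99", TOKEN)[0] == 404
assert call("/users/abc", TOKEN)[0] == 400
assert call("/users/1")[0] == 401
assert call("/users/1", "wrong-token")[0] == 401
```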

29. How do you ensure the accuracy of automated test scripts in a rapidly changing environment?

I continuously review and update the automated test scripts to align with the latest changes in the application. Test scripts are modularized to allow easy updates, and I maintain version control to track changes. Regular maintenance helps ensure that the automated tests remain accurate and effective.

Explanation:

Maintaining automated test scripts ensures that tests remain relevant even as the application evolves.

30. How would you test an application that is being migrated from on-premise to the cloud?

For a cloud migration, I focus on testing the data integrity during the migration process, verifying that all data is transferred correctly without any loss. I would also test for performance, security, and scalability in the cloud environment, ensuring that the application functions as expected after the migration.

Explanation:

Cloud migration testing ensures a smooth transition from on-premise systems to cloud infrastructure, maintaining data and functionality integrity.
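Data-integrity checks after a migration often compare row counts and checksums between source and target. The Python sketch below illustrates the idea with two in-memory SQLite databases as hypothetical stand-ins; when the source and target use different database engines, values would first need normalizing (for example, consistent decimal and timestamp formats) before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256 over all rows ordered by key)."""
    # Table and key names come from trusted migration config, not user input,
    # because SQL identifiers cannot be passed as query parameters.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


# Two in-memory databases stand in for the on-premise source and the cloud target.
source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
rows = [(1, "10.00"), (2, "25.50"), (3, "7.25")]
for conn in (source, target):
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)

assert table_fingerprint(source, "orders", "id") == table_fingerprint(target, "orders", "id")

# A single silently altered value is enough to change the fingerprint.
target.execute("UPDATE orders SET amount = '25.05' WHERE id = 2")
assert table_fingerprint(source, "orders", "id") != table_fingerprint(target, "orders", "id")
```

Matching counts alone would not have caught the altered amount, which is why the checksum is compared as well.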

31. How do you handle a scenario where a feature works in one environment but not in another?

I would compare the two environments to identify any configuration differences, such as operating systems, middleware, or network settings. Once the differences are identified, I work with the relevant teams to resolve any inconsistencies and ensure that the feature works consistently across environments.

Explanation:

Environment discrepancies can cause unexpected issues, so testing across multiple setups helps identify and resolve such problems.

32. How do you ensure that performance testing covers real-world scenarios?

To cover real-world scenarios, I gather data on expected user behavior, such as peak usage times, geographic distribution, and device types. I then simulate these conditions during performance testing to mimic actual usage patterns, ensuring that the application can handle real-world demands.

Explanation:

Simulating real-world scenarios ensures that the performance tests reflect actual user behavior and application load.

33. How would you test a real-time messaging application?

For a real-time messaging app, I would test latency, message delivery reliability, and system performance under various load conditions. I’d also validate that message ordering is consistent and test scenarios where users are offline or have poor network connectivity.

Explanation:

Real-time applications require testing for speed, reliability, and robustness under varying network conditions.
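Ordering and delivery guarantees can be checked by comparing what a test client sent with what another client received. The Python sketch below assumes, hypothetically, that each message carries a conversation ID and a per-conversation sequence number.

```python
from collections import defaultdict


def check_delivery(sent, received):
    """sent/received: lists of (conversation_id, sequence_number) tuples."""
    report = {"missing": [], "duplicates": [], "out_of_order": []}
    seen = set()
    highest_seq = defaultdict(int)  # highest sequence number delivered so far per conversation
    for msg in received:
        conversation, seq = msg
        if msg in seen:
            report["duplicates"].append(msg)
            continue
        seen.add(msg)
        if seq < highest_seq[conversation]:
            report["out_of_order"].append(msg)
        highest_seq[conversation] = max(highest_seq[conversation], seq)
    report["missing"] = [msg for msg in sent if msg not in seen]
    return report


sent = [("a", 1), ("a", 2), ("a", 3), ("b", 1)]
# Simulated delivery after a reconnect: a reordered message, a resend, and a lost message.
received = [("a", 1), ("a", 3), ("a", 2), ("a", 3)]
print(check_delivery(sent, received))
```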

34. How do you test the scalability of a microservices-based application?

I would simulate increased traffic across the microservices to assess how well they scale independently. Testing would include ensuring that load balancing works as expected and that the services can communicate effectively under heavy load. Monitoring tools help identify any bottlenecks in specific services.

Explanation:

Scalability testing of microservices ensures that each service can handle load independently and function as part of the larger system.

35. How do you approach testing for data integrity in an application with complex databases?

For data integrity testing, I validate that the data is correctly inserted, updated, and retrieved from the database according to business rules. I also test for referential integrity and ensure that any constraints (such as primary and foreign keys) are enforced. Automated scripts can be used to test large datasets.

Explanation:

Ensuring data integrity is crucial for applications that rely heavily on accurate and consistent database operations.
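Constraint enforcement is easiest to verify with negative tests: attempt writes that break a rule and confirm the database refuses them. The Python sketch below uses an in-memory SQLite database with a hypothetical customers/orders schema, plus a LEFT JOIN query that finds orphaned rows in data that may have been loaded while constraints were not enforced.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys unless this is set
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total REAL NOT NULL CHECK (total >= 0)
    );
    INSERT INTO customers (id, email) VALUES (1, 'ada@example.test');
    INSERT INTO orders (customer_id, total) VALUES (1, 42.0);
""")


def is_rejected(sql, params):
    """True if the database refuses the write with a constraint violation."""
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False


# Negative tests: each write breaks exactly one rule and must be refused.
assert is_rejected("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (999, 10.0))  # foreign key
assert is_rejected("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, -5.0))    # CHECK
assert is_rejected("INSERT INTO customers (email) VALUES (?)", ("ada@example.test",))     # UNIQUE
assert is_rejected("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, None))    # NOT NULL

# Orphan check for data that may have been bulk-loaded with constraints disabled.
ORPHANS = """
    SELECT o.id FROM orders AS o
    LEFT JOIN customers AS c ON c.id = o.customer_id
    WHERE c.id IS NULL
"""
assert conn.execute(ORPHANS).fetchall() == []
```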

36. How do you approach testing in Agile environments?

In Agile, I adopt a continuous testing approach where testing is integrated into every sprint. I focus on early identification of issues by testing as soon as a feature is developed. Automation is key to maintaining testing speed, and I work closely with developers and product owners to ensure that testing aligns with the sprint goals.

Explanation:

Agile testing requires flexibility and close collaboration with the development team to ensure continuous delivery of quality software.

37. How do you handle testing in a DevOps environment?

In a DevOps environment, I integrate testing into the CI/CD pipeline to ensure that testing is automated and occurs with each code deployment. I focus on creating comprehensive automated test suites that cover unit, integration, and performance tests. Collaboration with both development and operations teams is essential to maintain smooth releases.

Explanation:

DevOps testing emphasizes automation and continuous feedback to ensure smooth integration and delivery of software.

Conclusion

Scenario-based software testing interview questions challenge experienced testers to demonstrate their critical thinking, problem-solving, and real-world testing experience. By preparing for these questions, you can showcase your ability to manage complex testing environments, handle defects, and collaborate effectively with development teams.
