The field of artificial intelligence (AI) continues to grow rapidly, with AI researchers playing a critical role in advancing the technology. For professionals seeking a career in AI research, acing a technical interview is a vital step. The technical interview assesses not only the candidate’s knowledge of AI concepts but also their problem-solving skills, ability to implement solutions, and experience with AI tools and frameworks. In this article, we will cover the most common AI researcher interview questions and provide detailed explanations to help you prepare. Understanding the reasoning behind each question will boost your confidence and readiness for the interview.

Top 38 AI Researcher Technical Interview Questions

1. What is the difference between supervised and unsupervised learning?

Supervised learning involves training a model using labeled data, where the input-output pairs are known. Unsupervised learning, on the other hand, works with unlabeled data, and the model must identify patterns without explicit output labels.

Explanation

Supervised learning predicts outputs based on input features, while unsupervised learning clusters or groups data without pre-existing labels.

2. How do you handle missing data in a dataset?

Missing data can be handled by various techniques, such as imputation (filling missing values with the mean, median, or mode), using algorithms that support missing data, or removing the rows/columns that contain missing values, depending on the importance of the data.

Explanation

Handling missing data effectively ensures that the model remains robust and does not suffer from skewed results.
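As a minimal sketch of the imputation option mentioned above, the following uses only the Python standard library. The function name `impute` and the sample `ages` column are illustrative assumptions, not part of any particular library.

```python
from statistics import mean, median

def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = mean(observed)
    elif strategy == "median":
        fill = median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

# Hypothetical column with two missing entries.
ages = [22, None, 35, 41, None, 58]
print(impute(ages, "mean"))
print(impute(ages, "median"))
```

In an interview, it is worth adding that the fill value should be computed on the training split only and then reused for validation and test data, so that no information leaks across splits.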

3. Explain overfitting and underfitting in machine learning.

Overfitting occurs when a model is too complex and learns the noise in the training data, resulting in poor generalization. Underfitting happens when a model is too simple and fails to capture the underlying patterns in the data.

Explanation

The goal is to find a balance, using techniques like cross-validation or regularization to avoid overfitting and underfitting.

4. What is the difference between deep learning and traditional machine learning?

Traditional machine learning requires feature engineering, where humans must identify the important features, while deep learning uses neural networks to automatically learn features from raw data.

Explanation

Deep learning typically excels with large datasets and complex patterns, whereas traditional machine learning is more efficient with smaller, structured data.

5. What is a neural network, and how does it work?

A neural network is a model inspired by the human brain’s structure, consisting of layers of interconnected nodes (neurons) that process and transmit information. Data flows through these layers, and the network learns by adjusting weights during training to minimize error.

Explanation

Neural networks are particularly effective in solving complex tasks like image recognition, natural language processing, and more.

6. Explain the role of activation functions in neural networks.

Activation functions introduce non-linearity to the model, enabling it to learn and solve complex tasks. Common activation functions include ReLU, sigmoid, and tanh.

Explanation

Without activation functions, any stack of layers collapses into a single linear transformation, so the network could model nothing more complex than a linear relationship between inputs and outputs.
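The effect of non-linearity can be illustrated with a tiny two-layer network on scalar inputs. This is a sketch under assumed, hand-picked weights (`w1`, `b1`, `w2`, `b2` are arbitrary illustrative values): without an activation the output is an exact straight line, while ReLU bends it.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def two_layer(x, activation=None):
    """Two stacked layers on a scalar input, with arbitrary fixed weights."""
    w1, b1, w2, b2 = 2.0, -1.0, 3.0, 0.5
    hidden = w1 * x + b1
    if activation is not None:
        hidden = activation(hidden)
    return w2 * hidden + b2

# For a straight line, f(0) + f(2) equals 2 * f(1).
linear_gap = two_layer(0.0) + two_layer(2.0) - 2 * two_layer(1.0)
relu_gap = two_layer(0.0, relu) + two_layer(2.0, relu) - 2 * two_layer(1.0, relu)
print(linear_gap, relu_gap)
```

A zero gap for the activation-free network shows that the two layers are equivalent to one linear layer; the non-zero gap with ReLU shows the model is no longer a straight line.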

7. What is backpropagation, and why is it important?

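Backpropagation is the algorithm that computes the gradient of the loss function with respect to every weight in a neural network by applying the chain rule backward from the output layer to the input layer. An optimizer such as gradient descent then uses these gradients to update the weights and minimize the loss.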

Explanation

Backpropagation ensures that the neural network learns from its mistakes and gradually improves its accuracy.

8. What is the difference between gradient descent and stochastic gradient descent?

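Gradient descent (often called batch gradient descent) updates model parameters after computing the gradient over the entire dataset, while stochastic gradient descent (SGD) updates parameters after each data point or, in practice, each small mini-batch. Each SGD update is far cheaper to compute, so training often progresses faster, but the updates are noisier.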

Explanation

SGD is often preferred for large datasets due to its efficiency and lower memory requirements.
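The difference in update schedule can be sketched on a one-parameter regression problem. The toy data, learning rate, and function names below are assumptions chosen for illustration; the data is noiseless, so both methods should approach the same weight.

```python
import random

XS = [1.0, 2.0, 3.0, 4.0]
YS = [3.0, 6.0, 9.0, 12.0]  # generated by y = 3x, so the ideal weight is 3

def batch_gradient_descent(xs, ys, lr=0.02, epochs=50):
    """One update per epoch, using the gradient averaged over all samples."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w

def stochastic_gradient_descent(xs, ys, lr=0.02, epochs=50, seed=0):
    """One update per sample, visiting samples in a shuffled order each epoch."""
    rng = random.Random(seed)
    w = 0.0
    samples = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            w -= lr * 2 * x * (w * x - y)
    return w

print(batch_gradient_descent(XS, YS))
print(stochastic_gradient_descent(XS, YS))
```

With the same number of epochs, the SGD variant performs four times as many parameter updates here, which is the main reason it tends to make faster progress per pass over the data.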

9. How do you prevent a neural network from overfitting?

Techniques to prevent overfitting include using regularization methods like L2 (Ridge) regularization, dropout, early stopping, and increasing the amount of training data.

Explanation

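These techniques improve generalization either by constraining the network's effective complexity (regularization, dropout, early stopping) or by giving it more examples to learn from (additional training data).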

10. What are convolutional neural networks (CNNs), and where are they used?

CNNs are specialized neural networks designed for image processing tasks. They use convolutional layers to automatically learn spatial hierarchies of features, making them effective in tasks like image classification and object detection.

Explanation

CNNs are particularly useful in handling structured grid data such as images, video, and spatial data.

11. What are recurrent neural networks (RNNs), and where are they used?

RNNs are a class of neural networks that are designed for sequential data processing. They have loops that allow information to persist, making them suitable for tasks like language modeling and time-series prediction.

Explanation

RNNs are effective at capturing dependencies in sequences, unlike traditional neural networks.

12. What is the vanishing gradient problem, and how can it be resolved?

The vanishing gradient problem occurs when gradients become too small during backpropagation, hindering the training process. Solutions include using ReLU activation functions, batch normalization, and advanced architectures like LSTMs.

Explanation

By addressing the vanishing gradient problem, neural networks can learn more effectively, particularly in deep architectures.
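A rough numerical illustration of why gradients vanish: during backpropagation the local derivatives of each layer are multiplied together. The sketch below makes the simplifying assumptions that every weight is 1 and every layer sees the same pre-activation value, so the chained gradient is just the local derivative raised to the depth.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # never larger than 0.25

def relu_grad(x):
    return 1.0 if x > 0 else 0.0

def chained_gradient(local_grad, pre_activation, depth):
    """Product of identical local derivatives across `depth` layers."""
    return local_grad(pre_activation) ** depth

for depth in (1, 5, 10, 20):
    print(depth,
          chained_gradient(sigmoid_grad, 0.0, depth),
          chained_gradient(relu_grad, 1.0, depth))
```

Even at its maximum, the sigmoid derivative shrinks the signal by a factor of four per layer, whereas ReLU passes the gradient through unchanged for positive inputs.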

13. What is transfer learning, and why is it useful?

Transfer learning involves using a pre-trained model on a new, but related, task. It allows researchers to leverage previously acquired knowledge, reducing training time and improving performance on small datasets.

Explanation

Transfer learning is a powerful tool when training data is limited or expensive to obtain.

14. How do generative models differ from discriminative models?

Generative models learn the joint probability distribution of inputs and outputs, P(x, y), which enables them to generate new data points. Discriminative models learn the conditional distribution P(y | x), or the decision boundary between classes directly, which is all that a classification task requires.

Explanation

Generative models are used for tasks like image generation, while discriminative models are used for classification.

15. What are autoencoders, and how are they used?

Autoencoders are unsupervised neural networks used to compress data into a lower-dimensional representation and then reconstruct it. They are commonly used in tasks like data compression and anomaly detection.

Explanation

Autoencoders help identify important features in the data and are useful for dimensionality reduction.

16. Explain the importance of the bias-variance tradeoff.

The bias-variance tradeoff describes the tension between two sources of prediction error. Bias is the error introduced by overly simple assumptions that cause the model to miss real patterns, while variance is the error introduced by sensitivity to the particular training set. High bias leads to underfitting, while high variance leads to overfitting.

Explanation

Balancing bias and variance ensures optimal model performance across both training and unseen data.

17. How does reinforcement learning differ from supervised learning?

In reinforcement learning, agents learn by interacting with an environment and receiving feedback in the form of rewards or penalties. Supervised learning, on the other hand, involves learning from labeled datasets with a clear input-output relationship.

Explanation

Reinforcement learning is commonly used in real-world applications like robotics, gaming, and autonomous systems.

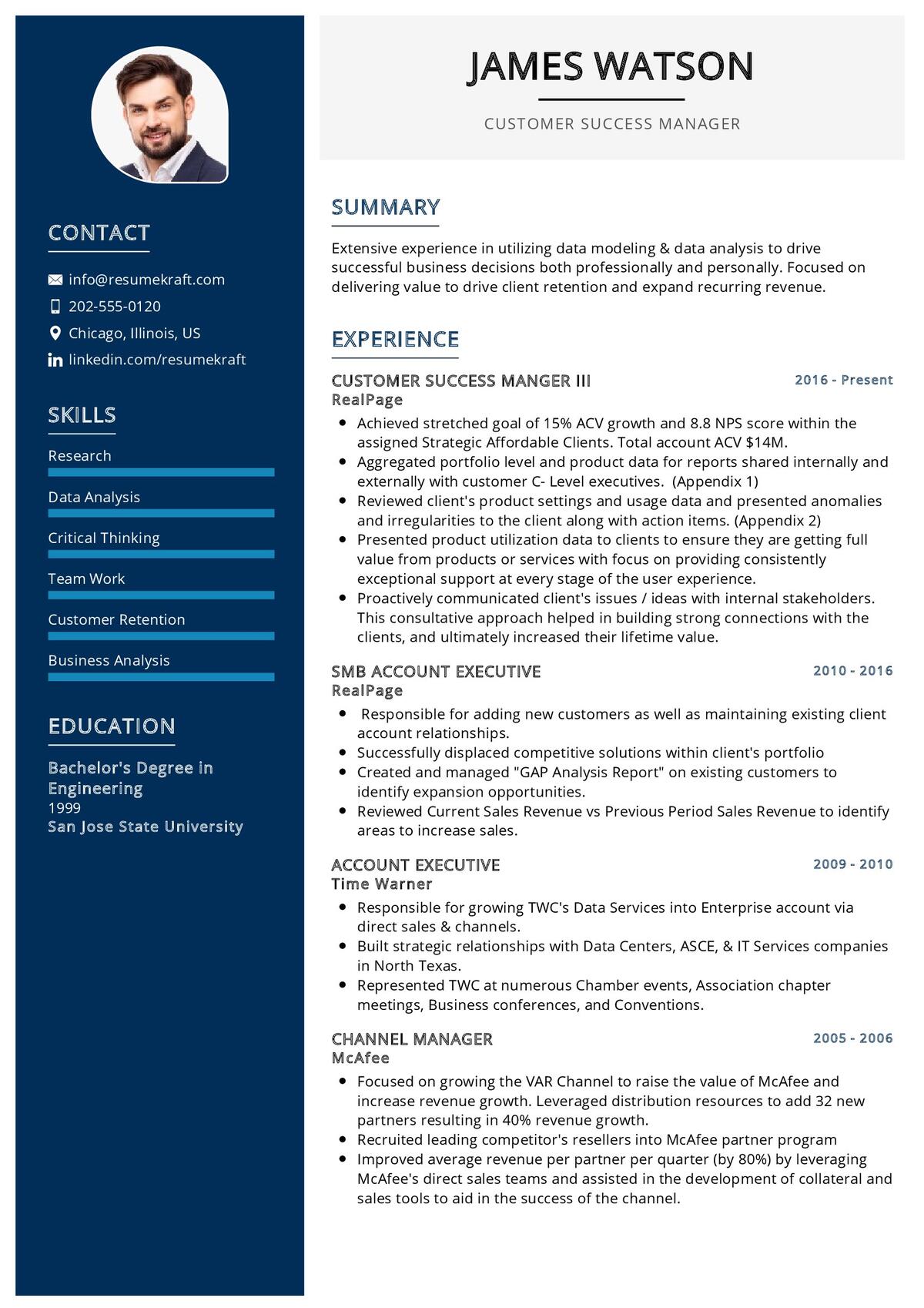

Planning to Write a Resume?

Check our job winning resume samples

18. What is Q-learning, and how does it work?

Q-learning is a reinforcement learning algorithm that aims to learn the optimal policy for an agent. It uses a Q-table to store the values of state-action pairs, guiding the agent toward the best action in each state to maximize long-term rewards.

Explanation

Q-learning allows agents to learn from their environment without needing a model of the environment.
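The Q-table update can be sketched on a toy environment. Everything about the environment here is an assumption made for illustration: a five-state corridor where the agent starts at state 0, moves left or right, and receives a reward of 1 only on reaching the last state. The hyperparameters `alpha`, `gamma`, and `epsilon` are likewise illustrative.

```python
import random
from collections import defaultdict

def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration on a 1-D corridor."""
    rng = random.Random(seed)
    actions = (-1, 1)                # move left or move right
    q = defaultdict(float)           # (state, action) -> estimated value
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                best = max(q[(state, a)] for a in actions)
                # Break ties randomly so the untrained agent still explores.
                action = rng.choice([a for a in actions if q[(state, a)] == best])
            next_state = min(max(state + action, 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            best_next = max(q[(next_state, a)] for a in actions)
            # Move the estimate toward reward + discounted best future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q_table = q_learning()
policy = [max((-1, 1), key=lambda a: q_table[(s, a)]) for s in range(4)]
print(policy)
```

The update uses only observed transitions and rewards, never a transition model, which is what makes the method model-free.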

19. What is the curse of dimensionality, and how does it affect machine learning models?

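The curse of dimensionality refers to the problems that arise as the number of features (dimensions) grows. The volume of the feature space increases exponentially, so a fixed amount of data becomes sparse, distances between points become less informative, and computational cost rises, all of which can degrade model performance.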

Explanation

Reducing dimensionality using techniques like PCA helps mitigate the curse and improve model accuracy.

20. Explain the concept of ensemble learning.

Ensemble learning combines multiple models to improve overall performance. Techniques like bagging, boosting, and stacking leverage the strengths of individual models, leading to better predictions.

Explanation

Ensemble methods often outperform single models by reducing errors due to bias and variance.

21. What are support vector machines (SVM), and how do they work?

SVM is a supervised learning algorithm used for classification and regression. For classification, it works by finding the hyperplane that separates the classes with the largest possible margin.

Explanation

SVMs are particularly effective in high-dimensional spaces and for classification tasks with clear margins between classes.

22. How do decision trees work, and what are their limitations?

A decision tree is a flowchart-like structure in which each internal node represents a test on a feature, each branch represents an outcome of that test, and each leaf node represents a class label (or a predicted value in regression). Decision trees are prone to overfitting and can change substantially in response to small changes in the data.

Explanation

Pruning techniques can help reduce overfitting in decision trees and improve generalization.

23. What is a random forest, and why is it used?

A random forest is an ensemble method that constructs multiple decision trees and merges their predictions to improve accuracy and reduce overfitting. Each tree is built from a random subset of data and features.

Explanation

Random forests are powerful for handling high-dimensional data and avoiding the limitations of single decision trees.

24. What is the role of cross-validation in model evaluation?

Cross-validation is used to assess the generalizability of a model. In k-fold cross-validation, the data is split into k subsets (folds); the model is trained on k-1 folds and validated on the remaining one, and the process is repeated k times so that each fold serves as the validation set exactly once. The k scores are then averaged.

Explanation

Cross-validation helps detect overfitting and provides a more reliable estimate of model performance than a single train/test split.
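The splitting step of k-fold cross-validation can be sketched as an index generator. This version assumes the data has already been shuffled and does no stratification; `k_fold_indices` is an illustrative name, not a library function.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size

for train_idx, val_idx in k_fold_indices(10, 3):
    print("train:", train_idx, "validate:", val_idx)
```

Each sample index appears in exactly one validation fold, so every data point contributes once to the performance estimate.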

25. What are some common loss functions used in machine learning?

Common loss functions include mean squared error (MSE) for regression, binary cross-entropy for binary classification, and categorical cross-entropy for multi-class classification.

Explanation

Choosing the correct loss function is crucial as it directly impacts the training and optimization of the model.
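The three losses named above can be written out directly. This is a plain-Python sketch for clarity rather than speed; the `eps` clipping value is an assumption used to avoid taking the logarithm of zero.

```python
import math

def mse(y_true, y_pred):
    """Mean squared error for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Average negative log-likelihood for labels in {0, 1}."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(y_true)

def categorical_cross_entropy(one_hot_rows, prob_rows, eps=1e-12):
    """Average negative log-probability assigned to the true class."""
    total = 0.0
    for one_hot, probs in zip(one_hot_rows, prob_rows):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))
    return total / len(one_hot_rows)

print(mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
print(binary_cross_entropy([1, 0], [0.9, 0.2]))
print(categorical_cross_entropy([[0, 1, 0]], [[0.2, 0.5, 0.3]]))
```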

26. What is regularization, and why is it important?

Regularization techniques like L1 (Lasso) and L2 (Ridge) regularization add a penalty to the loss function to prevent overfitting by discouraging large coefficients.

Explanation

Regularization helps maintain a balance between model complexity and performance, ensuring better generalization.
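The penalty terms themselves are simple to write down. In this sketch, `lam` stands for the regularization strength and `data_loss` for whatever unregularized loss the model already computes; both names are illustrative assumptions.

```python
def l1_penalty(weights, lam):
    """Lasso-style penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """Ridge-style penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def regularized_loss(data_loss, weights, lam, kind="l2"):
    """Add the chosen penalty to an already computed data loss."""
    if kind == "l1":
        return data_loss + l1_penalty(weights, lam)
    if kind == "l2":
        return data_loss + l2_penalty(weights, lam)
    raise ValueError(f"unknown penalty: {kind}")

weights = [3.0, -4.0]
print(regularized_loss(1.0, weights, lam=0.1, kind="l1"))
print(regularized_loss(1.0, weights, lam=0.1, kind="l2"))
```

A common follow-up point is that the L1 penalty tends to drive some weights exactly to zero, while the L2 penalty shrinks all weights smoothly without eliminating them.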

27. Explain the concept of Bayesian inference.

Bayesian inference involves updating the probability of a hypothesis as more evidence becomes available. It relies on Bayes’ Theorem to calculate the posterior probability based on prior knowledge and observed data.

Explanation

Bayesian methods are useful in scenarios where uncertainty is present and can be incorporated into model predictions.
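A single application of Bayes' Theorem can be worked through in a few lines. The numbers below describe a hypothetical diagnostic test and are assumptions chosen only to make the arithmetic concrete: a 1% prior, a 95% true-positive rate, and a 5% false-positive rate.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(hypothesis | positive evidence) via Bayes' Theorem."""
    # Total probability of observing the evidence under both hypotheses.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence

p = posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(round(p, 3))

# Updating again with a second, independent positive result:
print(round(posterior(prior=p, likelihood=0.95, false_positive_rate=0.05), 3))
```

Feeding the first posterior back in as the next prior shows the "updating as more evidence becomes available" step described above.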

28. What is the role of hyperparameter tuning in machine learning?

Hyperparameters are settings, such as the learning rate or the number of layers, that are chosen before training rather than learned from the data, and they must be tuned to achieve optimal performance. Techniques like grid search and random search are commonly used to find the best hyperparameter values.

Explanation

Proper hyperparameter tuning ensures the model is trained efficiently and performs well on unseen data.
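Grid search amounts to evaluating every combination in a parameter grid and keeping the best. In the sketch below, `toy_score` is a hypothetical stand-in for a real validation score (in practice it would train a model and evaluate it on held-out data), and the parameter names are illustrative.

```python
from itertools import product

def grid_search(param_grid, score_fn):
    """Try every combination in param_grid and return the best one."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(learning_rate, depth):
    # Hypothetical score surface that peaks at learning_rate=0.1, depth=4.
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2

grid = {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 4, 8]}
best_params, best_score = grid_search(grid, toy_score)
print(best_params)
```

Random search follows the same loop but samples a fixed number of combinations instead of enumerating all of them, which scales better when the grid is large.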

29. How does a recommendation system work?

Recommendation systems use algorithms to suggest items to users based on their preferences. Collaborative filtering and content-based filtering are common techniques, with hybrid methods combining both.

Explanation

Recommendation systems are widely used in e-commerce, social media, and content platforms to enhance user experience.

30. What is the purpose of feature selection, and how is it done?

Feature selection involves selecting the most important features from the dataset to improve model performance and reduce computational complexity. Techniques include filter methods, wrapper methods, and embedded methods.

Explanation

Feature selection enhances model interpretability and reduces overfitting by removing irrelevant or redundant features.

31. What is the difference between PCA and LDA?

Principal Component Analysis (PCA) is an unsupervised technique for reducing dimensionality by identifying principal components. Linear Discriminant Analysis (LDA) is a supervised technique used for dimensionality reduction, focusing on maximizing the separation between classes.

Explanation

PCA is useful for exploratory data analysis, while LDA is more suited for classification tasks.

32. What is the importance of data normalization?

Data normalization ensures that all features have the same scale, which improves the performance of machine learning algorithms that rely on distance-based metrics, like k-NN and SVM.

Explanation

Normalization helps models converge faster during training and avoids bias toward features with larger values.
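Two common ways to put features on the same scale are min-max scaling and standardization (z-scores). The sketch below uses only the standard library; the function names and the sample `incomes` column are illustrative assumptions.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature carries no information
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

incomes = [10.0, 20.0, 30.0]
print(min_max_scale(incomes))
print(standardize(incomes))
```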

33. How do you evaluate the performance of a classification model?

The performance of a classification model can be evaluated using metrics like accuracy, precision, recall, F1-score, and AUC-ROC. Each metric provides insight into different aspects of model performance.

Explanation

Evaluating models on multiple metrics ensures that they perform well across various conditions and data distributions.
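Accuracy, precision, recall, and F1-score all derive from the four confusion-matrix counts. The following sketch assumes binary labels where 1 is the positive class; the example labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

On imbalanced data, accuracy alone can look strong while recall on the minority class is poor, which is why interviewers usually expect more than one metric to be mentioned.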

34. What is an attention mechanism in neural networks?

The attention mechanism allows neural networks to focus on specific parts of the input when making predictions. It is widely used in natural language processing (NLP) tasks like machine translation and text summarization.

Explanation

Attention mechanisms improve the performance of sequence models by enabling them to capture important information more effectively.
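One widely used form of attention is scaled dot-product attention, which the text above does not name specifically, so treat this as one illustrative variant. The sketch handles a single query vector in plain Python; the `keys` and `values` are made-up toy data.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
output, weights = attention([4.0, 0.0], keys, values)
print(weights)
print(output)
```

The query is most similar to the first key, so the first value vector receives the largest weight and dominates the output, which is the "focus on specific parts of the input" behavior described above.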

35. What is a GAN, and how does it work?

Generative Adversarial Networks (GANs) consist of two networks: a generator and a discriminator. The generator tries to create realistic data, while the discriminator tries to distinguish between real and generated data. The two networks are trained together in a game-like scenario.

Explanation

GANs are popular for generating realistic images, videos, and other types of data.

36. What is the role of reinforcement learning in AI research?

Reinforcement learning (RL) plays a crucial role in AI research, particularly in areas like robotics, gaming, and autonomous systems. RL algorithms allow agents to learn optimal behaviors by interacting with their environment and maximizing long-term rewards.

Explanation

RL enables AI systems to make decisions based on dynamic and complex environments, leading to innovative solutions.

37. How does AI research contribute to autonomous systems?

AI research drives the development of autonomous systems by providing algorithms and models that enable machines to perceive, reason, and make decisions without human intervention. This includes self-driving cars, drones, and robotic systems.

Explanation

AI research enables breakthroughs in autonomy, resulting in more sophisticated, efficient, and safer systems.

38. What ethical considerations are important in AI research?

AI research must address ethical concerns like fairness, transparency, accountability, and the potential societal impact of AI technologies. Ensuring that AI systems do not perpetuate bias or harm is essential for responsible AI development.

Explanation

Ethical AI research aims to create systems that benefit society while minimizing negative consequences.

Conclusion

Acing a technical interview for an AI researcher position requires a deep understanding of fundamental AI concepts, machine learning algorithms, and practical experience with various tools and techniques. By preparing with the common interview questions outlined in this article, candidates can build their confidence and improve their chances of success. From neural networks to ethical considerations in AI, these topics provide a comprehensive foundation for excelling in your AI researcher interview and pursuing a rewarding career in artificial intelligence.
