Project Manager Resume Keywords & Skills

A strong project manager resume is no longer just a list of your experience or job titles. In today's competitive job market, you need to use the specific terms that match the responsibilities, achievements, and skills employers are looking for. Recruiters and applicant tracking systems (ATS) rely heavily on these keywords to filter candidates.

If your project manager resume lacks the targeted phrases, it may never reach a real person, no matter how strong your experience is. This guide covers everything you need to know about project manager resume keywords, from their role in ATS screening to the most essential hard and soft skills to include.

- Understanding Project Manager Resume Keywords

- Sample Resume

- Why Project Manager Resume Keywords Matter

- How to Identify the Right Keywords

- 100+ Top Project Manager Resume Keywords and Skills

- 1. Core Project Management Skills

- 2. Methodologies & Frameworks

- 3. Tools & Software

- 4. Leadership & Communication Skills

- 5. Analytical & Strategic Skills

- 6. Industry-Specific Project Management Keywords

- 7. Certifications & Credentials

- 8. Soft Skills

- 9. Technical & Digital Skills

- 10. Action Verbs for Resume Bullet Points

- How to Integrate Keywords Effectively

- Project Manager Resume Summary Examples Using Keywords

- Common Mistakes to Avoid When Using Keywords

- Final Thoughts

Understanding Project Manager Resume Keywords

Resume keywords are the terms or short phrases that signal the skills, experience, and qualifications required for a project management role. They often mirror the wording used in job postings. For example, if a posting asks for familiarity with Agile methodologies or stakeholder management, using those exact phrases on your resume is likely to improve your chances of getting through ATS screening.

Keywords can belong to several categories:

- Core project management skills

- Technical tools and software

- Methodologies or frameworks

- Leadership and soft skills

- Certifications and professional credentials

- Industry-specific competencies

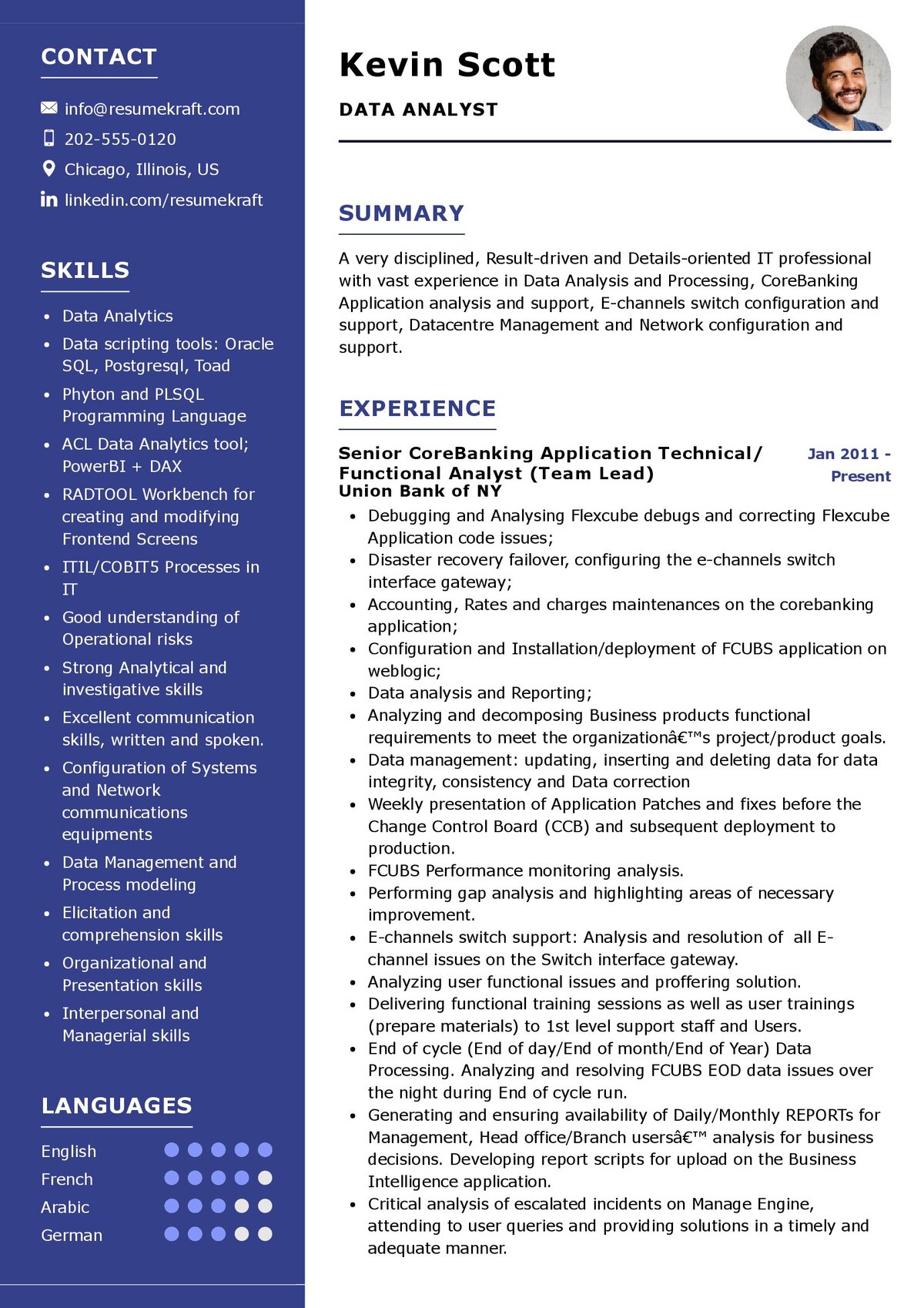

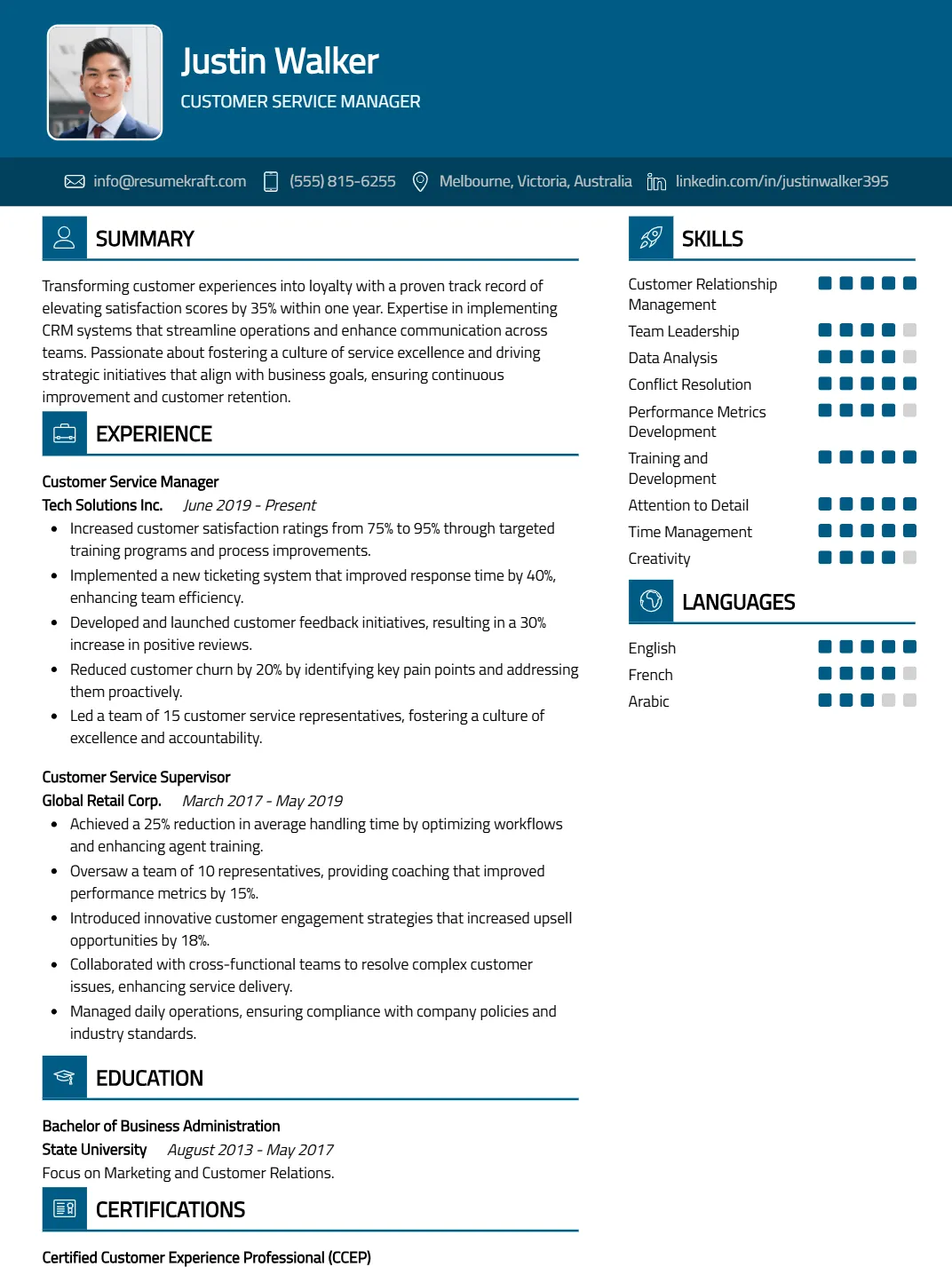

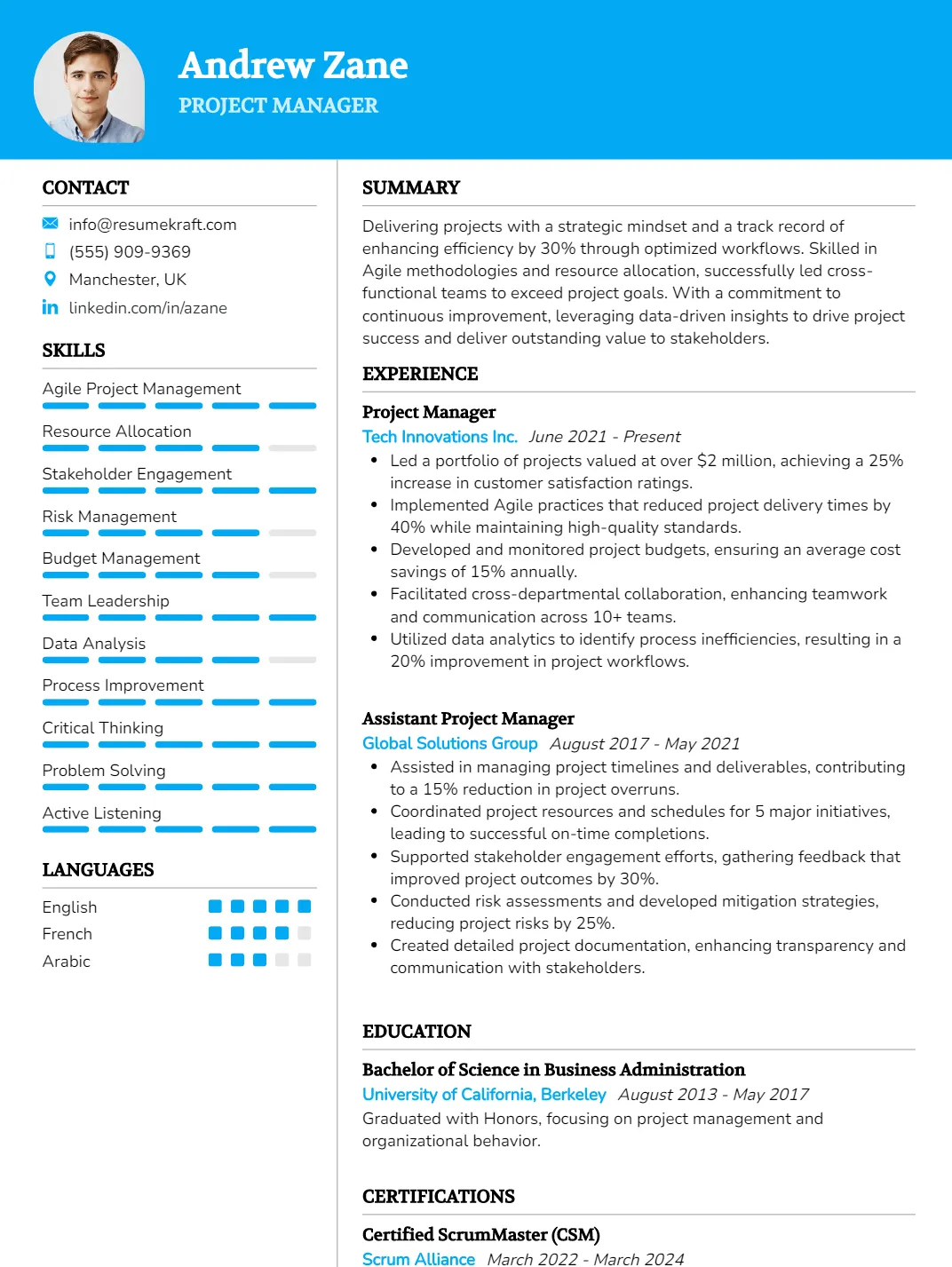

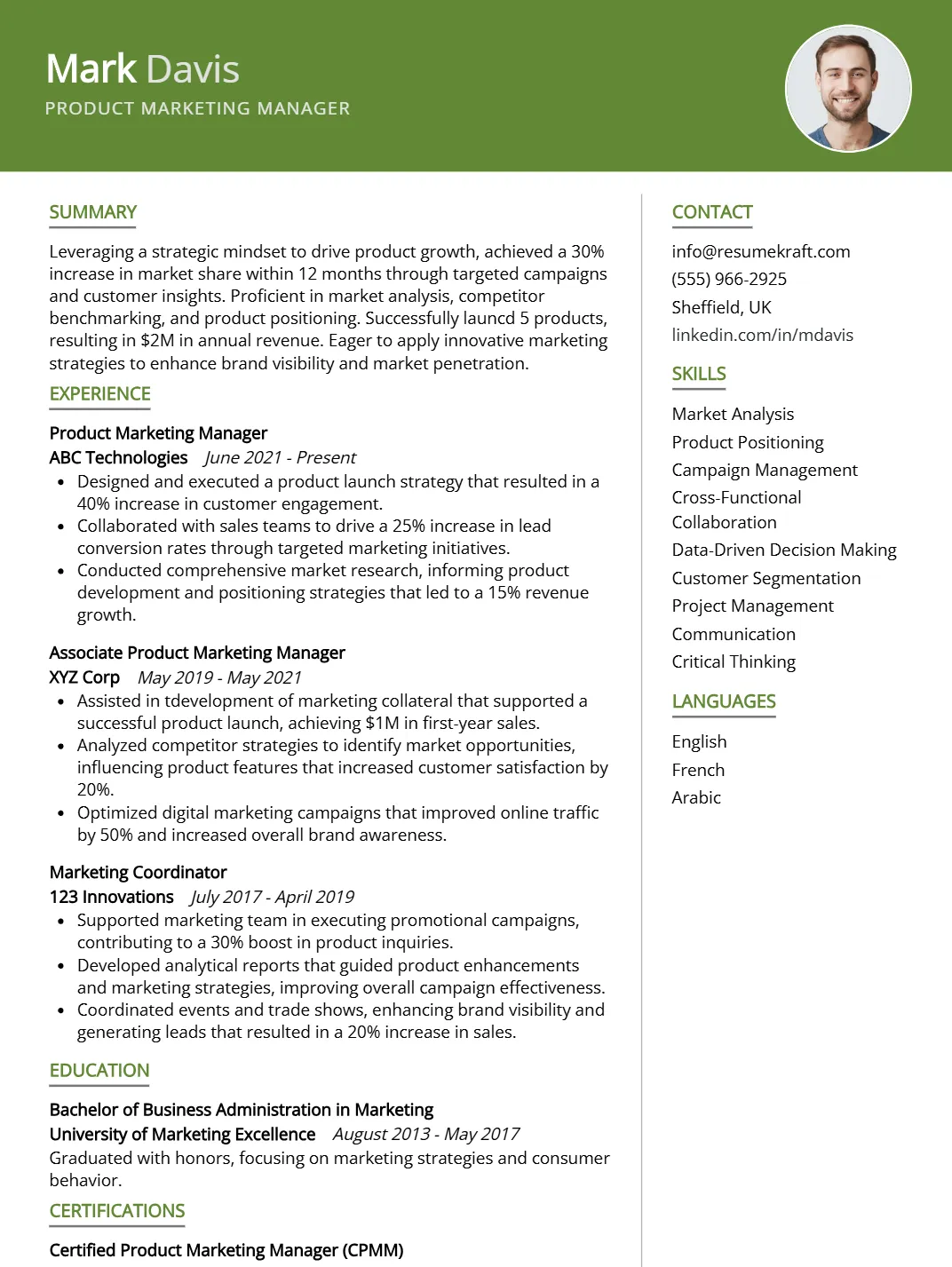

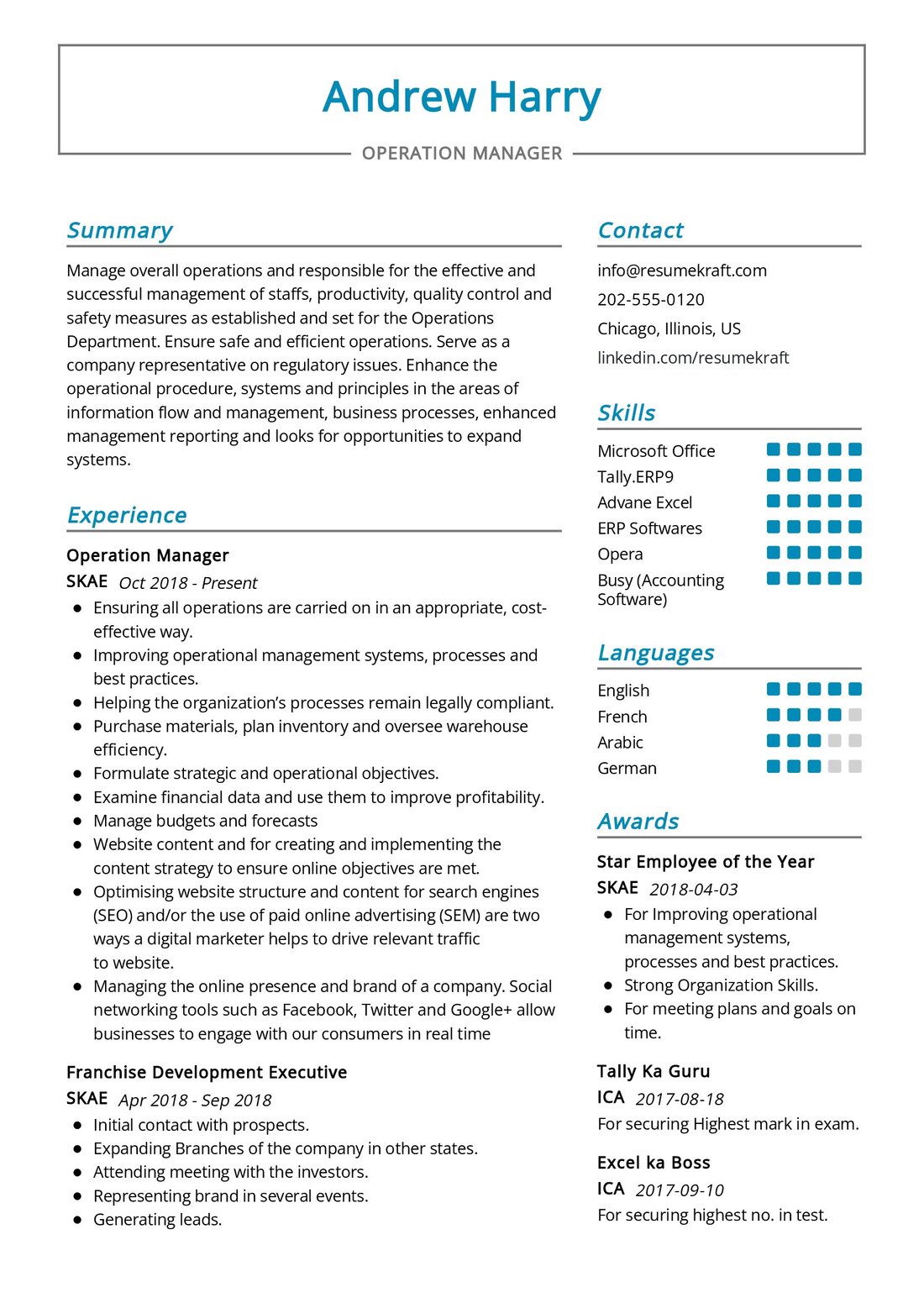

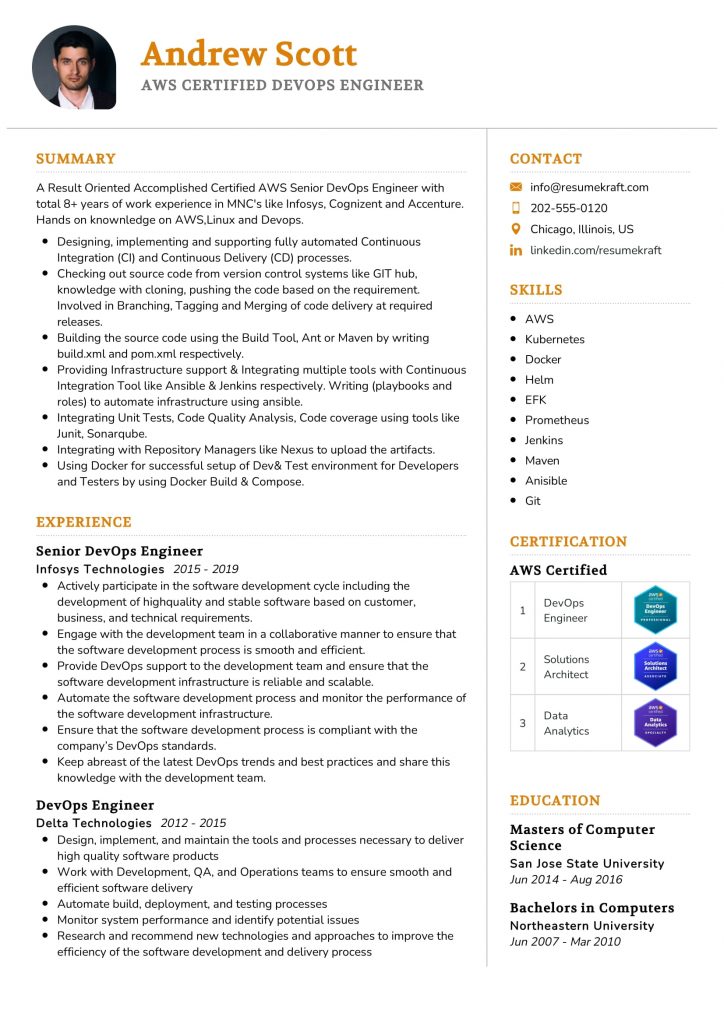

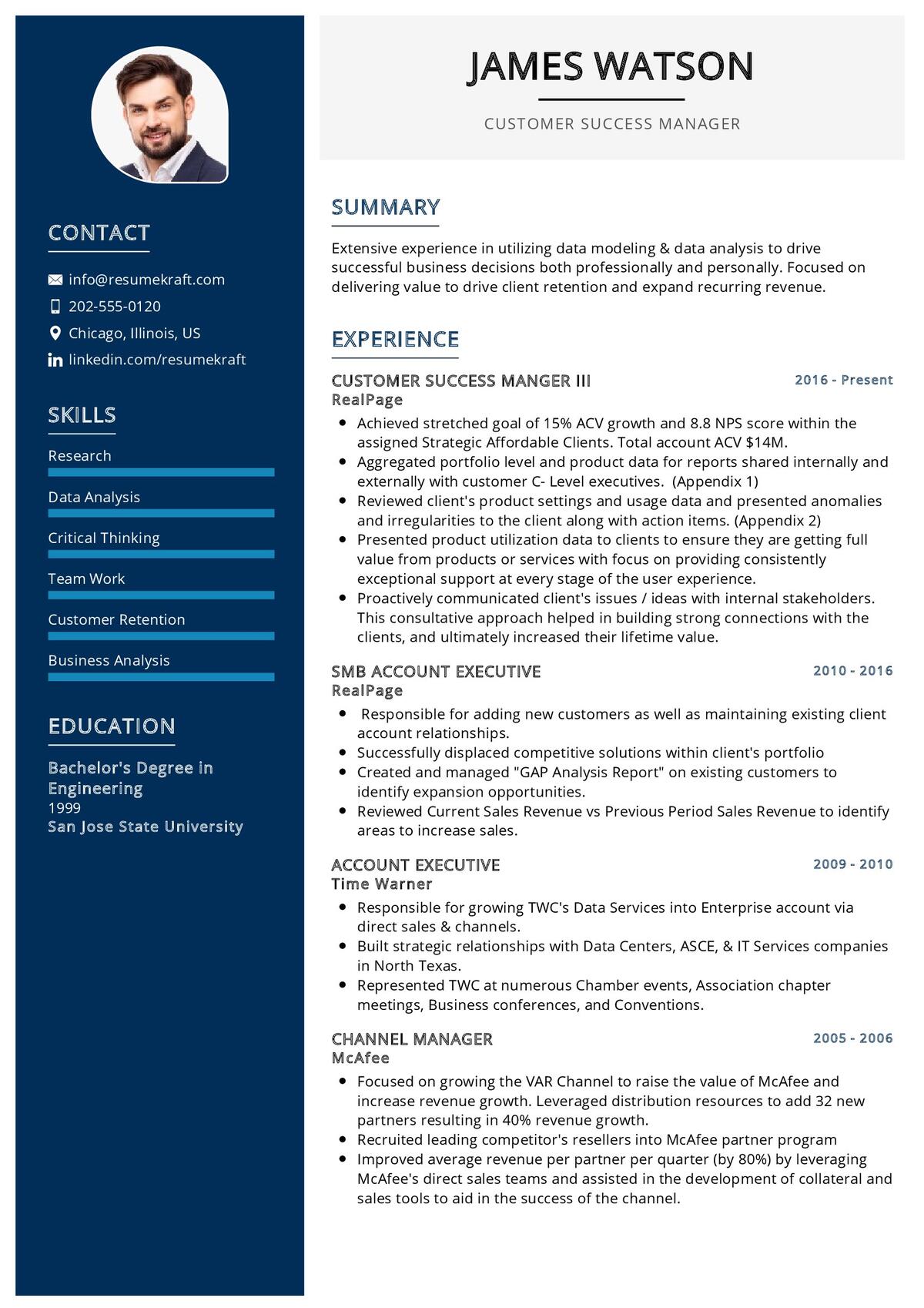

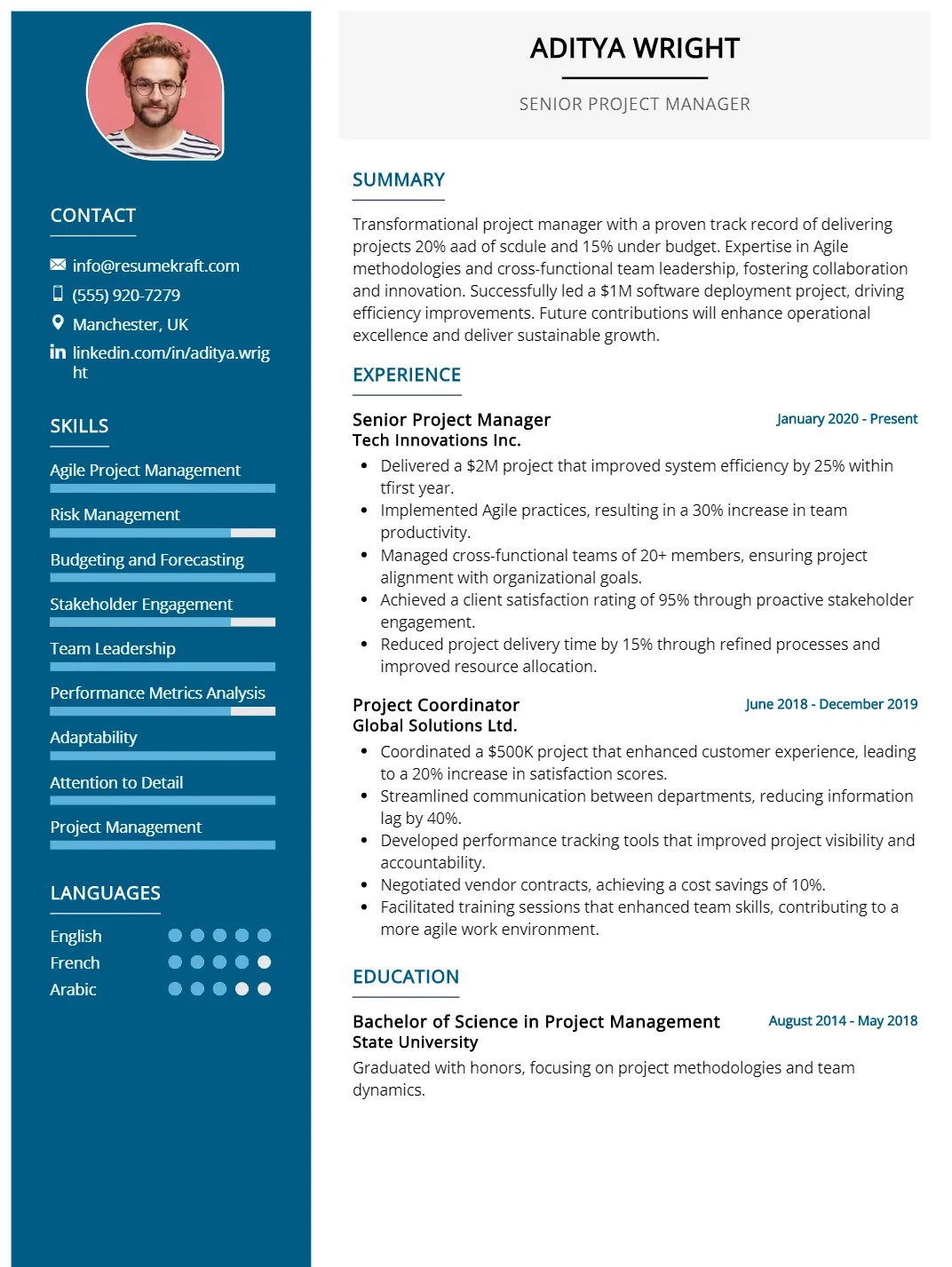

Sample Resume

Tip: Make resume writing easier and smarter with our AI resume builder, designed to optimize your content instantly.

Why Project Manager Resume Keywords Matter

- Enhancing ATS Compatibility

Many companies use applicant tracking systems to manage the hiring process. These systems parse resumes and rank them by how well their keywords match the job. Without strong keyword alignment, your resume may be filtered out before a recruiter ever sees it. The right mix of keywords helps you score higher and move closer to the recruiter's shortlist.

- Mimicking Job Descriptions

Recruiters choose specific phrases for job ads because those words describe the core skills they need. Aligning your resume's keywords with the posting shows the employer that you understand the job requirements, an indirect but powerful signal of fit.

- Showcasing Your Professional Skills

Well-chosen keywords also demonstrate expertise. When they appear naturally within your achievements, they make your resume persuasive rather than overstuffed and keep it easy to read.

- Enhancing Visibility on Job Sites

On sites such as LinkedIn or Indeed, keywords help search algorithms match candidates to relevant openings. This, in turn, increases your visibility to recruiters hiring project managers.

How to Identify the Right Keywords

Before you start adding words blindly, it’s crucial to research and identify the right ones that align with your role, experience level, and target job. Here’s how:

Step 1: Analyze Job Descriptions

Collect several project manager job postings in your industry and highlight recurring terms such as scope management, cross-functional collaboration, or Agile delivery. Terms that appear across multiple postings are likely to be critical keywords.
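If you are comparing many postings, a short script can do the highlighting for you. The sketch below is a simplified illustration, not how any particular keyword tool works: it assumes the postings are pasted in as plain text, uses a small hand-picked stopword list (an assumption you would extend), and counts two-word phrases once per posting so a term repeated inside a single ad does not inflate its score. The function names `recurring_phrases` and `words` are hypothetical.

```python
import re
from collections import Counter

# Small hand-picked stopword list (an assumption; extend it for real use).
STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "for", "with",
             "on", "or", "is", "are", "will", "you", "our", "we"}


def words(clause: str) -> list[str]:
    """Lowercase a clause and split it into words, keeping hyphenated terms."""
    return re.findall(r"[a-z]+(?:-[a-z]+)*", clause.lower())


def recurring_phrases(postings: list[str], min_postings: int = 2) -> list[tuple[str, int]]:
    """Return two-word phrases found in at least `min_postings` postings.

    Phrases are built within clauses (split on punctuation) so that
    "management, scope" does not become a false phrase. Results are
    sorted by posting count (highest first), then alphabetically.
    """
    counts: Counter[str] = Counter()
    for posting in postings:
        phrases_in_posting = set()
        for clause in re.split(r"[.,;:!?()]", posting):
            tokens = words(clause)
            for a, b in zip(tokens, tokens[1:]):
                if a not in STOPWORDS and b not in STOPWORDS:
                    phrases_in_posting.add(f"{a} {b}")
        counts.update(phrases_in_posting)  # once per posting
    hits = [(p, n) for p, n in counts.items() if n >= min_postings]
    return sorted(hits, key=lambda item: (-item[1], item[0]))


postings = [
    "Lead Agile delivery and stakeholder management for cross-functional teams.",
    "Experience with scope management and Agile delivery required.",
    "Own stakeholder management, scope management, and budget tracking.",
]
print(recurring_phrases(postings))
```

With these three sample postings, "agile delivery", "scope management", and "stakeholder management" each surface with a count of 2, while one-off phrases are dropped. Treat the output as a shortlist to review by hand, not a final keyword list.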

Step 2: Use Industry Keyword Tools

Tools like Jobscan, ResumeWorded, and skills extractors on LinkedIn can help identify the most frequently used terms in your target field.

Step 3: Review Certification Bodies

Reputable bodies such as PMI (Project Management Institute) and the organizations behind PRINCE2 often list key competencies associated with their frameworks. Those terms frequently align with industry expectations.

Step 4: Balance Hard and Soft Skills

Employers look for technical expertise and leadership attributes. Make sure your keyword mix includes both.

100+ Top Project Manager Resume Keywords and Skills

Here's a categorized list of 100+ powerful keywords and skills to feature on a project manager resume.

1. Core Project Management Skills

- Project Planning

- Project Scheduling

- Budget Management

- Resource Allocation

- Risk Management

- Scope Management

- Stakeholder Management

- Project Lifecycle Management

- Change Management

- Quality Assurance

- Cost Control

- Timeline Management

- Project Execution

- Deliverable Tracking

- Post-Project Evaluation

2. Methodologies & Frameworks

- Agile

- Scrum

- Kanban

- Waterfall

- Lean Project Management

- Six Sigma

- PRINCE2

- PMP (Project Management Professional)

- PMBOK

- SAFe (Scaled Agile Framework)

- DevOps

- Design Thinking

3. Tools & Software

- Microsoft Project

- Jira

- Trello

- Asana

- Monday.com

- Smartsheet

- ClickUp

- Basecamp

- Wrike

- Notion

- Confluence

- Google Workspace

- Slack

- Teamwork

- Microsoft Teams

4. Leadership & Communication Skills

- Team Leadership

- Cross-Functional Collaboration

- Conflict Resolution

- Decision-Making

- Strategic Planning

- Delegation

- Mentoring

- Negotiation

- Stakeholder Communication

- Meeting Facilitation

- Presentation Skills

- Executive Reporting

5. Analytical & Strategic Skills

- Data-Driven Decision Making

- KPI Tracking

- Performance Monitoring

- Risk Analysis

- Cost-Benefit Analysis

- Forecasting

- Benchmarking

- Resource Optimization

- Business Case Development

- Continuous Improvement

6. Industry-Specific Project Management Keywords

- IT Project Management

- Construction Project Management

- Engineering Projects

- Marketing Campaign Management

- Product Launch

- Financial Project Management

- Healthcare Projects

- Software Development Projects

- Infrastructure Projects

- E-commerce Initiatives

- Digital Transformation Projects

7. Certifications & Credentials

- PMP Certified

- CAPM Certified

- PRINCE2 Certified

- PMI Agile Certified Practitioner (PMI-ACP)

- Certified ScrumMaster (CSM)

- Six Sigma Green Belt

- Six Sigma Black Belt

- SAFe Certified

- ITIL Certified

8. Soft Skills

- Organization

- Adaptability

- Time Management

- Problem Solving

- Attention to Detail

- Collaboration

- Multitasking

- Resilience

- Creativity

- Critical Thinking

9. Technical & Digital Skills

- ERP Systems

- MS Office Suite (Excel, PowerPoint, Word)

- Google Analytics

- Power BI / Tableau

- Data Visualization

- Workflow Automation

- Budget Tracking Tools

- Cloud Platforms (AWS, Azure)

10. Action Verbs for Resume Bullet Points

- Achieved

- Analyzed

- Coordinated

- Delivered

- Directed

- Executed

- Facilitated

- Implemented

- Initiated

- Led

- Managed

- Optimized

- Organized

- Oversaw

- Planned

- Prioritized

- Streamlined

- Supervised

- Tracked

- Upgraded

How to Integrate Keywords Effectively

1. Natural Context Matters

Avoid keyword stuffing. Many modern ATS platforms weigh context as well as raw keyword counts, and recruiters quickly notice repetition. Rather than repeating "risk management" ten times, use it once in a meaningful context:

Reduced project risk by implementing a structured risk management framework that lowered cost overruns by 12%.

2. Use Keywords Across All Resume Sections

Distribute keywords evenly throughout:

- Resume summary: Embed 3–4 high-impact keywords

- Skills section: List core competencies clearly

- Experience: Place action-oriented keywords in bullet achievements

- Education and Certifications: Include accredited credentials

3. Vary Keyword Usage

Use synonyms or related terms to match the different ways employers phrase the same skill. For example, budget control, cost optimization, and financial oversight describe overlapping responsibilities.

4. Align with Metrics

Pair keywords with measurable impact. This anchors your achievements in data, increasing credibility.

Example:

Delivered 20+ Agile projects with 95% on-time completion rate, improving workflow efficiency by 30%.

5. Mirror Each Job Description

Customize your resume for each application. Even minor phrasing changes that align with the employer's own language can affect ATS rankings.
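Before submitting a tailored resume, you can check which of the posting's keywords it actually contains. The sketch below is a deliberately simplified illustration: real ATS scoring is proprietary and varies by vendor, so this only reports exact, case-insensitive phrase matches and a coverage ratio. The function name `keyword_coverage` and the sample resume text are hypothetical.

```python
import re


def keyword_coverage(resume: str, keywords: list[str]) -> tuple[float, list[str], list[str]]:
    """Report which job-posting keywords appear in the resume text.

    Matching is case-insensitive and whole-phrase: "Agile" matches
    "Agile delivery", but "Scrum" does not match "Scrumban".
    Returns (coverage ratio, found keywords, missing keywords).
    """
    text = resume.lower()
    found: list[str] = []
    missing: list[str] = []
    for kw in keywords:
        # Lookarounds instead of \b so phrases like "Monday.com" still match cleanly.
        pattern = r"(?<!\w)" + re.escape(kw.lower()) + r"(?!\w)"
        (found if re.search(pattern, text) else missing).append(kw)
    coverage = len(found) / len(keywords) if keywords else 0.0
    return coverage, found, missing


resume = ("PMP-certified project manager. Led Agile delivery using Jira and "
          "Confluence; owned stakeholder management.")
keywords = ["Agile", "Stakeholder Management", "Jira", "Scrum", "Budget Management"]
coverage, found, missing = keyword_coverage(resume, keywords)
print(f"{coverage:.0%} covered; missing: {missing}")
```

Here the resume covers three of the five keywords, and the missing list ("Scrum", "Budget Management") shows where to add wording, but only if it honestly reflects your experience.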

Project Manager Resume Summary Examples Using Keywords

Below are keyword-optimized summary samples crafted for various project management profiles.

Example 1: IT Project Manager

PMP-certified Project Manager with 8+ years of experience leading cross-functional Agile teams. Skilled in Software Development Life Cycle (SDLC) management, stakeholder communication, and execution of cloud migration projects. Proficient in Jira, Confluence, and Microsoft Project.

Example 2: Construction Project Manager

Results-oriented Construction Project Manager with broad expertise in cost estimation, resource scheduling, and site management. Experienced in directing safety compliance operations and overseeing subcontractors on multimillion-dollar projects. Proven track record of successful project delivery using Lean and PRINCE2 methodologies.

Example 3: Marketing Project Manager

Creative Project Manager skilled in digital campaign execution, brand development, and performance analytics, with experience coordinating cross-departmental efforts through Asana and Monday.com. Experienced in stakeholder collaboration and timeline optimization across multiple channels.

Common Mistakes to Avoid When Using Keywords

No matter how skilled you are, certain keyword mistakes can still drag down your resume's ranking. Watch out for these:

- Stuffing your resume with the same keyword or redundant synonyms repeated over and over.

- Using "results-oriented" without any supporting context, which turns it into a vague buzzword.

- Cutting and pasting entire phrases from the job description.

- Mentioning software or frameworks that you have never worked with.

- Not adjusting keywords for each job application.

Chasing keyword density word by word will always lose out to consistency, accuracy, and context.

Final Thoughts

In today's job market, keywords act as a digital handshake between your resume and the recruiter. An optimized resume speaks to both the hiring system and the human reader: it passes ATS filters and then gives the recruiter concrete, impactful detail to engage with.

For project managers, this means choosing precise wording over catchy buzzwords. Prioritize accuracy, context, modern methodologies, and quantifiable achievements. As business practices shift and digital innovation takes center stage, highlighting keywords around automation, collaboration, and data-driven strategy will help future-proof your resume.

A keyword-optimized project manager resume does more than attract attention; it also strengthens your personal brand. Used thoughtfully and with the right focus, keywords convey your capacity to produce tangible results, provide effective leadership, and help the organization achieve its goals.