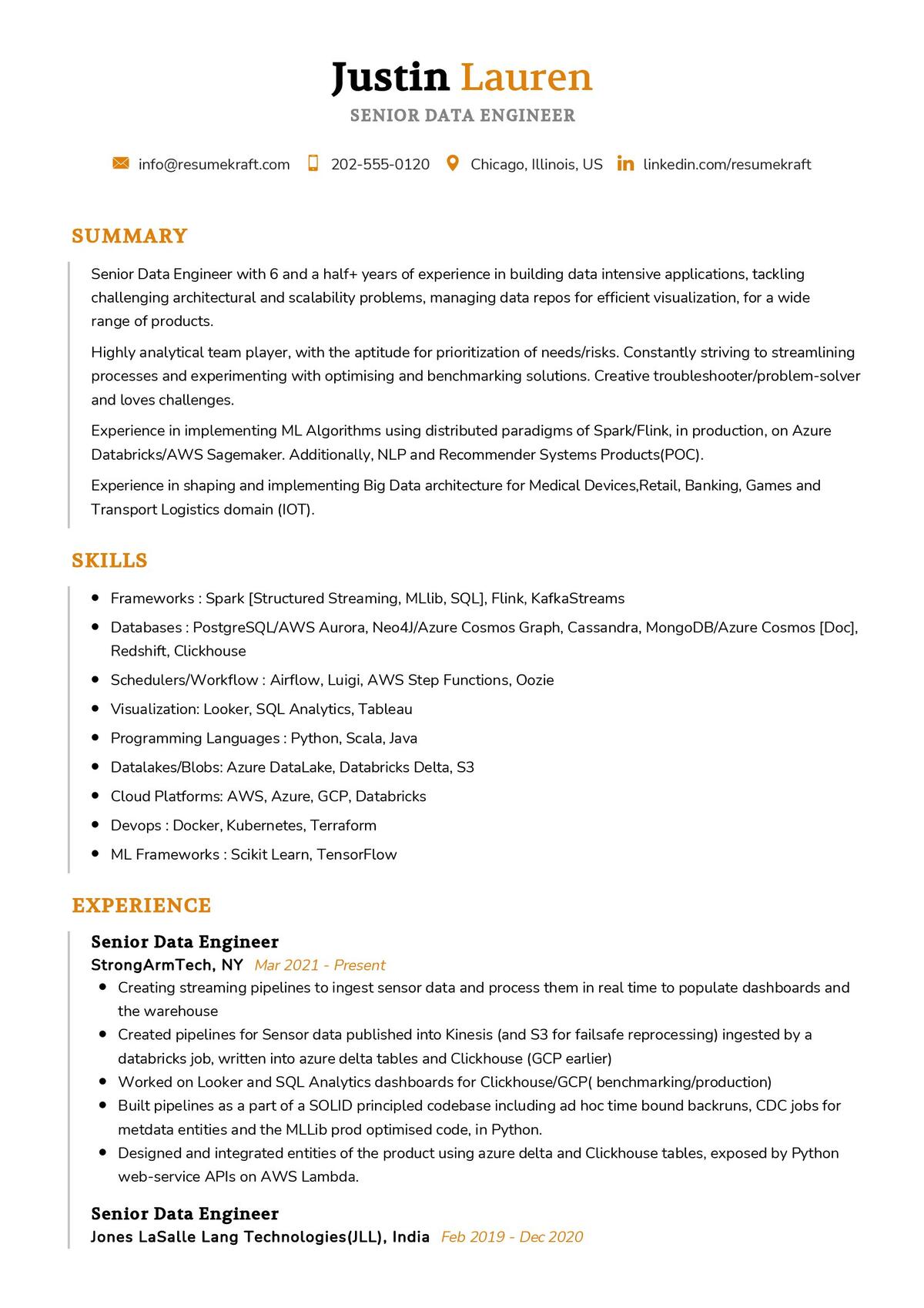

Justin Lauren

Senior Data Engineer

Summary

Senior Data Engineer with 6.5+ years of experience building data-intensive applications, tackling challenging architectural and scalability problems, and managing data repositories for efficient visualization across a wide range of products.

Highly analytical team player with an aptitude for prioritizing needs and risks. Constantly strives to streamline processes and to experiment with optimising and benchmarking solutions. Creative troubleshooter and problem-solver who loves challenges.

Experience implementing ML algorithms in production using the distributed paradigms of Spark/Flink on Azure Databricks/AWS SageMaker. Additionally, experience with NLP and recommender-system products (POC).

Experience shaping and implementing Big Data architecture for the Medical Devices, Retail, Banking, Games, and Transport Logistics (IoT) domains.

Skills

- Frameworks: Spark [Structured Streaming, MLlib, SQL], Flink, Kafka Streams

- Databases: PostgreSQL/AWS Aurora, Neo4j/Azure Cosmos Graph, Cassandra, MongoDB/Azure Cosmos [Doc], Redshift, ClickHouse

- Schedulers/Workflow: Airflow, Luigi, AWS Step Functions, Oozie

- Visualization: Looker, SQL Analytics, Tableau

- Programming Languages: Python, Scala, Java

- Data Lakes/Blobs: Azure Data Lake, Databricks Delta, S3

- Cloud Platforms: AWS, Azure, GCP, Databricks

- DevOps: Docker, Kubernetes, Terraform

- ML Frameworks: scikit-learn, TensorFlow

Work Experience

Senior Data Engineer

- Created streaming pipelines to ingest sensor data and process it in real time to populate dashboards and the warehouse.

- Created pipelines for sensor data published to Kinesis (and to S3 for failsafe reprocessing), ingested by a Databricks job, and written into Azure Delta tables and ClickHouse (GCP earlier).

- Worked on Looker and SQL Analytics dashboards for ClickHouse/GCP (benchmarking/production).

- Built pipelines in Python as part of a codebase following SOLID principles, including ad hoc time-bound backruns, CDC jobs for metadata entities, and production-optimised MLlib code.

- Designed and integrated product entities using Azure Delta and ClickHouse tables, exposed through Python web-service APIs on AWS Lambda.

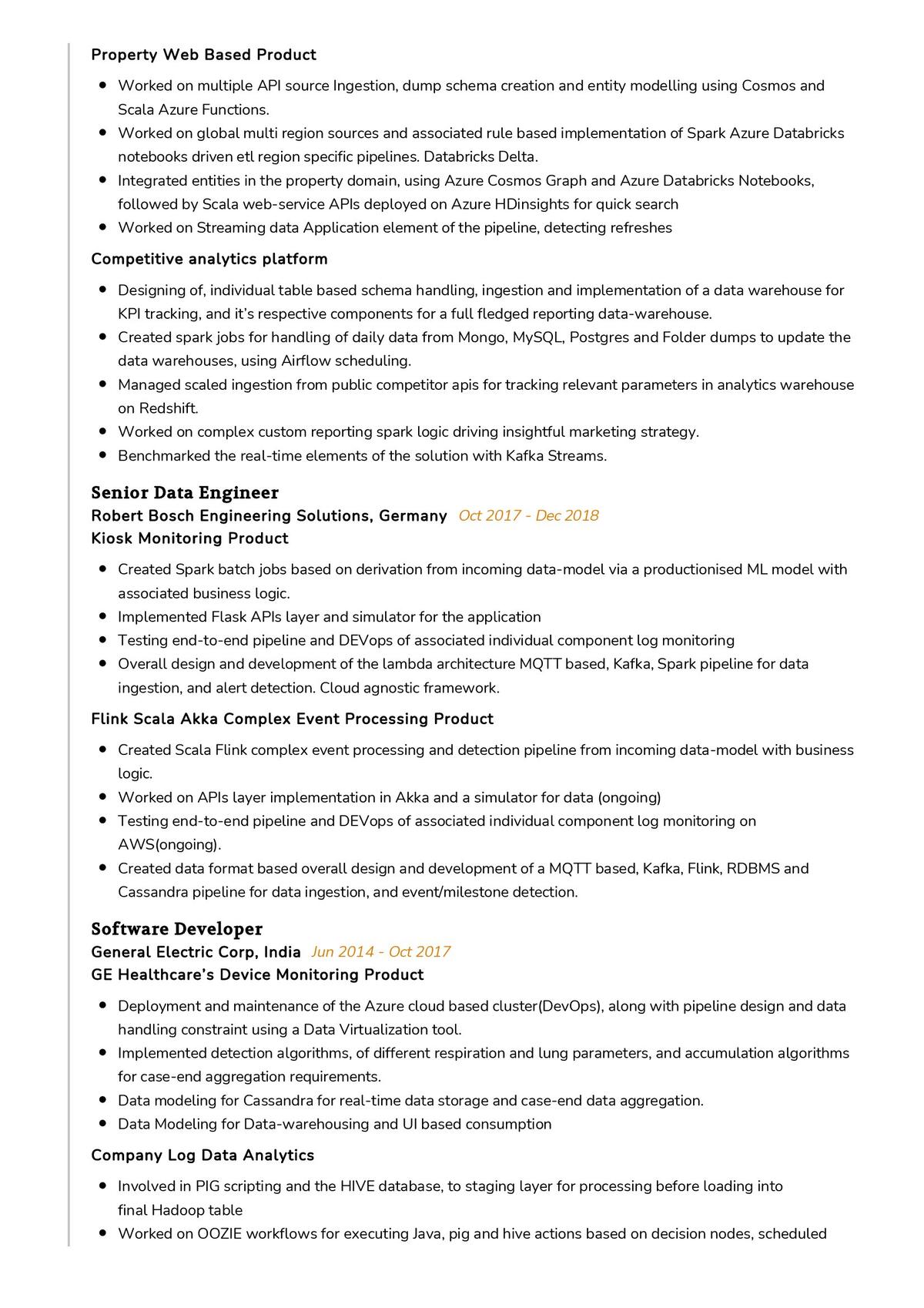

Senior Data Engineer

Property Web Based Product

- Worked on ingestion from multiple API sources, dump schema creation, and entity modelling using Cosmos and Scala Azure Functions.

- Worked on global multi-region sources and the associated rule-based implementation of region-specific ETL pipelines driven by Spark notebooks on Azure Databricks, using Databricks Delta.

- Integrated property-domain entities using Azure Cosmos Graph and Azure Databricks notebooks, with Scala web-service APIs deployed on Azure HDInsight for quick search.

- Worked on the streaming data application component of the pipeline, detecting refreshes.

Competitive Analytics Platform

- Designed per-table schema handling, ingestion, and implementation of a data warehouse for KPI tracking, along with its components for a full-fledged reporting data warehouse.

- Created Spark jobs, scheduled with Airflow, to process daily data from MongoDB, MySQL, PostgreSQL, and folder dumps and update the data warehouses.

- Managed scaled ingestion from public competitor APIs to track relevant parameters in the Redshift analytics warehouse.

- Worked on complex custom Spark reporting logic that informed marketing strategy.

- Benchmarked the real-time elements of the solution with Kafka Streams.

Senior Data Engineer

Kiosk Monitoring Product

- Created Spark batch jobs that derive results from the incoming data model through a productionised ML model and associated business logic.

- Implemented the Flask API layer and a simulator for the application.

- Tested the end-to-end pipeline and handled DevOps for log monitoring of the individual components.

- Designed and developed the overall cloud-agnostic Lambda-architecture pipeline based on MQTT, Kafka, and Spark for data ingestion and alert detection.

Flink Scala Akka Complex Event Processing Product

- Created a Scala/Flink complex event processing and detection pipeline over the incoming data model, with business logic.

- Worked on the API layer implementation in Akka and a data simulator (ongoing).

- Testing the end-to-end pipeline and handling DevOps for log monitoring of the individual components on AWS (ongoing).

- Created the overall data-format-driven design and developed an MQTT-based pipeline using Kafka, Flink, an RDBMS, and Cassandra for data ingestion and event/milestone detection.

Software Developer

GE Healthcare’s Device Monitoring Product

- Deployed and maintained the Azure cloud-based cluster (DevOps), and handled pipeline design and data-handling constraints using a data virtualization tool.

- Implemented detection algorithms for various respiration and lung parameters, as well as accumulation algorithms for case-end aggregation requirements.

- Performed Cassandra data modeling for real-time data storage and case-end data aggregation.

- Performed data modeling for data warehousing and UI-based consumption.

Company Log Data Analytics

- Worked on Pig scripting and Hive for the staging layer, processing data before loading it into the final Hadoop tables.

- Worked on Oozie workflows executing Java, Pig, and Hive actions based on decision nodes, and scheduled Oozie workflow and coordinator jobs.

Education

Bachelor's in Engineering

Languages

- English

- French

- Arabic

- German

Career Expert Tips:

- Choose a resume format that suits your professional experience.

- Write your resume in a way that highlights your competencies.


What Should Be Included In A Senior Data Engineer Resume?

As a senior data engineer, your resume should showcase your technical skills, professional experience and qualifications. It should also demonstrate your ability to bring innovative ideas and solutions to the organization. When writing a resume for senior data engineer positions, it is important to focus on the skills and accomplishments that make you stand out from the competition.

The most effective senior data engineer resumes should include an overview of your experience, qualifications, and technical skills. You should also include a list of applicable technologies and relevant certifications that demonstrate your expertise. It is also important to highlight your communication and organizational skills.

In addition, include a list of past projects you have completed. This shows that you can deliver projects successfully and lets potential employers see your skills in action. Finally, include any awards or recognitions you have received in your field; these can help you stand out from the competition and showcase your abilities.

In conclusion, your senior data engineer resume should showcase your technical skills, professional experience and qualifications. It should also include a list of applicable technologies and relevant certifications, a list of past projects, and any awards or recognitions you have received in your field. By including all of these items, you will make your resume stand out and show employers that you are the right candidate for the position.

What Skills Should I Put On My Resume For Senior Data Engineer?

As a Senior Data Engineer, you should be sure to include a range of skills on your resume that are related to the position. Your technical abilities should be the focus of your resume, as these are often the most important skills for this role. You should include a section for your programming languages, as well as the tools and frameworks you are familiar with. Additionally, you should list the databases and data warehouses that you have experience working with. Finally, you should include a section for any data cleaning and data analysis skills that you possess.

You should also include a section for general skills related to the position. These can include project management, problem solving, and team collaboration. Your communication skills should also be highlighted, as data engineering is a role that often requires working with different teams and stakeholders. Finally, you should include any certifications or additional training that you have taken related to data engineering.

By including all of the appropriate skills on your resume, you will show potential employers that you are an experienced and qualified candidate for the Senior Data Engineer role. Additionally, including the right types of skills will help you stand out from other applicants and demonstrate that you are knowledgeable and prepared for the role.

What Is The Job Description Of The Senior Data Engineer?

A Senior Data Engineer is responsible for the design, development, and deployment of large-scale data systems. They must be able to define and implement data solutions, design and implement data architectures, and analyze and improve data performance. Senior Data Engineers are also responsible for ensuring that data systems remain reliable, secure, and efficient.

In order to excel in this profession, Senior Data Engineers must possess an in-depth understanding of data structures, algorithms, and software development. They should also be experienced in working with a variety of databases, data warehouses, and other data sources, and be able to design and implement data pipelines. Other important skills include data analytics, data visualization, and problem solving.

Senior Data Engineers must have a solid understanding of data security and the ability to recognize, prevent, and respond to cybersecurity risks. They should be able to develop and maintain complex data systems and have deep knowledge of several programming languages. Finally, they should be able to collaborate closely with other departments, such as IT and software engineering, to ensure that data systems run smoothly.

What Is A Good Objective For A Senior Data Engineer Resume?

A good objective for a Senior Data Engineer resume should emphasize the candidate’s technical skillset, ability to manage teams, and knowledge of data engineering principles. It should also mention the candidate’s commitment to data quality and accuracy, as well as their ability to manage and analyze large amounts of data.

When writing an objective for a Senior Data Engineer position, it’s important to include the specific tasks and responsibilities that the job requires. For example, an objective might mention developing, maintaining and deploying data management systems, or designing and building the data pipelines and architectures necessary to store, transform and integrate data. Additionally, it might mention the candidate’s experience with data analytics, SQL programming, ETL processes, data modeling, data warehousing, and machine learning.

Finally, a good objective for a Senior Data Engineer should emphasize the candidate’s technical proficiency, leadership skills, and attention to detail. It is also important to demonstrate the candidate’s commitment to delivering solutions and projects on time and to specification.

These professionals need a strong background in programming, mathematics, and statistics, as well as experience with big data technologies such as Hadoop, Spark, and NoSQL databases. Data engineers with the right qualifications and experience can expect to make an average salary of $90,000 per year. With the right combination of education, experience, and certifications, data engineers can also expect access to a range of benefits, including bonuses and stock options. With the right credentials, knowledge, and experience, they can build efficient, secure, reliable, and scalable data systems.

What Are The Career Prospects In The Senior Data Engineer?

Senior Data Engineers are highly valuable professionals within the tech industry due to their expertise in the area of data engineering. They are responsible for designing, building, and managing data-driven architectures that are used to extract, transform, and load data into databases and other systems. As such, they are responsible for ensuring that data is secure, accurate, and compliant with industry standards. In addition, they are often tasked with creating and maintaining data-driven business intelligence tools that help organizations make better decisions.

The demand for Senior Data Engineers is increasing rapidly in today’s market, which makes this a very lucrative career path. Senior Data Engineers typically have an advanced degree in Computer Science, Data Analysis, or a related field. They also possess strong problem-solving and analytical skills, as well as the ability to work collaboratively within a team.

Senior Data Engineers are usually employed by large corporations, government agencies, and other organizations needing to analyze large amounts of data. They are often responsible for developing and maintaining data warehouses, designing and implementing data models, and creating algorithms to process data. They also play an important role in system design, helping to ensure the integrity of data and provide secure access to data. As such, they must have strong technical skills and a deep understanding of data structures, principles, and concepts.

Due to the increasing demand for data engineers and the complexity of the job, these professionals can command a salary well above the average. Depending on the organization and the individual's experience, Senior Data Engineers can make anywhere from $80,000 to $150,000 a year. In addition to a competitive salary, data engineers are also often eligible for bonuses, stock options, and other benefits that can further increase their income. As the demand for data engineers continues to grow, employers are also offering flexible hours, remote work, and other benefits to attract the best talent.

Key Takeaways for a Senior Data Engineer Resume

When it comes to crafting a Senior Data Engineer resume, there are many important things to keep in mind. Whether you are applying to a startup or a large corporation, your resume should be tailored to fit the company and the position you are applying for. As a Senior Data Engineer, your skills and experience should be highlighted, as should any certifications or qualifications you may have. Here are some of the key takeaways for an outstanding Senior Data Engineer resume:

First, highlight your technical skills. As a Senior Data Engineer, you will need to be able to demonstrate a deep knowledge of data engineering and analytics tools, such as SQL, Hadoop, Python, Spark, and more. Make sure to include these skills prominently on your resume and provide examples of the projects or tasks you have completed using those tools.

Second, emphasize your problem-solving abilities. Companies are looking for Senior Data Engineers who can approach problems from a creative and analytical perspective. Showcase your experience in identifying and solving complex data engineering issues, as well as your ability to think critically and develop creative solutions.

Third, demonstrate your leadership skills. As a Senior Data Engineer, you will likely be expected to lead and mentor other engineers in the company. Make sure to include any past leadership roles on your resume, and demonstrate your ability to lead and manage projects.

With the right combination of education, experience, and certifications, these professionals can expect to earn even more. Data engineers also need to stay up to date on the latest trends, technologies, and architectures, and need a deep understanding of data structures. Data engineers are responsible for designing, developing, and managing large-scale data systems and ensuring that data flows smoothly between different systems.

By following these key takeaways, you can create an outstanding Senior Data Engineer resume that will help you land the job of your dreams. With the right experience and skill set, you can become a successful Senior Data Engineer and earn a lucrative salary. As a Senior Data Engineer, you will be responsible for the design, development, and optimization of data systems and processes. You’ll need to have a deep understanding of data structure and algorithms, as well as an understanding of software engineering principles.

You’ll also need to showcase your communication and collaboration skills. You should be able to work with people from different departments and backgrounds, so be sure to include any relevant experience on your resume. You should also demonstrate your ability to effectively communicate ideas and collaborate with other teams. Additionally, you should highlight your coding and software development expertise, as well as any other relevant experience.

As a Senior Data Engineer, you will be responsible for managing the development of data systems and data solutions. You will need to work with a variety of data sources, such as databases, big data solutions, and cloud technologies. You'll need to be able to design, develop, and optimize data solutions, as well as maintain and improve existing data systems.

Additionally, you will need to stay up to date with the latest data engineering technologies and trends. You should also have a strong understanding of software engineering principles and be able to explain complex data concepts to a variety of audiences. Highlight your coding and software development expertise, along with any other relevant experience such as project management, data analysis, or machine learning.

Finally, showcase your communication and collaboration skills. Senior Data Engineers must be able to work with people from different departments and backgrounds, so be sure to include any relevant experience in your resume. Demonstrate your ability to effectively communicate ideas and collaborate with other teams.
